- Putting older hardware to work

- Colony West Project

- Adding the GT 620

- Follow-up on ASRock BTC Pro and other options

- More proof of concept

- Adding a GTX 680 and water cooling

- Finalizing the graphics host

- ASRock BTC Pro Kit

- No cabinet, yet…

- Finally a cabinet!… frame

- Rack water cooling

- Rack water cooling, cont.

- Follow-up on Colony West

- Revisiting bottlenecking, or why most don’t use the term correctly

- Revisiting the colony

- Running Folding@Home headless on Fedora Server

- Rack 2U GPU compute node

- Volunteer distributed computing is important

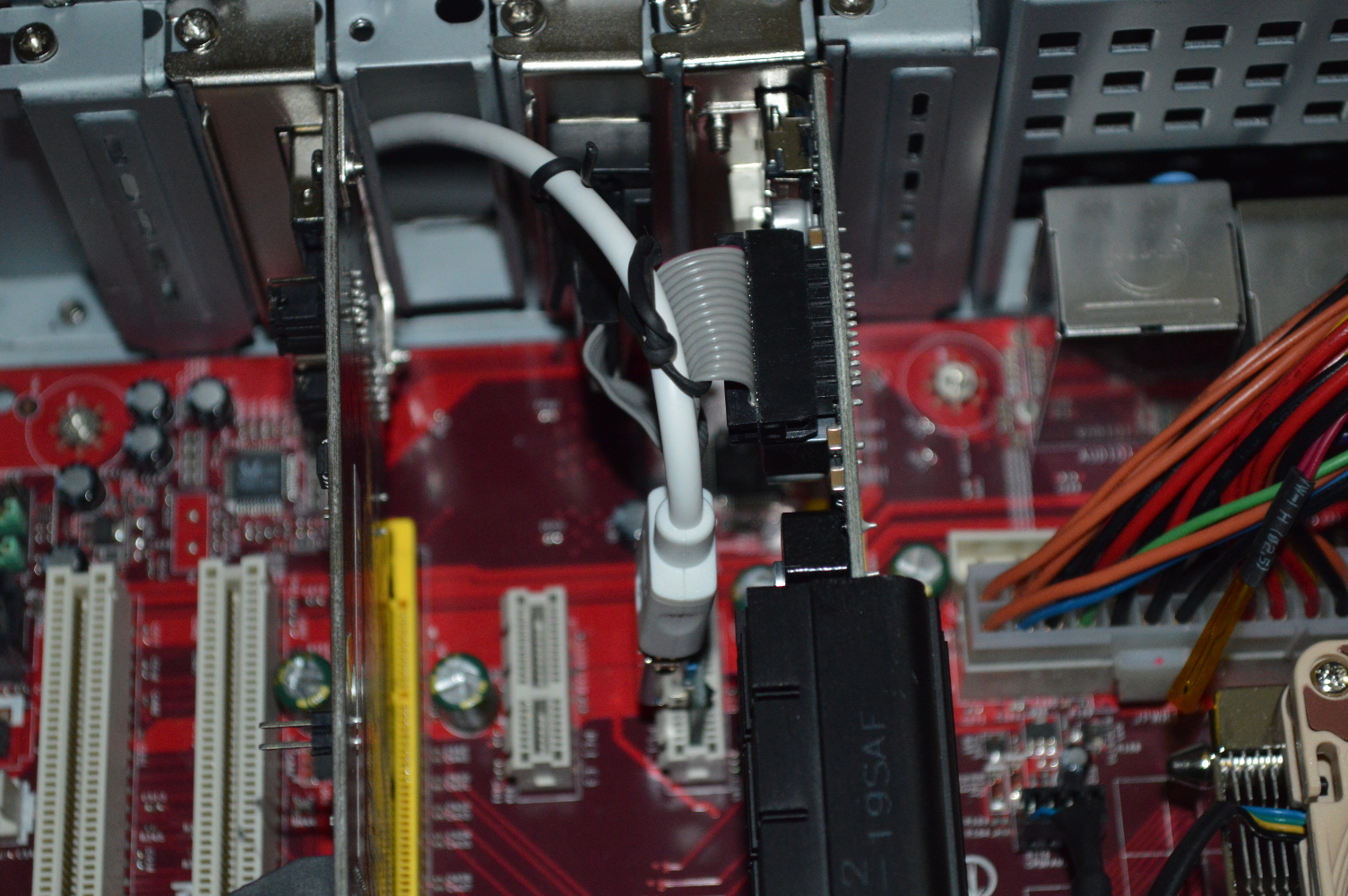

I’ll just say this up front: the ASRock PCI-E extender can be a little finicky. For some reason, the GT 620 would stop being recognized by the BOINC client after a period of time, despite the card still being detected by the system. I believe the reason for this was the SATA cables.

I wasn’t using the stock cables that came with the kit. Those are flat, and I was using round cables. But I didn’t initially realize they weren’t the exact same length. So while they looked to be running fine for a period of time, I guess eventually there would be enough latency that the data across the two cables would get out of sync with each other and it’d stop working right. So I changed the cables over to two of the same length, but I didn’t leave the GT 620 connected to it.

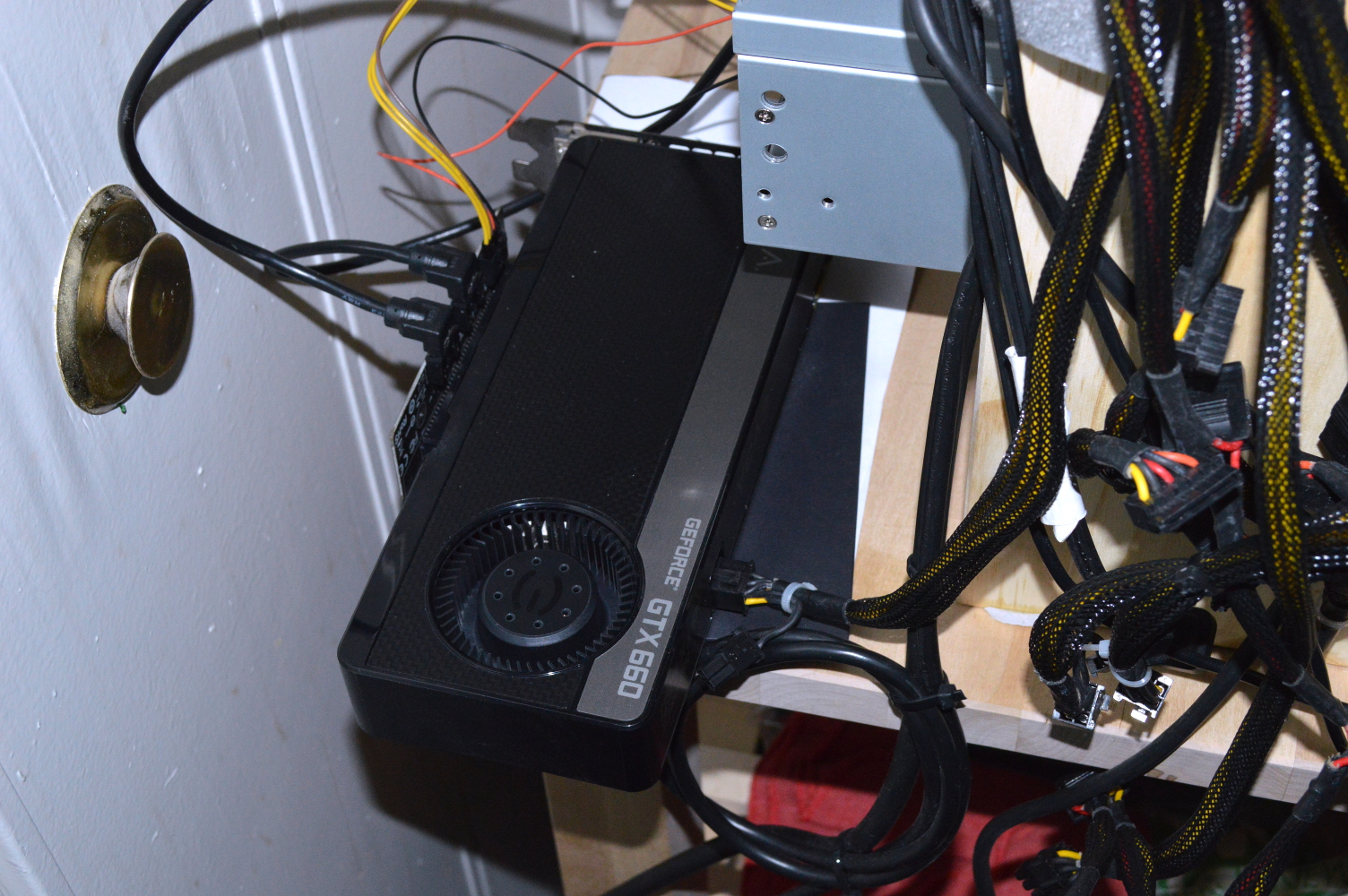

Instead I connected one of the GTX 660s. And to ensure it was getting adequate power, I plugged it into a second power supply I had lying around (Corsair GS800). I'm not entirely sure it’s necessary, but I’d rather have it than not. The card sat at just under 60°C with a fan speed of 40%. Having a fan blowing on it (a Corsair SP120 with a low-noise adapter) lowered the temperature a little, and also allowed cool air to mix in with the hot air coming out the back.

The reference blower isn’t the greatest for keeping a graphics card cool — which is why the various brands sometimes make their own coolers for these cards. So when I actually build this out into a final configuration, it’ll likely be water cooled. The GT 620 runs cooler than the GTX 660 — but it’s also not able to process nearly as quickly, so putting a universal VGA block on it probably won’t offer any significant benefit.

But the GTX 660 could pull over 45 GFLOPS consistently according to the BOINC client, at least 2½ times the GT 620. So taking the two GTX 660s and the GT 620 together, I should get about 110 GFLOPS easily. For those wondering how I’m getting that number, I take the “estimated GFLOPS” for a task and divide it by the total processor time when it completes.
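As a rough sketch of that arithmetic: the function name and the example task size below are made up for illustration, and the second GTX 660 is assumed to match the first. The per-card rates are the ones quoted in this post.

```python
def achieved_gflops(estimated_gflop: float, processor_seconds: float) -> float:
    """Average rate for one finished task: estimated work over processor time."""
    if processor_seconds <= 0:
        raise ValueError("processor time must be positive")
    return estimated_gflop / processor_seconds


# Hypothetical task: 90,000 GFLOP of estimated work finished in 2,000 seconds.
example_rate = achieved_gflops(90_000, 2_000)

# Per-card rates from this post; the second GTX 660 is assumed equal to the first.
card_rates = {"GTX 660 (a)": 45.0, "GTX 660 (b)": 45.0, "GT 620": 18.0}
combined = sum(card_rates.values())

print(f"example task rate: {example_rate:.1f} GFLOPS")
print(f"combined estimate: {combined:.1f} GFLOPS")
```

With the GTX 660 figure being a floor rather than an average, the combined total landing near 110 GFLOPS is plausible.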

But this also shows that PCI-Express 1.0a x1, which is a 2 Gb/sec lane, is not a bottleneck in this setup. The USB 3.0 cable can support up to 5 Gb/sec, which is enough to handle either PCI-Express 1.0 x2 or PCI-Express 2.0 x1. The two SATA III data cables on the ASRock kit could handle 12 Gb/sec in parallel.
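To put those numbers side by side, here is a minimal sketch that only compares nominal figures. It assumes the published per-lane signaling rates for PCI-Express 1.x and 2.0 (2.5 and 5 GT/sec with 8b/10b encoding) and treats each cable's rated signaling speed as its ceiling; the helper names are made up, and nothing here measures what the extenders actually deliver.

```python
# Nominal per-lane signaling rates in GT/sec; both generations use 8b/10b encoding.
LINE_RATE_GT_S = {"1.0": 2.5, "2.0": 5.0}


def pcie_data_gbps(gen: str, lanes: int) -> float:
    """Usable data rate in Gb/sec after 8b/10b encoding overhead."""
    return LINE_RATE_GT_S[gen] * lanes * 8 / 10


def pcie_line_gbps(gen: str, lanes: int) -> float:
    """Raw signaling rate in Gb/sec that the cable has to carry."""
    return LINE_RATE_GT_S[gen] * lanes


# Rated signaling speeds of the cables used by the two extender types.
CABLE_GBPS = {"USB 3.0 cable": 5.0, "2x SATA III cables": 2 * 6.0}

for gen, lanes in [("1.0", 1), ("1.0", 2), ("2.0", 1)]:
    data = pcie_data_gbps(gen, lanes)
    line = pcie_line_gbps(gen, lanes)
    fits = [name for name, limit in CABLE_GBPS.items() if line <= limit]
    print(f"PCIe {gen} x{lanes}: {data:.1f} Gb/sec data, {line:.1f} Gb/sec on the wire, fits: {fits}")
```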

The GTX 660 is performing quite admirably compared to the GTX 770s in Beta Orionis, each of which is in a PCI-Express 2.0 x16 slot in a system powered by an FX-8350. I wouldn’t expect it to perform at the same level as a GTX 770, but staying above 45 GFLOPS against the GTX 770’s 64 GFLOPS average lines up quite well with the specifications I’ve seen online for both processors. The 770 is basically the same core as the GTX 680, though.

I’d actually be interested to see where a GTX 780 would perform, or even a GTX 980. Would the PCI-Express 1.0 lane be a bottleneck for the latest generation?

But if you want to put GPUs to work for BOINC or something similar, you don’t need the newest generation mainboard and processor — the nForce 500 SLI chipset on the MSI K9N4 SLI mainboard was released in 2006.

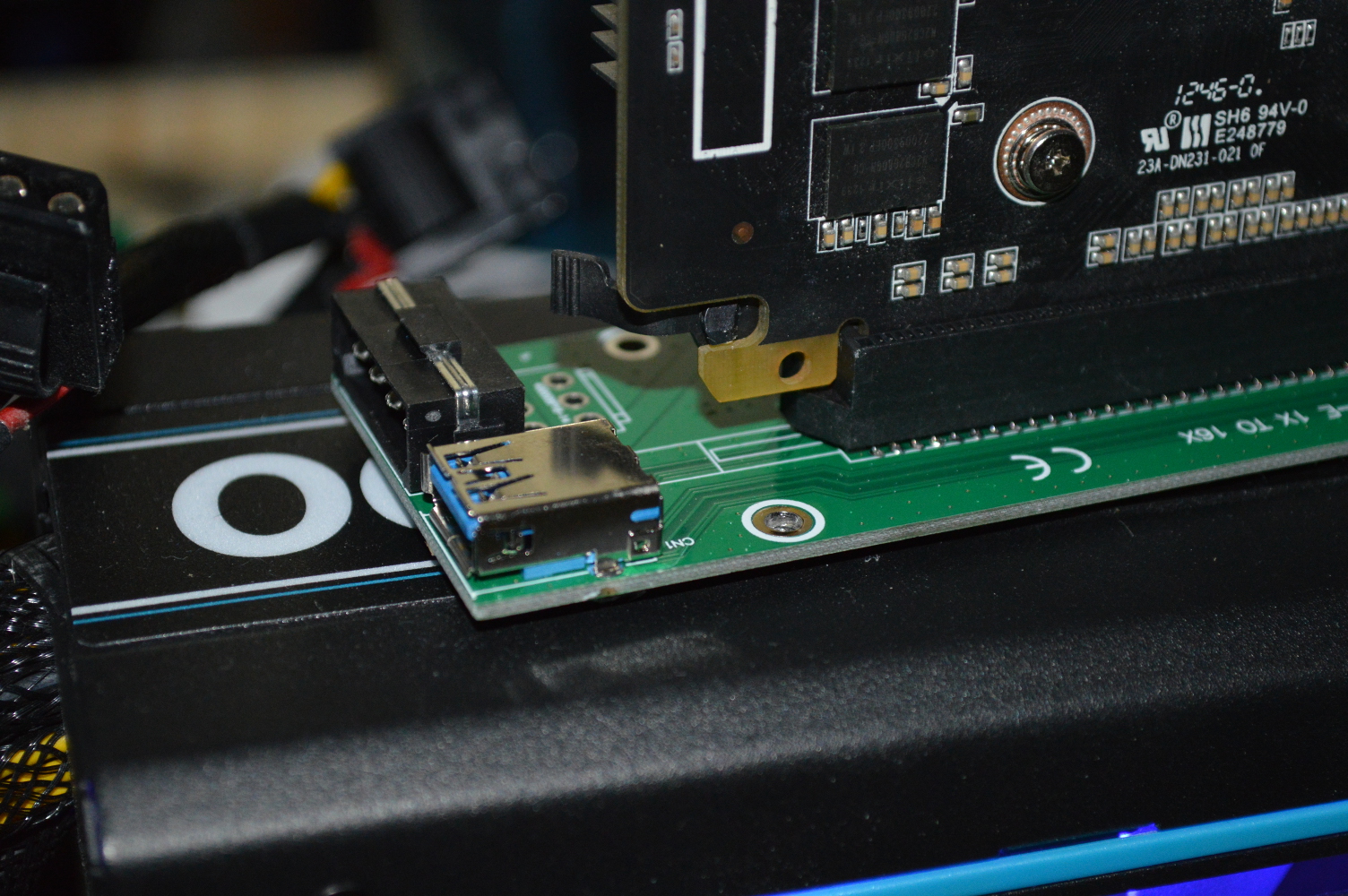

USB 3.0 PCI-Express extenders

The USB extenders arrived on Saturday. I was really stoked to use one since they are powered by a standard 4-pin Molex connector, and use a standard USB 3.0 male A to male A plug.

USB cables can be longer than SATA cables. The extenders I ordered come with 1m cables. Most SATA cables included with devices (such as the ones included with the ASRock BTC kit) are 6″ or 9″ at most, though you can buy cables that are longer. The maximum length of a SATA cable is also 1m — the ones I was using for the above experiment were about 18″.

There’s another consideration on this: USB 3.0 male A to female A panel cables.

Imagine this. Two or more graphics cards mounted into a 4U chassis — powered by its own power supply — with a USB 3.0 cable connecting it to the main system. This was the consideration I was talking about previously. This would allow you to create one system in either a 1U or 2U chassis, depending on cooling requirements, that acts as the device host while having any configuration of graphics cards in a second chassis connected only by several USB cables.

Now while similar SATA III brackets do exist, the cable length must still be considered: you are still tied to the 1m length limitation, which includes the length of the cables used in the brackets. So if you buy two brackets and they each have 12″ cables, only about 15″ of that 1m is left, and in practice you can only use a 12″ SATA cable between them.
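The length budget works out like this. It's a small sketch, and the list of off-the-shelf cable lengths is an assumption for illustration, not a catalog of what is actually sold.

```python
SATA_MAX_INCHES = 100 / 2.54  # the 1m SATA limit, about 39.4 inches

# Assumed common retail cable lengths in inches (illustrative only).
STOCK_LENGTHS = (6, 9, 12, 18, 24, 36)


def remaining_inches(bracket_cable_lengths):
    """Length left for the connecting cable once the bracket cables are counted."""
    return SATA_MAX_INCHES - sum(bracket_cable_lengths)


def longest_fit(remaining, stock=STOCK_LENGTHS):
    """Longest stock cable that stays within the remaining budget, or None."""
    fits = [length for length in stock if length <= remaining]
    return max(fits) if fits else None


left = remaining_inches([12, 12])
print(f"{left:.1f} inches left, longest stock cable that fits: {longest_fit(left)} inches")
```

A USB 3.0 extender with its 1m cable avoids this bookkeeping entirely, which is part of the appeal.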

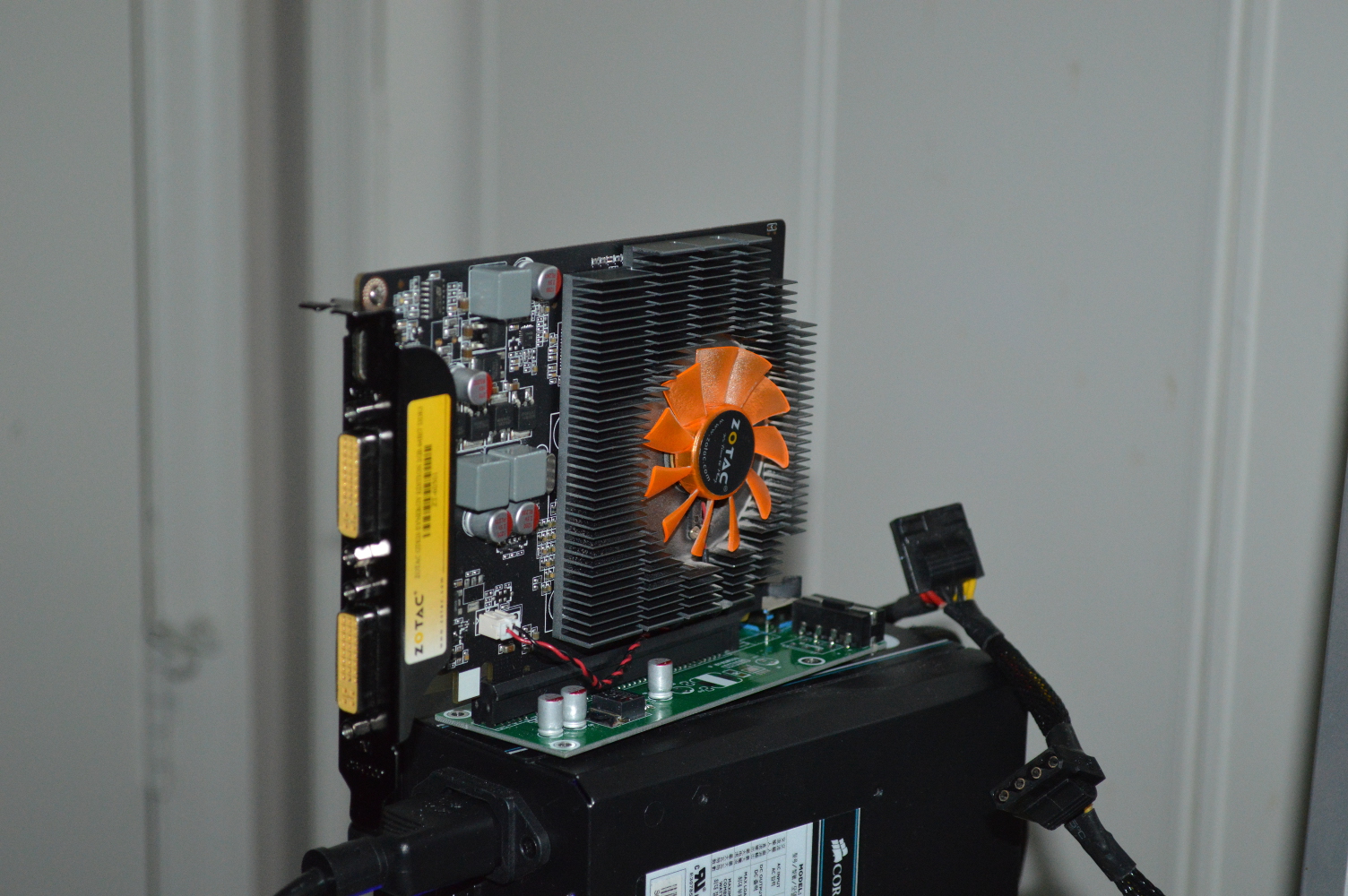

So in getting the proof of concept with the GTX 660, I connected the GT 620 to it as well to get an idea of whether I could run the two simultaneously — given the age of the mainboard — and what the BOINC client would do with them. Would it run the GPUs only and not bother with any CPU processing?

Not quite. It took a little bit for the client to realize that it could run CPU tasks on one of the cores, with the second core being used to coordinate with the graphics cards. Both cards were able to process at expected rates. The GT 620 processed at 18 GFLOPS and the GTX 660 was staying north of 45 GFLOPS. No bottlenecks with either card.

Now three GPUs… not sure how BOINC would handle that with a dual core processor, and I’m not going to try to find out either at this point. The GT 620 is slated for the chassis that will house the FX-8370E.

The GTX 660s will go into a 4U chassis with 14 expansion slots so there is room to grow, and, as previously mentioned, I may water cool them to make use of the blocks I still have while also keeping their temperatures lower.

Next step…

So far everything has been working quite well when it comes to the experiments. I’m pleased with the results, pleased that everything is working as expected, and pleased with the performance I’m getting.

The next steps, though, will move this project in the direction of building this into a rack starting with the cabinet. I have the 20U rails, so it’s now a matter of buying the lumber and necessary hardware and building it.

Additional proofs of concept are needed as well. I need to acquire a couple sets of panel mount USB 3.0 cables (mentioned above), test them in place between the graphics cards and the main system, and make sure everything continues to work as expected. I don’t have any reason to think they won’t work, but I’d rather be safe than sorry on that. If all goes well, then I’ll be acquiring chassis, likely starting with the aforementioned 4U chassis for the graphics cards.