For the last couple of years, I’ve been using older hardware to donate compute time to various research projects, with varying degrees of success. Mostly this has been for the Berkeley (BOINC) projects, with occasional time allotted to Folding@Home.

Specifically, these are the GPUs I have for this purpose:

- 2 x PNY GTX 770 4GB OC

- 1 x EVGA GTX 680 SC 2GB

- 1 x Zotac GTX 680 Amp!

- 2 x EVGA GTX 660 SC 2GB

- 1 x XFX “Double-D” R9 290X 4GB

All of the aforementioned cards have water blocks on them as well, allowing them to run at very good temperatures: typically around 40°C for the NVIDIA cards and nearer to 50°C for the AMD card. That is basically perfect for cards that were not designed to be under near-constant load 24/7 and that would otherwise top out in the 70s, if not the 80s, on their cores.

The weakest cards are, obviously, the GTX 660s. So with the latest Pascal generation from NVIDIA, and the performance metrics thereof, I decided it was time to retire the GTX 660s from the cluster.

In their place? A single GTX 1060 3GB Mini from Zotac. This single card is now the shining star in the cluster, outperforming all of the others. While it has fewer CUDA cores than the GTX 680, they are clocked much higher (able to boost to around 1.7GHz, compared to just shy of 1.1GHz for the GTX 680), allowing for much better performance in a much smaller package.
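To put rough numbers on the "fewer cores, higher clocks" trade-off, here is a back-of-the-envelope sketch. It assumes the usual peak-FP32 approximation of 2 operations per CUDA core per clock, the published core counts (1152 for the GTX 1060 3GB, 1536 for the GTX 680), and the approximate boost clocks quoted above. It ignores architectural differences between Kepler and Pascal entirely, so treat it as an illustration rather than a benchmark.

```python
# Rough peak-FP32 estimate: 2 ops (one fused multiply-add) per core per clock.
# Core counts are the published specs; clocks are the approximate boost
# figures from the text, not measured values.

def peak_gflops(cuda_cores, boost_ghz):
    """Theoretical peak single-precision throughput in GFLOPS."""
    return 2 * cuda_cores * boost_ghz

gtx_1060_3gb = peak_gflops(cuda_cores=1152, boost_ghz=1.7)
gtx_680 = peak_gflops(cuda_cores=1536, boost_ghz=1.1)

print(f"GTX 1060 3GB: {gtx_1060_3gb:.0f} GFLOPS")
print(f"GTX 680:      {gtx_680:.0f} GFLOPS")
print(f"Ratio:        {gtx_1060_3gb / gtx_680:.2f}x")
```

On paper that works out to roughly 3.9 versus 3.4 TFLOPS, so the higher clocks more than make up for the lower core count even before any per-core efficiency gains are considered.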

The short GTX 1060 cards have the major advantage of being able to fit into a smaller form factor, such as a 2U chassis on a riser card, provided you get the right chassis. But the constricted fit had me wondering whether the card would thermal throttle under load, since there was basically only one slot’s worth of room before the mainboard.

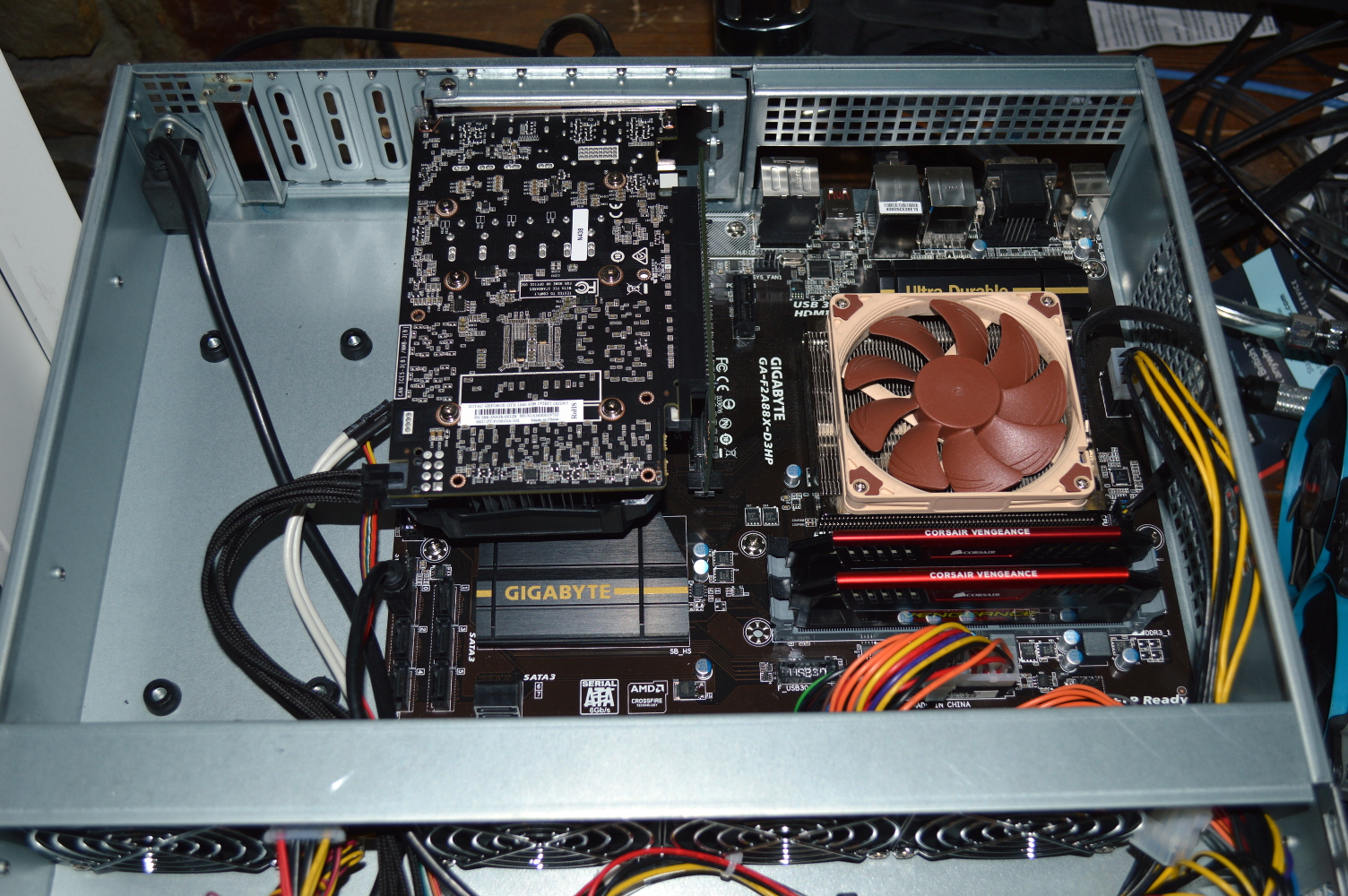
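One way to check for thermal throttling is to sample the card’s temperature and SM clock while it is under load. The sketch below is a minimal example that assumes `nvidia-smi` is on the PATH and uses its `temperature.gpu` and `clocks.sm` query fields. The function names and the 80°C / 1500MHz thresholds are hypothetical values chosen for illustration, not figures from NVIDIA or Zotac.

```python
import subprocess

# nvidia-smi query that prints one "temperature, clock" line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=temperature.gpu,clocks.sm",
    "--format=csv,noheader,nounits",
]

def parse_sample(line):
    """Turn a line such as '62, 1708' into (temp_c, sm_clock_mhz)."""
    temp_c, sm_mhz = (int(field.strip()) for field in line.split(","))
    return temp_c, sm_mhz

def read_sample():
    """Query the first GPU once. Requires an NVIDIA driver to be installed."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_sample(out.splitlines()[0])

def looks_throttled(samples, temp_limit_c=80, min_clock_mhz=1500):
    """Flag any sample that is both hot and clocked low.

    Both thresholds are illustrative assumptions; adjust them for the card.
    """
    return any(t >= temp_limit_c and c < min_clock_mhz for t, c in samples)
```

Calling `read_sample()` every few seconds during a BOINC task and passing the collected list to `looks_throttled` gives a quick yes/no answer. A card that stays cool and holds its boost clock is not being constrained by the chassis.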

Specifications

- CPU: AMD A8-7600

- Cooling: Noctua NH-L9a

- RAM: 8GB DDR3-1866

- Mainboard: Gigabyte F2A88X-D3HP

- Chassis: PlinkUSA IPC-2026

- Power supply: Antec BP350

So let’s get into specs and the philosophy behind the choices.

Processor. Initially I considered an Athlon 64 X2 for this node. The reasoning was simple: the node will be used only for GPU processing, specifically for Berkeley tasks, since those do not use the CPU much. So there’s not really much reason to use a more powerful processor.

Indeed if you’re putting together a mining rig, these older CPUs are well-suited, even with multiple graphics cards, since you don’t need a fast CPU for that purpose. Heck there are Bitcoin mining rigs that consist of multiple USB ASIC miners connected to Raspberry Pi boards.

But, alas, I wanted to run Linux on this and could not get the BOINC Linux client to detect the graphics card with the latest NVIDIA driver (384.59 as of this writing). Even downgrading the driver didn’t help. I have no idea what was going on, so I swapped over to a processor that I knew could run Windows 10: the A8-7600.

On that note, the AM1 boards and processors would work well for this use case, since you don’t need a fast processor for BOINC. For Folding@Home, a faster processor helps, since Folding@Home does rely on the CPU a bit for its GPU tasks.

CPU cooler. The Noctua NH-L9a is a very capable yet quiet CPU cooler. I highly recommend it to anyone building a small form factor system. If you want to use it with the Ryzen chips, you’ll need the separate AM4 mount kit. Under the cooler I use Arctic MX-4, which in my opinion is the best thermal compound on the market currently.

Chassis. Initially I considered the IPC-G252S, also from PlinkUSA, mainly because I’ve used it before. But the configuration of that chassis doesn’t allow for graphics cards with power connectors, or at least not with power connectors coming out the top. If this were a GTX 1050 or GTX 1050 Ti, it’d be a perfect fit. But the top of the GTX 1060 would be too near the wall to access the power connector. Even low-profile 90-degree PCI-Express connectors won’t fit.

Hence the IPC-2026, which has a lot more room for the power connector because it puts the power supply in the front. Plus, the wall of 80mm fans provides all the ventilation the card will need, as it’ll have almost two of them blowing directly onto it, once I swapped the stock fans out for much quieter ones. And at just 70 USD plus shipping, it was the perfect choice.

Then the question was the power supply.

The chassis can fit a full-size ATX power supply up to 160mm. And it has a ventilation grill on the lid so you can use a bottom-fanned PSU. But given this could end up in a rack with that grill covered up, I opted for the Antec BP350, which is a 140mm ATX power supply with a single 80mm fan at the rear to pull air through the opposite side. No other ventilation is on the PSU’s cover.

Since the PSU has only a 4-pin CPU connector, I needed to buy a P4+LP4 to 8-pin CPU harness. And for good measure, I added an 8-pin CPU extension cable to the mix.

350W is overkill for this, as I don’t expect this node to draw more than 150W to 200W continuously. The PSU is not 80 Plus rated, but at that low a draw, there’s no need for it to be.
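As a sanity check on that estimate, here is a quick power budget sketch. Only the GTX 1060’s 120W figure is a published rating (its TDP). The CPU, mainboard, and fan numbers are my own rough guesses for a node whose CPU sits mostly idle during GPU tasks, so swap in measured values if you have them.

```python
# Rough power budget for the node. Every figure except the GPU's rated
# TDP is an assumption for illustration, not a measurement.
PSU_RATING_W = 350

ASSUMED_DRAWS_W = {
    "GTX 1060 3GB (rated TDP)": 120,
    "A8-7600, lightly loaded (guess)": 30,
    "mainboard, RAM, storage (guess)": 25,
    "chassis fans (guess)": 10,
}

def power_budget(draws_w, psu_rating_w):
    """Sum the component draws and compare them against the PSU rating."""
    total = sum(draws_w.values())
    return {
        "total_w": total,
        "headroom_w": psu_rating_w - total,
        "load_fraction": total / psu_rating_w,
    }

budget = power_budget(ASSUMED_DRAWS_W, PSU_RATING_W)
print(f"Estimated draw: {budget['total_w']}W")
print(f"Headroom:       {budget['headroom_w']}W")
print(f"PSU load:       {budget['load_fraction']:.0%}")
```

With these guesses the node lands at 185W, a little over half of what the PSU is rated for, which is consistent with the 150W to 200W range above.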

A future node I’m now considering will actually be in another IPC-2026 chassis, but using the R9 290X that came from Absinthe. And keeping it water cooled. So look forward to that coming down the pike. For now I’ve got a few other things on the agenda. I’ll also be pulling off the Zotac’s stock cooler and replacing it with a universal VGA block and finding some way to cool the VRMs and RAM.

* * * * *