- Nasira – A FreeNAS build

- Nasira – Part II

- Nasira – Part III

- Nasira with a little SAS

- Moving Nasira to a different chassis

- When a drive dies

- Adding an SLOG… or not

- Coming full circle

- Throwing a short pass

- More upgrades but… no more NVMe

- Check your mainboard battery

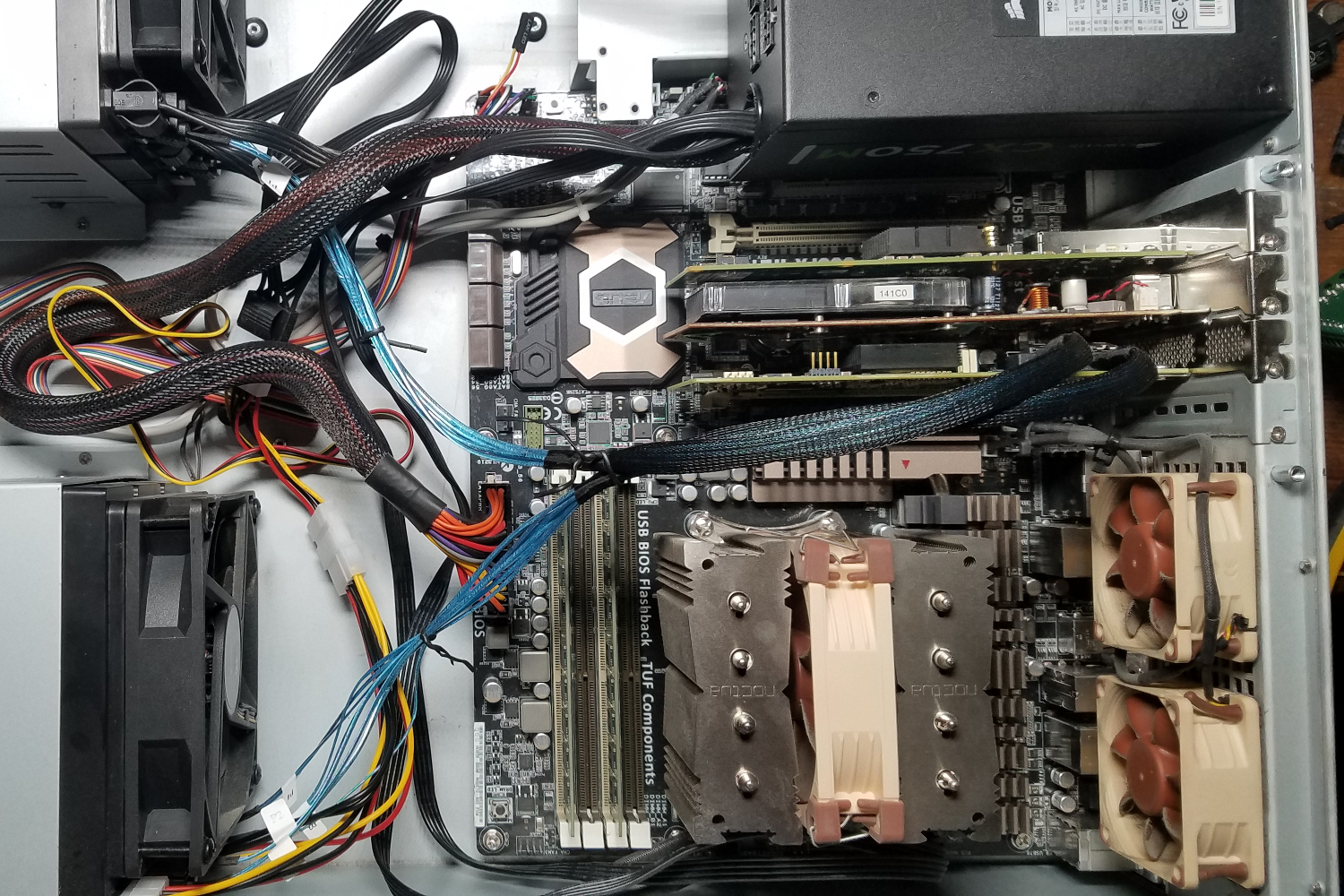

So I’ve had Nasira for a little over two years now. Here are the current specifications:

- CPU: AMD FX-8350 with Noctua NH-D9L

- Mainboard: ASUS Sabertooth 990FX R2.0

- GPU: Zotac GT620

- RAM: 16GB ECC DDR3

- NIC: Chelsio S320E dual-SFP+ 10GbE

- Power: Corsair CX750M

- HDDs: 4x4TB, 2x6TB in three pairs

- Chassis: PlinkUSA IPC-G3350

- Hot swap bays: Rosewill RSV-SATA-Cage34

And it’s running FreeNAS. Which is currently booting off a 16GB SanDisk Fit USB2.0 drive. Despite some articles I’ve read saying there isn’t a problem doing this, along with the FreeNAS installation saying it’s preferred, I can’t really recommend it. I’ve had intermittent complete lockups as a result. And I mean hard lockups where I have to pull the USB drive and reinsert it for the system to boot from it again.

Given SanDisk’s reputation regarding storage, this isn’t a case of using a bad USB drive or one from a less reputable brand. And I used that specific USB drive on recommendation of someone else.

So I want to have the NAS boot from an SSD.

Now I could just put an SSD in one of the free trays in the hot-swap bay and call it a day – I have 8 bays, but only 6 HDDs. But I’m also filling out the last two trays. So connecting the HDDs to a controller card would be better, opening up the onboard connections.

And I have a controller card. But not one you’d want to use with a NAS. Plus it wouldn’t reduce the cable bulk either. So what did I go for? IBM M1015, of course, which I found on eBay for a little under 60 USD. Since that’s what everyone seems to use unless they’re using an LSI-branded card. But there’s a… complication.

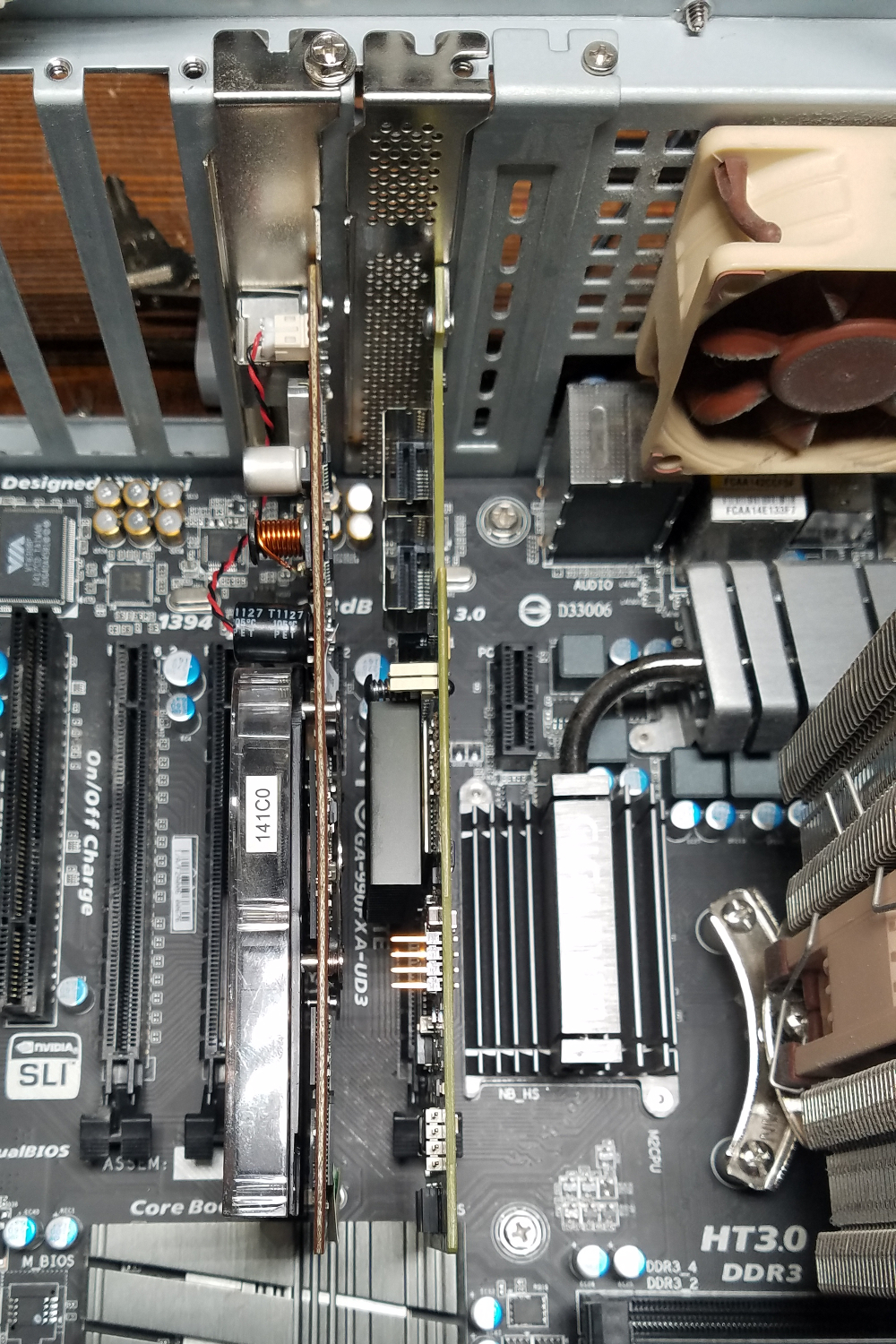

The mainboard has 6 expansion slots, three of which are hidden under the power supply – it’s a 3U chassis, not a 4U. The exposed slots are two full-length slots (one x16, one x4), and a x1 slot. The full-length slots are currently occupied by the 10GbE card and graphics card (Zotac GT620).

The SAS card is x8. So the only way I can fit both the SAS controller and the 10GbE card is to use a x1 graphics card. Yes, those exist. YouTube channel RandomGaminginHD recently covered one called the Asus Neon.

And I considered the Zotac GT710 x1 card to get something recent and passively cooled, but didn’t really want to spend $40 on a video card for a server (eBay prices used and new were similar to Amazon’s price new).

Searching eBay a little more, I found an ATI FireMV 2250 with a x1 interface sporting a mind-blowing 256MB DDR2. Released in 2007, it was never made for gaming. It was advertised as a 2D card, making it a good fit for the NAS and other servers where you’re using 10GbE cards or SAS controllers in the higher-lane slots.

The card has a DMS-59 connector, not a standard DVI or VGA connector. But a DMS-59 to dual-VGA splitter was only 8 USD on Amazon, so only a minor setback.

I set up the hardware in a test system to make sure the cards work. And also to flash the SAS card from a RAID card to an HBA card. Which seemed way more involved than it needed to be. In large part because I needed to create a UEFI bootable device. These steps got it running on a Gigabyte 990FXA-UD3 mainboard: How-to: Flash LSI 9211-8i using EFI shell. Only change to the steps: the board would not boot the UEFI shell unless it was named “bootx64.efi” on the drive, instead of “shellx64.efi” as the instructions state. And at the end, you also need to reassign the SAS address to the card:

sas2flash.efi -o -sasadd [address from green sticker (no spaces)]

This may or may not be absolutely necessary, but it keeps you from getting the “SAS Address not programmed on controller” message during boot.

So along with the mini-SAS to SATA splitters and 32GB SSD, I took Nasira offline for maintenance (oh God, the dust!!!) and the hardware swaps. Didn’t bother trying to get ALL of the dust out of the system since next year I’ll likely be moving it all into a 4U chassis. More on that later.
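Going back to the SAS address step for a moment: the address has to be typed without spaces, and a SAS address is 64 bits, so 16 hex digits. Here is a minimal sketch of a helper that cleans up whatever is printed on the sticker and builds the command line. The function name and the sample address are made up for illustration, and I'm assuming the sticker separates groups with spaces or dashes.

```python
import re


def normalize_sas_address(sticker_text: str) -> str:
    """Strip spaces, dashes, and colons, then return 16 uppercase hex digits."""
    cleaned = re.sub(r"[\s:\-]", "", sticker_text).upper()
    if not re.fullmatch(r"[0-9A-F]{16}", cleaned):
        raise ValueError(f"expected 16 hex digits, got {cleaned!r}")
    return cleaned


# Made-up address for illustration only; use the one from your own card.
addr = normalize_sas_address("500605B0-0123-4567")
print(f"sas2flash.efi -o -sasadd {addr}")
```

The validation is the useful part: a mistyped or truncated address fails here instead of at the EFI shell.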

Things are a little crowded around the available expansion slots. But with an 80mm fan blowing on them, they’re not going to have too much of an issue keeping cool. LSI cards are known to run hot, and this one is no different. I attempted to remove the heatsink, but I think it’s attached with thermal adhesive, not thermal compound.

I do intend to replace the 80mm fan with a higher-performance fan when I get the chance. Or figure out how to stand up a 120mm fan in its place to have the airflow without the noise. Which is the better option, now that I think about it.

And as predicted, the cables are much, much cleaner compared to before. A hell of a lot cleaner compared to having a bundle of eight (8) SATA cables going between SATA ports. Sure there’s still the nest between the drive bays, but at least it isn’t complicated by SATA cables.

I also took this as a chance to replace the 10GbE card as well. From a Chelsio S320 to a Mellanox ConnectX-2. The Chelsio is a PCI-E 1.0 card, while the Mellanox is a 2.0 card. So this allowed me to move the 10GbE card to the x4 slot and put the SAS card in the x16 slot nearest the CPU. FreeNAS 9.x did not support Mellanox chipsets, whereas FreeNAS 11 does.
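Why the PCI-E generation matters for the x4 slot comes down to quick arithmetic. This is a rough sketch using the standard per-lane figures (250 MB/s for PCI-E 1.x and 500 MB/s for 2.0, per direction, after encoding overhead). It assumes the card negotiates down to four lanes in that slot and ignores protocol overhead, so treat the numbers as ceilings.

```python
# Usable bandwidth per lane, per direction, after 8b/10b encoding overhead.
# PCI-E 1.x: 2.5 GT/s -> 250 MB/s; PCI-E 2.0: 5 GT/s -> 500 MB/s.
MB_PER_LANE = {"1.x": 250, "2.0": 500}

TEN_GBE = 10.0  # line rate of a single 10GbE port, in Gb/s


def link_gbps(gen: str, lanes: int) -> float:
    """Approximate one-direction link bandwidth in gigabits per second."""
    return MB_PER_LANE[gen] * lanes * 8 / 1000


for gen in ("1.x", "2.0"):
    bw = link_gbps(gen, lanes=4)
    verdict = "enough" if bw >= TEN_GBE else "not enough"
    print(f"PCI-E {gen} x4: {bw:.0f} Gb/s -> {verdict} for one 10GbE port")
```

At four lanes, a 1.x link tops out at 8 Gb/s, short of 10GbE line rate, while a 2.0 link has 16 Gb/s to work with.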

I reinstalled FreeNAS to the boot SSD – 11.1-U5 is the latest as of when I write this. My previous installation was an 11.1 in-place upgrade over a 9.x installation. While it worked well and I never experienced any issues, I’ve typically frowned upon doing in-place upgrades on operating systems. I always recommend against it with Windows. It can work well with some Linux distros – one of the reasons I’ve gravitated toward Fedora – and be problematic with others.

Importing the exported FreeNAS configuration, though, did not work. Likely because it was applying a configuration for the now non-existent Chelsio card. But I was able to look at the exported SQLite database to figure out what I had and reproduce it – shares and users were my only concern. Thankfully the database layout is relatively straightforward.
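If you need to do the same, the exported configuration is an ordinary SQLite file, so Python's built-in sqlite3 module can read it. This is a minimal sketch, not the exact steps I followed. The two helper functions are my own, and the "account_" and "sharing_" table prefixes are an assumption from memory, so check the table list your own export prints first.

```python
import sqlite3
import sys
from contextlib import closing


def list_tables(db_path: str) -> list[str]:
    """Return the names of all tables in the SQLite file, sorted."""
    with closing(sqlite3.connect(db_path)) as conn:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    return [name for (name,) in rows]


def dump_table(db_path: str, table: str) -> list[dict]:
    """Return every row of one table as column-name -> value dicts."""
    if table not in list_tables(db_path):
        raise ValueError(f"no such table: {table}")
    with closing(sqlite3.connect(db_path)) as conn:
        conn.row_factory = sqlite3.Row
        # The name was checked against the schema above before being quoted.
        rows = conn.execute(f'SELECT * FROM "{table}"').fetchall()
    return [dict(row) for row in rows]


if __name__ == "__main__" and len(sys.argv) > 1:
    db = sys.argv[1]  # path to the exported configuration file
    for name in list_tables(db):
        # Assumed prefixes for user and share tables; adjust to what you see.
        if name.startswith(("account_", "sharing_")):
            print(name, dump_table(db, name))
```

Run it with the path to the exported file as the only argument. Work on a copy of the export rather than the original.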

Added storage

Now here is where things got a little tricky.

I mentioned previously that I had six (6) HDDs in three pairs: two 4TB pairs and one 6TB pair. And I said the 6TB pair was a WD Red at 5400RPM and a Seagate IronWolf at 7200RPM. Had I realized the RPM difference when I ordered them, I likely would’ve gone with an HGST NAS drive instead. So now is the opportunity to correct that deficiency.

The other Seagate 4TB NAS drives are 5900RPM drives compared to the 5400RPM on the WD Reds. But that isn’t nearly as much a difference as 7200RPM to 5400RPM – under 10% higher compared to 1/3rd higher, respectively.

Replacing the disk was straightforward – didn’t capture any screenshots since this happened after 2am.

- Take the Seagate IronWolf 6TB drive offline

- Prep the new WD Red 6TB drive into a sled

- Pull the Seagate drive from the hot swap bay and set it aside

- Slide the WD Red drive into the same slot the Seagate drive previously occupied

- “Replace” the Seagate drive with the WD Red drive in FreeNAS.

The resilver took a little over 5 hours. I’ve said before, both herein and elsewhere, that resilvering times are the reason to use mirrored pairs over RAID-Zx. And it’s the leading expert opinion from what I could find as well. And always remember that even this is not a replacement for a good backup plan.

The next morning, I connected the Seagate drive to my computer – Mira – using a SATA to USB3.0 adapter so I could wipe the partition table. And I prepped the second Seagate IronWolf drive into a sled. Slid both drives into the hot swap bays. And expanded the volume with a new 6TB mirrored pair.

40TB of HDDs split down into 20TB from mirrored pairs. Effective space is about 18.1TiB with overhead – 20TB = 20 Trillion bytes = 18.19TiB – with about 8.7TiB free space available.
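The TB-to-TiB arithmetic is easy to check. A quick sketch, where the function name is mine and each mirrored pair is counted as one drive's worth of usable space, before any ZFS overhead:

```python
TB = 10**12   # the decimal terabyte drive makers use
TiB = 2**40   # the binary tebibyte


def mirrored_capacity_bytes(pair_sizes_tb):
    """Usable bytes for a pool of two-way mirrors: one drive's worth per pair."""
    return sum(pair_sizes_tb) * TB


pairs_tb = [4, 4, 6, 6]        # two 4TB pairs and two 6TB pairs
raw_tb = 2 * sum(pairs_tb)     # every pair holds two drives
usable = mirrored_capacity_bytes(pairs_tb)

print(f"raw: {raw_tb} TB, usable: {usable / TB:.0f} TB = {usable / TiB:.2f} TiB")
# prints: raw: 40 TB, usable: 20 TB = 18.19 TiB
```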

Future plans

8TiB is a lot of space to fill. It took two years to get to this point with how my wife and I do things. Sure the extra available space means we’ll be tempted to fill it – a “windfall” of storage space, so to speak. And with how I store movies, television episodes, and music on the NAS, that space could fill faster than expected.

Plus it is often advised to not let the pool’s available space fill up to less than 20% free where possible to avoid performance degradation. Prior to the expansion, I was approaching this – under 4TiB free out of ~13TiB space. So I expanded the storage with another pair of 6TB drives to back away from that 20%. That and to pair the 7200RPM with another 7200RPM drive.

So at the new capacity, I have basically until I get down to about 3.6TiB free (20% of 18.1TiB) before I start experiencing any ZFS performance degradation. The equivalent of nearly doubling my current movie library.
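Taking the 20% guideline literally, the headroom works out like this. A small sketch, with function names of my own and the 20% figure as a rule of thumb rather than a hard limit:

```python
def free_space_floor(pool_tib: float, min_free_fraction: float = 0.20) -> float:
    """Free space (TiB) to keep in reserve under the rule of thumb."""
    return pool_tib * min_free_fraction


def headroom(pool_tib: float, free_tib: float, min_free_fraction: float = 0.20) -> float:
    """TiB that can still be written before free space drops below the floor."""
    return free_tib - free_space_floor(pool_tib, min_free_fraction)


pool_tib, free_tib = 18.1, 8.7  # figures from the expanded pool above
print(f"keep free: {free_space_floor(pool_tib):.1f} TiB")
print(f"room to grow: {headroom(pool_tib, free_tib):.1f} TiB")
```

With 18.1TiB of space and 8.7TiB free, that leaves roughly 5TiB to fill before crossing the line.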

So let’s say, hypothetically, I needed to add more storage space. What would be the best way to do that now that all eight of the drive bays are filled? There are a few ways to go about it.

Option #1 is to start replacing the 4TB drives. Buying a 6TB or 8TB drive pair, and doing a replace and resilver on the first 4TB pair. Except that would add only 2TB or 4TB of free space. Not exactly a significant gain given the total capacity of the current pool. While this being an option is a benefit of using mirrored pairs over RAID-Zx, it isn’t exactly a practical benefit.

Especially since the 4TB drives are likely to last a very long time before I have to worry about any failures – whether unrecoverable read errors or the drive just flat out giving up the ghost. The drives are under such low usage that I probably could’ve used WD Blues or similar desktop drives in the NAS and been fine (and saved a bit of money). The only reason to pull the 4TB drives entirely would be repurposing them.

So option #2. Mileage may vary on this one. The 990FX mainboard in the NAS has an eSATA port on the rear – disabled currently. If I need to buy another pair of HDDs – e.g. 8TB – then I could connect an eSATA external enclosure to the rear. Definitely not something I’d want to leave long-term, though.

So what’s the better long-term option?

I mentioned previously about moving Nasira into a 4U chassis sometime next year. Possibly before the end of the year depending on what my needs become. Three of the expansion slots on the mainboard are blocked: a second x4 and x16 slot, and a PCI slot. The 990FX chipset provides 42 PCI-E lanes.

Getting more storage on the same system requires a second SAS card. Getting a second SAS card and keeping the 10GbE requires exposing those other slots. Exposing those slots requires moving everything into a 4U chassis. Along with needing an open slot for an SFF-8088 to SFF-8087 converter board. Then use a rack SAS expander to hold all the new drives and any additional ones that get added along the way.

Or buy a large enough SAS expander to hold the drives I currently have plus room to expand, then move the base system into a 2U chassis with a low profile cooler and low-profile brackets on the cards.

I’ll figure out what to do if the time comes.
