Last updated: May 27, 2019

If you want to bring 10GbE into your home network on a low budget, you really don’t need all that much.

First question to ask: how many computers are you connecting together? If you want to connect only two systems, you need just two network interface cards and a cable between them. For three or more, you’ll need a switch.
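The counting above is simple enough to sketch in a few lines of Python. This is a minimal illustration, not a planning tool: the function name `plan_topology` is hypothetical, and it assumes one single-port NIC per machine and, in the switched case, one cable from each machine to the switch.

```python
def plan_topology(num_systems: int) -> dict[str, int]:
    """Rough part counts for a 10GbE network of num_systems machines.

    Hypothetical helper; assumes one single-port NIC per machine.
    """
    if num_systems < 2:
        raise ValueError("need at least two systems to build a network")
    if num_systems == 2:
        # Point-to-point: cable the two NICs directly together, no switch.
        return {"nics": 2, "cables": 1, "switch_ports": 0}
    # Three or more machines: each gets one NIC and one cable to the switch.
    return {"nics": num_systems, "cables": num_systems, "switch_ports": num_systems}


print(plan_topology(2))  # {'nics': 2, 'cables': 1, 'switch_ports': 0}
print(plan_topology(4))  # {'nics': 4, 'cables': 4, 'switch_ports': 4}
```

Dual-port cards or other tricks would change these numbers; the sketch only captures the basic "two machines, or a switch" rule.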

Network interface cards (NICs)

eBay is where you’ll find NICs very cheaply. Most of the surplus cards available are Mellanox, but you need to be a little careful about which cards you buy. Some part numbers may give you trouble: they’re rebranded cards, even though they use the Mellanox chipset. Stick to Mellanox part numbers where you can.

For single-port Mellanox 10GbE cards, look for part number MNPA19-XTR (a ConnectX-2 card). I’ve also had good luck with part number 81Y1541, which I believe is an IBM rebrand.

Cables and transceivers

You basically have just two options here: direct-attached copper (DAC) and optical fiber. While most videos and articles on this push you toward direct-attached copper (and some eBay listings for NICs include a DAC cable), consider optical fiber instead. It’s better in many regards, and if you want to connect systems that are more than 10m apart (by cable distance, not straight-line distance), it’s pretty much your only option.

You’ll need 10GBase-SR transceivers, two for each cable. You can find these on eBay as well, and some SFP+ cards listed may come with one already. These transceivers use LC-to-LC duplex multimode optical fiber (my setup uses OM4). I’ll provide parts options below.
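To see how the media choice affects what you buy, here is a small Python sketch. It assumes the roughly 10m direct-attached copper limit described above, counts two 10GBase-SR transceivers per fiber cable, and counts none for a DAC cable (its SFP+ ends are built in). The `Link` class, the function names, and the example cable lengths are all hypothetical.

```python
from dataclasses import dataclass

# Rule of thumb from this article: past roughly 10 m of cable, fiber is the option.
DAC_MAX_LENGTH_M = 10.0


@dataclass
class Link:
    name: str
    cable_length_m: float  # length of the cable run, not straight-line distance


def can_use_dac(link: Link) -> bool:
    """True if the run is short enough for direct-attached copper."""
    return link.cable_length_m <= DAC_MAX_LENGTH_M


def sr_transceivers_needed(links: list[Link], prefer_dac: bool = False) -> int:
    """Count 10GBase-SR transceivers: two per fiber link, none per DAC link."""
    total = 0
    for link in links:
        if prefer_dac and can_use_dac(link):
            continue  # a DAC cable has its SFP+ ends built in
        total += 2  # one transceiver at each end of the fiber
    return total


# Hypothetical runs: a desktop 30 m from the switch and a NAS 1 m away.
links = [Link("desktop", 30), Link("nas", 1)]
print(sr_transceivers_needed(links))                   # 4 (all fiber)
print(sr_transceivers_needed(links, prefer_dac=True))  # 2 (DAC for the NAS)
```

Defaulting `prefer_dac` to `False` mirrors the recommendation here to go with fiber even where copper would reach.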

Switch

A very inexpensive (about 150 to 200 USD, depending on seller), quiet option for small setups is the MikroTik CRS305-1G-4S+IN, which has 4 SFP+ 10GbE ports and a GbE RJ45 uplink. It also supports GbE SFP modules for combining GbE and 10GbE.

If you don’t mind spending a little more money, MikroTik’s CRS317-1G-16S+RM is a 16-port 10GbE SFP+ switch that also accepts 1GbE SFP modules. That makes it a great option for combining 10GbE and GbE connections in one backbone using RJ45 SFP GbE modules.

An in-between option is the MikroTik CRS309-1G-8S+IN, an 8-port SFP+ switch with a GbE RJ45 port that can serve as an uplink.
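Choosing among these three MikroTik SFP+ switches mostly comes down to port count. The Python sketch below picks the smallest one with enough SFP+ cages; the port counts are the ones given above, while the function name and the `spare_ports` headroom parameter are hypothetical. It assumes GbE SFP modules are counted in `ports_needed` (they occupy SFP+ cages) and ignores each model’s separate GbE RJ45 port and price.

```python
# SFP+ port counts of the MikroTik switches mentioned above, smallest first.
SFP_PLUS_SWITCHES = [
    ("CRS305-1G-4S+IN", 4),
    ("CRS309-1G-8S+IN", 8),
    ("CRS317-1G-16S+RM", 16),
]


def smallest_switch(ports_needed: int, spare_ports: int = 0) -> str:
    """Pick the smallest listed switch with enough SFP+ cages.

    Count GbE SFP modules in ports_needed too: they occupy SFP+ cages.
    The separate GbE RJ45 port on each model is ignored here.
    """
    required = ports_needed + spare_ports
    for model, ports in SFP_PLUS_SWITCHES:
        if ports >= required:
            return model
    raise ValueError(f"no listed switch has {required} SFP+ ports")


print(smallest_switch(4))                 # CRS305-1G-4S+IN
print(smallest_switch(4, spare_ports=2))  # CRS309-1G-8S+IN
```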

I previously used a Quanta LB6M, which you can find for as little as 250 USD depending on the seller. Its downsides: it doesn’t make combining GbE and 10GbE in one backbone easy, and its stock fans are LOUD. Replacing them with quieter fans means the switch will run much hotter than normal due to reduced airflow.

In January 2019 I switched over to a MikroTik CRS317.

Now if you insist on going RJ45 for your 10GbE network, MikroTik has recently introduced the CRS312-4C+8XG-RM, a 10GbE switch with 8 RJ45 ports plus 4 combo (RJ45 or SFP+) ports that retails for less than Netgear’s least-expensive 8-port option. Note, however, that RJ45 10GbE cards are currently still more expensive than SFP+ cards.

SFP vs SFP+

When purchasing switches and modules for your network, you need to be mindful of the difference between SFP and SFP+.

SFP is for Gigabit Ethernet connections only. This means if you buy a switch with SFP cages on it, those SFP cages will not deliver faster than Gigabit speeds.

SFP+ is required for 10 Gigabit Ethernet. This means for 10GbE, you need SFP+ cages on both the switch and the NICs, plus SFP+ transceivers (or SFP+ direct-attached copper cables).

So if you buy a switch with mostly SFP cages on it, such as the MikroTik CRS328, expecting to build a 10GbE network, you’re going to be very disappointed.
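The rule boils down to this: a link runs no faster than its slowest part. Here is a simplified Python sketch of that, assuming each component (NIC cage, transceiver, switch cage) can be labeled just "SFP" or "SFP+". The names are hypothetical, and it ignores real-world compatibility quirks; for instance, an SFP+ module may not work in an SFP cage at all rather than dropping to Gigabit.

```python
# Nominal line rate, in Gb/s, for each kind of cage or module.
LINE_RATE_GBPS = {
    "SFP": 1,    # Gigabit only
    "SFP+": 10,  # 10 Gigabit
}


def link_speed_gbps(*components: str) -> int:
    """A link runs no faster than its slowest component."""
    if not components:
        raise ValueError("a link needs at least one component")
    return min(LINE_RATE_GBPS[c] for c in components)


# NIC cage, transceiver, switch cage:
print(link_speed_gbps("SFP+", "SFP+", "SFP+"))  # 10
print(link_speed_gbps("SFP+", "SFP+", "SFP"))   # 1: the switch's SFP cage caps it
```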

My setup

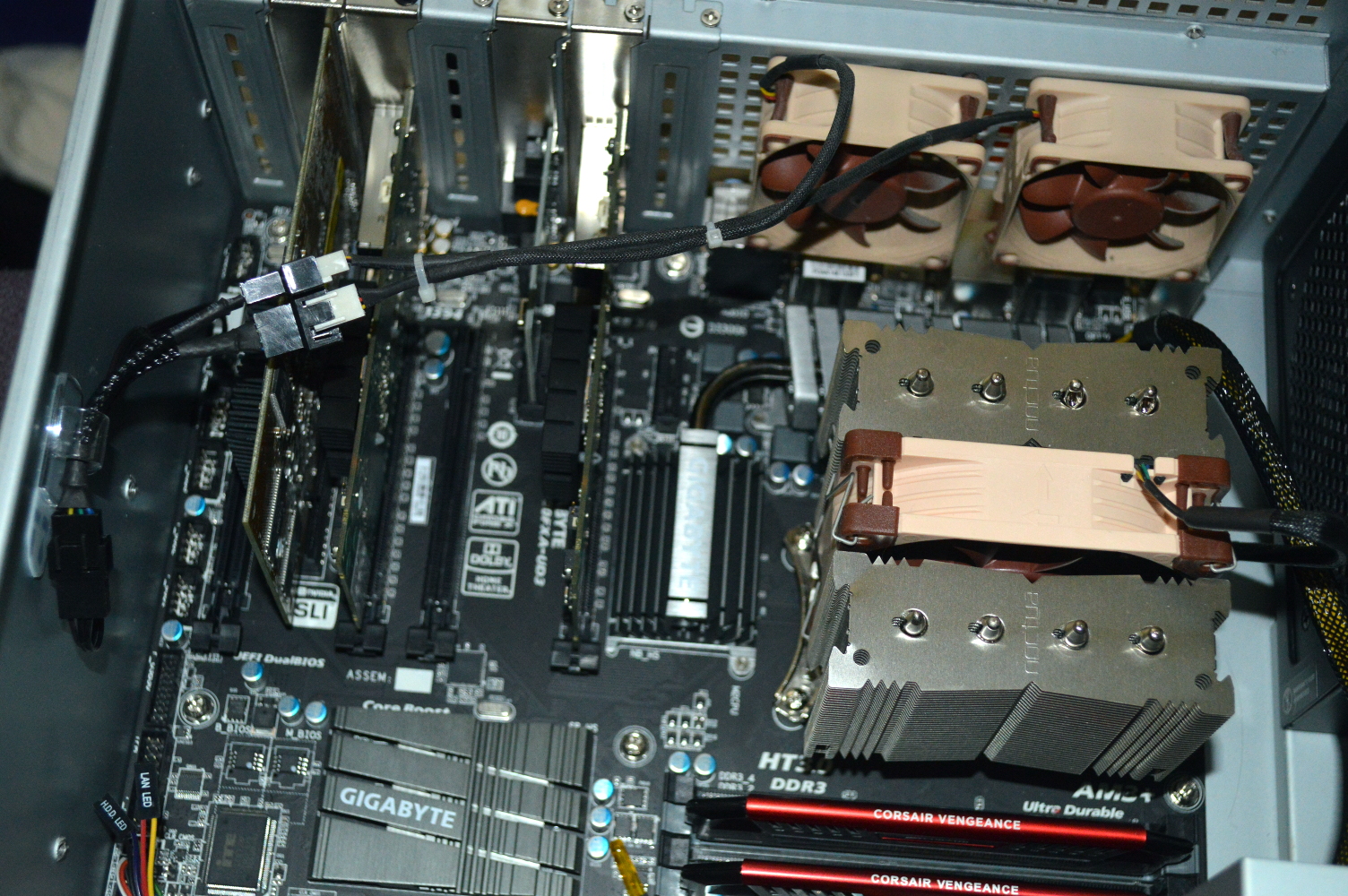

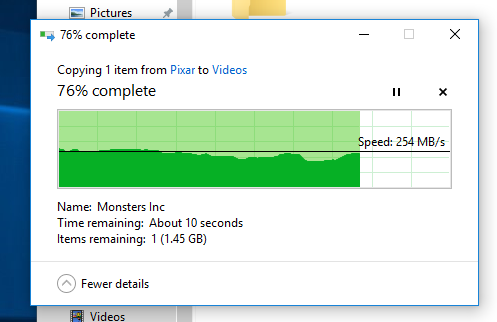

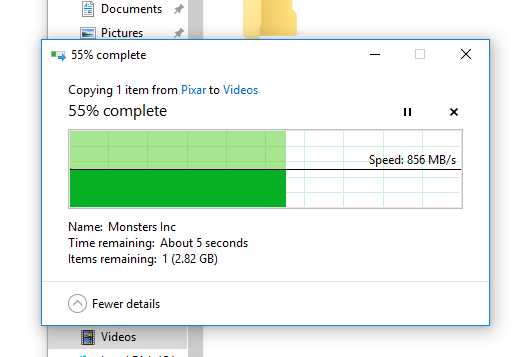

I have four systems connected to a 10GbE network: Absinthe, Mira, Nasira, and my dual-Opteron virtualization server.

- NICs: Mellanox ConnectX-2 MNPA19-XTR

- Switch: MikroTik CRS317

- Transceivers/cables: FiberStore 10GBase-SR (Generic), LC-to-LC OM4 optical fiber

I use 30m cables to connect Absinthe and Mira to the switch. Nasira and the virtualization server use only 1m cables.

Purchase options

- Mellanox MNPA19-XTR: eBay, Amazon, Server Supply

- Quanta LB6M: eBay, Amazon, Newegg

- MikroTik CRS305: eBay, Amazon, EuroDK

- MikroTik CRS309: eBay, Amazon, EuroDK

- MikroTik CRS317: eBay, Amazon, EuroDK

- MikroTik CRS312 (RJ45 switch): eBay, Amazon, EuroDK

- 10GBase-SR transceivers: eBay, FiberStore, Amazon

- LC-to-LC optical fiber: FiberStore, Monoprice

If you have any questions about parts or 10GbE in general, leave a comment below.
