When one person defaults on their debt, it isn’t the end of the financial world. But as we saw in 2008, when a lot of people default on their debt, it can bring down entire industries. When a lot of people defaulted on their mortgages, it created a crisis in the housing market from which we still haven’t recovered, and it has led some economists to actually suggest that we need another housing bubble like the one that precipitated the 2008 recession.

So what would happen if a comparable number of people defaulted on their student loans?

Bear in mind that the number of people who defaulted on their mortgages was not a large percentage of all outstanding mortgage notes. But it was large enough. And if many people do what Lee Siegel has done, chaos just might be the result.

Most people do not understand how loans and our monetary system work. If they did, they wouldn’t consider not paying back their debts unless they flat out could not. Repaying your debts is not only a moral duty; it is a legal duty as well, secured by a contract. So let’s get into what Siegel wrote in his New York Times piece, “Why I Defaulted on My Student Loans.”

His story is not uncommon: taking on massive loans to attend a private college before transferring to a less expensive public college closer to home. After college, he was left with massive debt, which he is now being asked to repay.

> I could give up what had become my vocation (in my case, being a writer) and take a job that I didn’t want in order to repay the huge debt I had accumulated in college and graduate school. Or I could take what I had been led to believe was both the morally and legally reprehensible step of defaulting on my student loans, which was the only way I could survive without wasting my life in a job that had nothing to do with my particular usefulness to society.

> I chose life. That is to say, I defaulted on my student loans.

While default technically means only that you are no longer paying what the contract requires, the rest of the article makes clear that Siegel simply stopped paying altogether. So rather than doing what many other writers have done, writing on the side while earning enough to repay their debts, he chose not to repay his debt at all.

One other thing about Siegel is rather intriguing: he’s almost as old as my parents. He’s not some guy in his 20s or early 30s who just decided he’d had enough of his student loans and is refusing to pay them, and his words suggest he never put a dime toward them.

> Having opened a new life to me beyond my modest origins, the education system was now going to call in its chits and prevent me from pursuing that new life, simply because I had the misfortune of coming from modest origins.

And here we start to see the entitlement mentality, which actually dates back to when Siegel was in college. The thinking of that era included the belief that one is entitled to a free post-secondary education. And he is acting on that mentality by evading his student loans. He readily admits to being a deadbeat and acknowledges the consequences his default may be having on his credit. Yet I can’t help but find his attitude disgusting.

> Or maybe, after going back to school, I should have gone into finance, or some other lucrative career. Self-disgust and lifelong unhappiness, destroying a precious young life — all this is a small price to pay for meeting your student loan obligations.

We can’t all get what we want, and when we have obligations to fulfill, sometimes we just need to bite the bullet, acknowledge our standing, and do what is necessary.

In The Simpsons, for example, Homer walks away from his job at the nuclear plant to take a job at a bowling alley. When Marge unexpectedly becomes pregnant with Maggie, Homer at first does what he can to make the bowling alley more profitable so he can get a raise. It doesn’t exactly work, so Homer does what he knows he has to do, even though he doesn’t want to: he goes back to the nuclear plant to see if he can get his job back. And the 20 years following that episode are history.

Yet Siegel chose to default on his debt because repaying it meant taking an employment path he didn’t want. He could easily have been writing on the side while holding some kind of worthwhile job to repay his debts, making at least some kind of living until writing could become his living. After all, that’s what Stephen King did.

Sure, he’s not Stephen King. And neither am I. I highly doubt this blog will lead to a living as a writer, especially since I tend to get a little long-winded when I write (no doubt, herein as well). But the question needs to be asked: what if writing had never panned out for him? What if he had never been able to make it as a writer? Well, for one, I wouldn’t have the subject matter for this article (what a great loss to society that would’ve been). But at the same time, would he have swallowed his pride and found some kind of worthwhile work to pay his debts and support his family?

Or would he have wallowed in the pools of his shattered dreams and lived a mediocre existence trying to figure out why it just didn’t work out while still refusing to pay back his debts because he didn’t get the career he wanted?

I’d guess that you, dear reader, think this is a bit of a stretch. If so, re-read the quote above, and read his entire article if you haven’t yet.

He also rails against the economically privileged despite living in a pretty well-off part of the country. If Wikipedia is accurate, he lives in Montclair, New Jersey, which has a median income higher than the state median and a median home value significantly greater than the state median. Given that, I really hope his wife is the one who handles the household finances.

> When the fateful day comes, and your credit looks like a war zone, don’t be afraid. The reported consequences of having no credit are scare talk, to some extent. The reliably predatory nature of American life guarantees that there will always be somebody to help you, from credit card companies charging stratospheric interest rates to subprime loans for houses and cars. Our economic system ensures that so long as you are willing to sink deeper and deeper into debt, you will keep being enthusiastically invited to play the economic game.

Yes, because where there is demand, there will be supply. Many loans are issued that never should have been, and the loans this guy took out to go to school easily make that list. But to call all of American life “predatory” is absurd.

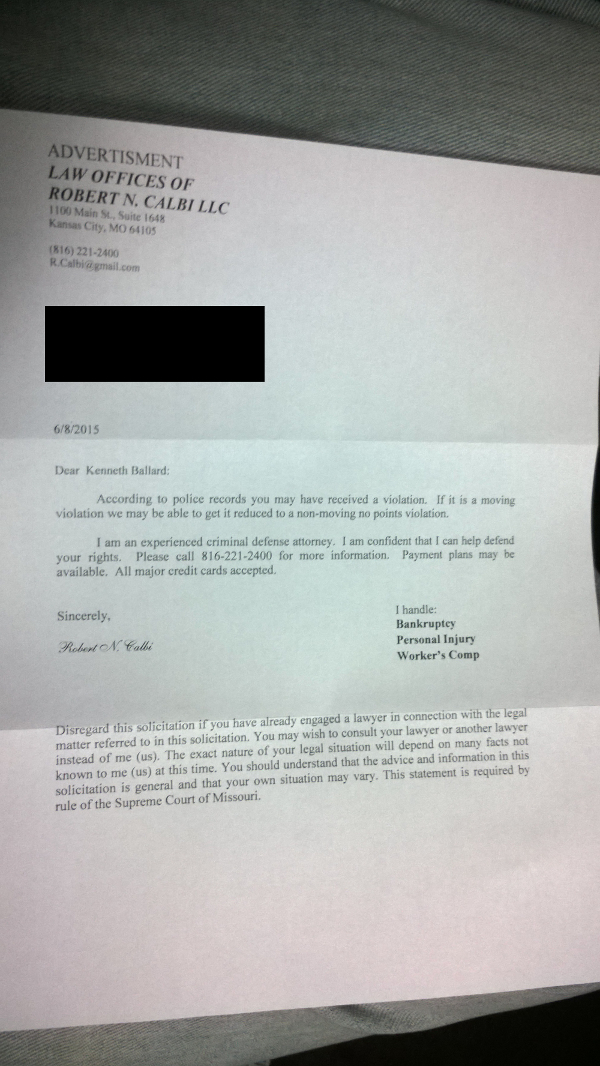

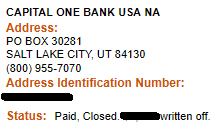

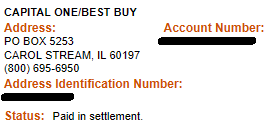

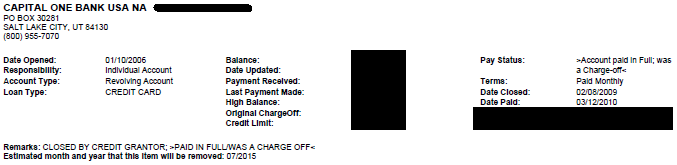

But the “reported consequences” of having no credit or destroyed credit aren’t “scare talk” to any extent. They are very well documented. For instance, I’ve paid off many previous debts, including my student loans, yet I was recently viewed as a deadbeat simply because my defaulted and charged-off debts, all of which are paid off, haven’t fallen off my credit report yet. The consequences for obtaining future credit are also very well documented.

Here’s one consequence of that student loan that Siegel may not have considered: the government may put a claim against his estate after his death. It could take everything bearing his name and put it on the auction block to recover what it believes it is owed, and it can probably produce the promissory notes to back up that claim. If the online court system for Essex County, NJ, weren’t down for maintenance as I write this, I’d check whether there have been any lawsuits against him and any default judgments regarding his defaulted loans.

From here, though, we see that Siegel actually has a broader motive in mind in being so self-righteous about his default.

> I am sharply aware of the strongest objection to my lapse into default. If everyone acted as I did, chaos would result. The entire structure of American higher education would change.

Actually, so long as banks or the government were writing loans to pay for post-secondary education in this country, it wouldn’t change; it would have no incentive to. What mass default would do is starve the loan servicers of the money they need to stay afloat and fund additional loans, and not just student loans, mind you. This, in turn, would cause post-secondary education in the United States to essentially collapse, as the federal and state governments would also be starved of tax dollars from all the people put out of work by the collapse of one segment of the financial sector.

> The government would get out of the loan-making and the loan-enforcement business. Congress might even explore a special, universal education tax that would make higher education affordable.

And the government should get out of the loan business entirely. Its only involvement should be through the courts, enforcing contracts like the one Siegel signed in the first place. Certainly the easy availability of funds has put post-secondary education in the United States in a precarious situation. And many have looked to Europe for salvation. Perhaps we can mimic Europe and provide a free post-secondary education to all students? The same Europe that is currently not far from eating itself alive from the inflation it is experiencing as a result of the debt crises in EU member nations?

What we really need to do is move away from the “a 4-year degree is absolutely necessary” mindset and ask whether post-secondary education is absolutely necessary, as I have done here on this blog and as Mike Rowe does through his organization.

> Instead of guaranteeing loans, the government would have to guarantee a college education. There are a lot of people who could learn to live with that, too.

In reality, the government shouldn’t be guaranteeing either. Again, this shows that Siegel is speaking to a broader agenda in explaining why he defaulted on his student loans. He’s taking his own personal protest and trying to turn it into a national pattern, which will not be good for the economy or the financial sector. Regardless of what you think about the financial sector and the banks, if they sink, so does our entire nation, and it won’t be pretty.

And then there’s the message that many are starting to believe, one espoused by plenty of people and implied in Siegel’s article: I don’t have to repay my student loans if I don’t get the career I want. That is a dangerous message indeed, because it places the burden on lenders and colleges not only to guarantee an education but also to guarantee students a future career.

And, again, many students already believe that is what should be happening.
