Your ISP oversells your data rate.

I’ll just say that up front. I’m privileged to have Google Fiber, which is a 1 gigabit per second, full-duplex Internet connection. However, if I run a speed test, I won’t see that full 1 gigabit. During off-peak hours, my speed will be north of 900 Mb/s both ways, but typically it’s a bit lower than that.

The speed you actually get depends on many factors. Again, bear in mind that your ISP oversells your bandwidth plan, and I’ll demonstrate not only why that happens, but why it’s unavoidable, and why it typically was never a problem.

* * * * *

Okay, let’s start with why this happens. First, let’s talk about network topologies, starting with a star network.

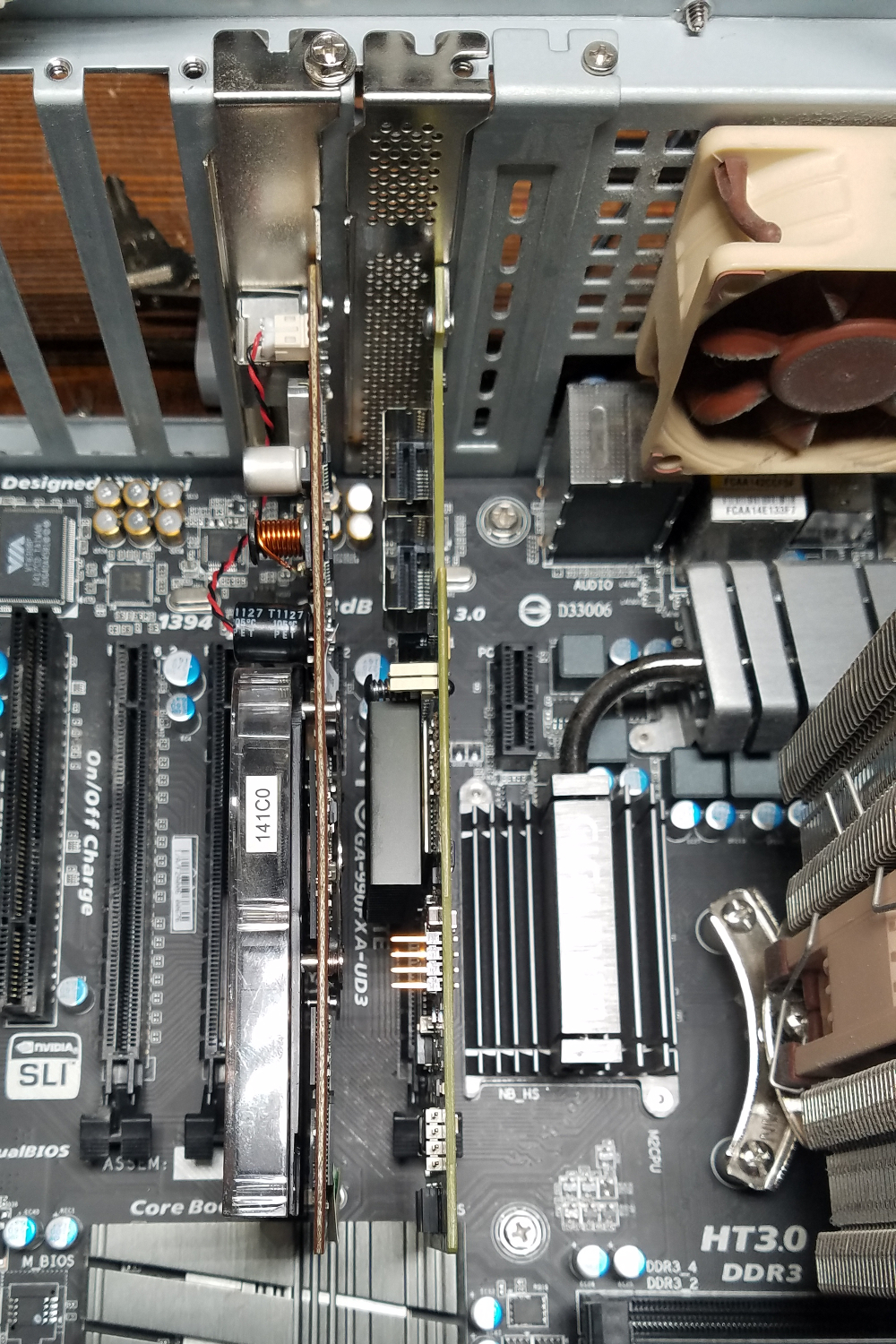

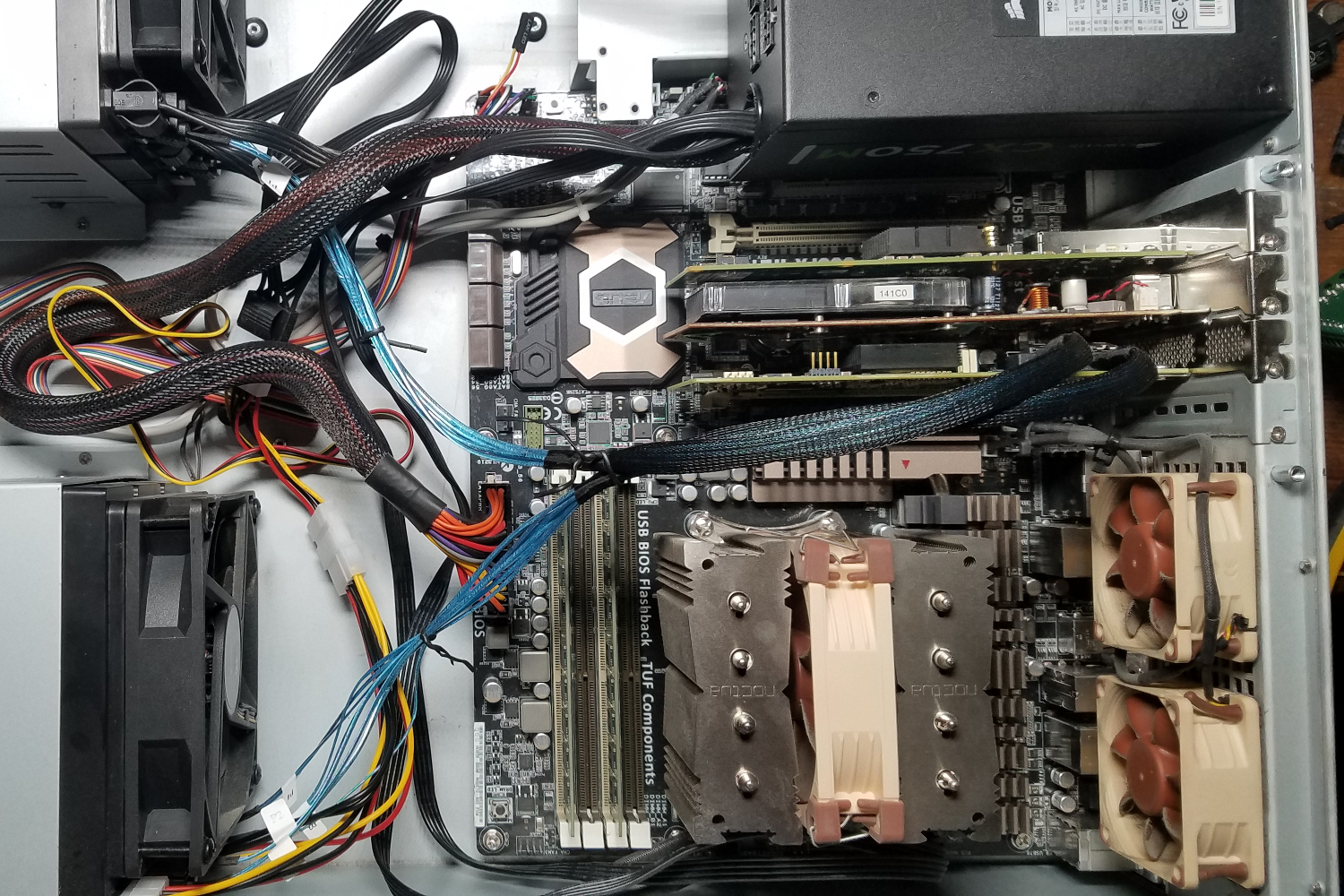

Missing from this image is the maximum bandwidth available to each device on the network. I’ll presume the switch is Gigabit, since that is the most common type of switch used in business and home networks.

The desktops are (likely) all Gigabit capable, and for simplicity I’ll assume the server is too. I’ll also assume the printer is a standard laser printer with only a 100 Mbps port, also called Fast Ethernet. Finally, this switch is connected to the larger network and the Internet via an uplink, which is also Gigabit.

Now in most Gigabit switches, unless you buy a really cheap one, the maximum internal throughput (often listed as the switching capacity) will be about the same as all of the ports combined running at full duplex, meaning each port can send and receive at full speed at the same time. So an 8-port switch can move 8Gbps in each direction, usually advertised as 16Gbps of switching capacity; a 16-port switch, 16Gbps each way (32Gbps advertised); and so on. This is to make sure everyone can talk to everyone else at the maximum supported throughput.

But what about that uplink? The port that supports the uplink is no different from any other port, so none of the devices on the switch can talk to the rest of the network or the Internet faster than the uplink allows. They all share the uplink’s bandwidth with each other, and that creates contention.
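
To put rough numbers on that contention, here is a minimal Python sketch. The port mix is an assumption (five desktops, the server, the printer, and the uplink filling an 8-port switch), since the exact desktop count isn’t stated, and the helper functions are hypothetical illustrations, not any tool’s API. The oversubscription ratio is simply the total access-port speed divided by the uplink speed; switching capacity counts both directions of every full-duplex port.

```python
# Assumed port mix for an 8-port Gigabit switch; the desktop count is hypothetical.
ACCESS_PORTS_MBPS = [1000] * 5 + [1000, 100]  # five desktops, the server, the Fast Ethernet printer
UPLINK_MBPS = 1000
PORT_COUNT = 8
PORT_SPEED_MBPS = 1000


def switching_capacity_mbps(port_count, port_speed_mbps):
    """Non-blocking fabric: every port sending AND receiving at line rate (full duplex)."""
    return 2 * port_count * port_speed_mbps


def oversubscription_ratio(access_speeds_mbps, uplink_mbps):
    """Worst case: how many times the access ports together could overrun the uplink."""
    return sum(access_speeds_mbps) / uplink_mbps


def fair_uplink_share_mbps(active_devices, uplink_mbps):
    """Rough per-device share if that many devices hit the uplink at the same time."""
    return uplink_mbps / active_devices


print(switching_capacity_mbps(PORT_COUNT, PORT_SPEED_MBPS))    # 16000
print(oversubscription_ratio(ACCESS_PORTS_MBPS, UPLINK_MBPS))  # 6.1
print(fair_uplink_share_mbps(5, UPLINK_MBPS))                  # 200.0
```

A ratio above 1 isn’t a design flaw by itself; it only hurts when enough devices push traffic through the uplink at once.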

This is why there are Gigabit switches with one or more 10GbE ports intended to serve as the uplink to the rest of the network. Since they are more expensive, these switches are typically used in enterprise and medium or large office networks. They can also be connected to larger 10GbE switches to create a backbone, all with the intent of alleviating contention on the internal network as much as possible.

But no device on the network can talk faster than its connection allows, regardless of how well you design the network. If you have a file or database server that sees a lot of traffic during the day, its clients can’t all talk to it at full bandwidth, because its link will be overwhelmed. There are ways to alleviate that contention, but you’re merely kicking the can down the road.

A well-designed network is one in which all devices can access whatever resources they need without significant delay, through a combination of switches and routers. It also makes sure the resources that will see significant traffic have the most bandwidth available, e.g. multiple 10GbE connections trunked into one pipe, also known as “link aggregation”, and that those resources are integrated into the network in a way that maximizes throughput and minimizes contention.
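
One detail worth knowing about link aggregation: switches generally spread traffic across the member links per flow, by hashing packet header fields, rather than per packet, so a single transfer usually can’t exceed one member link’s speed. Below is a minimal Python sketch of that idea, assuming a CRC32 hash over a flow’s addresses and ports; real hash policies are vendor- and configuration-specific, and the link names and `pick_member` function are hypothetical.

```python
import zlib

# Hypothetical bundle: four 10GbE member links aggregated into one logical pipe.
MEMBERS = ["10GbE-0", "10GbE-1", "10GbE-2", "10GbE-3"]


def pick_member(src_ip, dst_ip, src_port, dst_port, members=MEMBERS):
    """Map a flow to one member link by hashing its addresses and ports.

    Every packet of the same flow lands on the same member, which keeps the
    packets in order but also caps that one flow at a single member's speed.
    """
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}".encode()
    return members[zlib.crc32(key) % len(members)]


# Many clients talking to one file server are typically spread across the members,
# while any one client's transfer always sticks to the same member link.
for client in range(1, 9):
    src = f"10.0.0.{client}"
    print(src, "->", pick_member(src, "10.0.1.10", 50000, 445))
```

This is why aggregation helps a busy server most when it has many simultaneous clients.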

* * * * *

So what does all of that have to do with the Internet and Net Neutrality? A lot, in large part because those most advocating for Net Neutrality don’t understand why it was never going to work the way they wanted.

Let’s rewind a bit to show how this problem came about. Twenty (20) years ago, dial-up was still very prevalent and broadband (DSL and cable) wasn’t particularly common. Your ISP had a modem pool connected to a few servers that routed Internet connections through to the ISP’s trunk. The modems were very low bandwidth – 56 kbps at most, with actual speeds varying with distance and phone line quality – so ISPs didn’t need a lot of throughput.

Because everyone was connecting through a phone line, ISP competition was a no-brainer. It wasn’t unusual for a metropolitan area to have a few ISPs along with the phone company offering its own Internet service. Your Internet service wasn’t tied to whoever provided your phone line. Switching your ISP was as simple as changing the phone number and login details on your home computer.

Broadband and the “always on” Internet connection changed all of that. Now those who provide the connection to your house also provide the Internet service. And there is, unfortunately, no easy way to get away from that.

But the physical connection providing your Internet service is not much different from home phone service in how it is provided in your area. The line to your home leads to a junction box, which combines your line with several others into one larger trunk, either through a higher-bandwidth uplink or through multiplexing (also called “muxing”; the reverse is “demuxing”). Multiplexing, by the way, is also how audio and video are joined together into one stream of bits.
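
As an illustration of multiplexing, here is a minimal Python sketch of fixed time-slot multiplexing (TDM), which is only one of several schemes; which technique a given junction box uses isn’t specified here, and `tdm_mux`/`tdm_demux` are hypothetical names. Each subscriber line owns one slot per frame on the shared trunk, and the far end demultiplexes by slot position.

```python
from itertools import chain, zip_longest


def tdm_mux(lines):
    """Interleave one unit per line per frame into a single trunk stream.

    A line with nothing to send still owns its slot; the slot is filled with None.
    """
    frames = zip_longest(*lines)  # frame k holds the k-th unit from every line
    return list(chain.from_iterable(frames))


def tdm_demux(trunk, n_lines):
    """Recover each line from its fixed slot position, discarding idle filler."""
    return [[unit for unit in trunk[i::n_lines] if unit is not None] for i in range(n_lines)]


subscriber_lines = [["a1", "a2", "a3"], ["b1"], ["c1", "c2"]]
trunk = tdm_mux(subscriber_lines)
print(trunk)                # ['a1', 'b1', 'c1', 'a2', None, 'c2', 'a3', None, None]
print(tdm_demux(trunk, 3))  # [['a1', 'a2', 'a3'], ['b1'], ['c1', 'c2']]
```

The idle `None` slots show the trade-off of fixed slots: capacity reserved for a quiet line goes unused.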

The signal may pass through additional junction boxes before reaching your ISP’s regional switching center. The fewer the hops, the more bandwidth is available, since there is far less contention. Your home doesn’t have a direct line to the regional switching center, and as I’ll show in a little bit, it doesn’t need one.

The switching center routes your connection to the Internet along with any regional services, similar to the uplink from your home network to the ISP. The connection between the regional switching center and your home is referred to as the “last mile”.

Here’s a question: does the regional switching center have enough bandwidth to provide maximum throughput to all the “last mile” lines?

* * * * *

As I said at the top, your ISP oversells your data rate. They do not have enough bandwidth available to provide every household with their maximum throughput 24 hours a day, 7 days a week.

Almost no one is using their maximum throughput 24 hours a day. Very, very, very few use even close to that. And a majority of households don’t use a majority of their available bandwidth. We overbuy because the ISP oversells. But that bandwidth does come in handy for those times you need it – such as when downloading a large game or updates for your mobile, computer, or game console.

So ISPs do not need enough bandwidth to provide every household with its full throughput around the clock. And there’s another reason why they won’t ever get to that level: idle hardware.

The hardware at the switching centers and junction boxes across the last mile is expensive to acquire, takes up space, and consumes power. So ISPs won’t acquire more hardware than they require to provide adequate service to their customers. The fact that most households don’t use most of their available bandwidth most of the time is what allows this to work.
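
The statistics behind “most households don’t use most of their bandwidth most of the time” can be sketched with a simple binomial model. This is a minimal Python sketch under loudly stated assumptions: each household is independently saturating its plan with probability `P_BUSY` at peak, and the ISP accepts a small chance (`MAX_OVERFLOW`) that demand exceeds capacity. The 500 households, the 5% figure, and the 0.1% target are invented for illustration, not ISP data.

```python
from math import comb

# Illustrative assumptions only, not measured ISP figures.
HOUSEHOLDS = 500
PLAN_MBPS = 1000
P_BUSY = 0.05         # chance a given household is saturating its plan at peak
MAX_OVERFLOW = 0.001  # accepted chance that demand exceeds provisioned capacity


def prob_more_than(k, n, p):
    """P(more than k of n independent households are busy at once), a binomial tail.

    Direct summation; fine for a few hundred households, but very large n
    would need a log-space calculation to avoid float overflow from comb().
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1, n + 1))


def busy_households_to_provision(n, p, max_overflow):
    """Smallest k such that P(more than k busy) <= max_overflow."""
    for k in range(n + 1):
        if prob_more_than(k, n, p) <= max_overflow:
            return k
    return n


k = busy_households_to_provision(HOUSEHOLDS, P_BUSY, MAX_OVERFLOW)
sold_mbps = HOUSEHOLDS * PLAN_MBPS
provisioned_mbps = k * PLAN_MBPS
print(f"sold {sold_mbps} Mbps, provision {provisioned_mbps} Mbps, "
      f"ratio {sold_mbps / provisioned_mbps:.1f}:1")
```

The independence assumption is exactly what sustained heavy traffic breaks: raising `P_BUSY` shows how quickly the capacity needed for the same overflow target climbs.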

Which means, then, that problems can arise when this is no longer the case. Enter peer-to-peer networking.

* * * * *

Comcast outright blocking BitTorrent is an oft-cited example of why we “need” Net Neutrality. A lot of people do not know how BitTorrent works. Let me put it this way: it’s in the name.

Peer-to-peer protocols like BitTorrent are designed to maximize download speeds by saturating an Internet connection – hence the name, bit torrent. A few people using BitTorrent around the clock isn’t itself enough to create a problem, since ISPs have the bandwidth to allow for a few heavy users. When it becomes quite a bit more than just “a few”, however, their use affects everyone else on the ISP’s network.
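
A back-of-the-envelope Python sketch of why “a few” heavy users are fine and many are not, assuming a shared node with a fixed capacity, an ordinary peak demand from everyone else, and heavy users who each keep a fixed rate saturated around the clock. All three numbers and both function names are hypothetical.

```python
# Hypothetical neighborhood node; none of these figures are real ISP data.
NODE_CAPACITY_MBPS = 10_000
ORDINARY_PEAK_MBPS = 8_000  # what everyone else uses at the evening peak
HEAVY_RATE_MBPS = 100       # each heavy user keeps this saturated, around the clock


def heavy_user_headroom(capacity, ordinary_peak, heavy_rate):
    """How many always-on heavy users fit in the spare capacity at peak."""
    spare = capacity - ordinary_peak
    return max(0, spare // heavy_rate)


def peak_shortfall(capacity, ordinary_peak, heavy_rate, heavy_users):
    """Demand that can't be met at peak; it comes out of everyone's throughput."""
    return max(0, ordinary_peak + heavy_users * heavy_rate - capacity)


print(heavy_user_headroom(NODE_CAPACITY_MBPS, ORDINARY_PEAK_MBPS, HEAVY_RATE_MBPS))  # 20
for heavy_users in (5, 20, 50):
    shortfall = peak_shortfall(NODE_CAPACITY_MBPS, ORDINARY_PEAK_MBPS, HEAVY_RATE_MBPS, heavy_users)
    print(heavy_users, shortfall)  # 5 -> 0, 20 -> 0, 50 -> 3000
```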

Video streaming – e.g. Netflix, Hulu, YouTube – creates similar contention. Most video streaming protocols are designed to stream video up to either a client-selected maximum quality, or whatever the bandwidth will allow. Increasing video quality and resolution only creates more contention for the available bandwidth.
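
A minimal Python sketch of the throughput-based quality selection described above. The bitrate ladder, the 0.8 safety margin, and `choose_rendition` are assumptions for illustration; real players use more elaborate adaptive-bitrate algorithms, and each service sets its own ladders.

```python
# Hypothetical bitrate ladder in kbps; real services choose their own per title.
LADDER_KBPS = {"480p": 1_500, "720p": 3_000, "1080p": 5_000, "2160p": 15_000}


def choose_rendition(measured_kbps, user_cap=None, safety=0.8, ladder=LADDER_KBPS):
    """Pick the highest rendition that fits within a safety margin of measured throughput.

    user_cap is an optional viewer-selected maximum (a key of `ladder`);
    an unknown cap is ignored. If nothing fits, fall back to the lowest rendition.
    """
    budget = measured_kbps * safety
    renditions = sorted(ladder.items(), key=lambda item: item[1])  # lowest bitrate first
    best = renditions[0][0]
    for name, rate in renditions:
        if rate <= budget:
            best = name
        if name == user_cap:
            break  # never go above the viewer's chosen maximum
    return best


print(choose_rendition(25_000))                   # 2160p
print(choose_rendition(25_000, user_cap="720p"))  # 720p
print(choose_rendition(5_000))                    # 720p
print(choose_rendition(1_000))                    # 480p
```

When every viewer on a shared link has the bandwidth to reach the top rung, aggregate demand rises with each step up in resolution.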

ISPs engage in “traffic shaping” to mitigate the problem. Traffic shaping is similar to another concept with which all network administrators and engineers are hopefully familiar: Quality of Service, or QoS. It is used to prioritize certain traffic over other traffic – e.g. a business or enterprise will prioritize VoIP traffic and traffic to and from critical cloud-based services over other network traffic. It can also be used to deprioritize, limit, or block certain traffic – e.g. businesses throttling YouTube or other video streaming services to avoid contention with critical services.
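
Token buckets are one common building block for this kind of rate limiting. Below is a minimal Python sketch, assuming a 1 Mbps limit and a burst allowance of one 1,500-byte packet; the class is illustrative, not the mechanism any particular ISP or vendor uses. A shaper would queue a non-conforming packet until enough tokens accumulate, while a policer would drop it; this sketch only makes the conform/exceed decision.

```python
class TokenBucket:
    """Token-bucket rate limiter.

    rate_bps:   long-term average rate allowed, in bits per second
    burst_bits: bucket depth, i.e. the largest burst allowed at once
    """

    def __init__(self, rate_bps, burst_bits, start=0.0):
        self.rate = rate_bps
        self.capacity = burst_bits
        self.tokens = burst_bits  # start with a full bucket
        self.last = start

    def allow(self, packet_bits, now):
        """Return True if a packet of packet_bits conforms at time `now` (seconds)."""
        # Add tokens for the elapsed time, but never beyond the bucket depth.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if packet_bits <= self.tokens:
            self.tokens -= packet_bits
            return True
        return False  # exceeds the limit: a shaper queues it, a policer drops it


PACKET_BITS = 1500 * 8  # one full-size Ethernet payload
bucket = TokenBucket(rate_bps=1_000_000, burst_bits=PACKET_BITS)
print(bucket.allow(PACKET_BITS, now=0.000))  # True  (bucket starts full)
print(bucket.allow(PACKET_BITS, now=0.001))  # False (only 1,000 bits refilled)
print(bucket.allow(PACKET_BITS, now=0.020))  # True  (refilled up to the 12,000-bit cap)
```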

And that traffic shaping – blocking or limiting certain traffic – was necessary to keep a few heavy users from making it difficult for everyone else to use the Internet service they were paying for. It regulated certain services to ensure a relative few weren’t spoiling it for everyone else.

Yet somehow that detail is often lost in discussions on Net Neutrality. And misconceptions, misrepresentations, and misunderstandings lead to bad policy.

* * * * *

So let’s talk “fast lanes” for a moment.

There has been a lot of baseless speculation, fearmongering, and doomsday prediction around this concept, and around what could happen to Internet service in the United States should Net Neutrality be allowed to completely expire.

While “fast lanes” have been portrayed as ISPs trying to extort money from Netflix, there’s actually a much more benign motive here: getting Netflix and others to help upgrade the infrastructure needed to support their video streaming. After all, it was the launch of Netflix’s streaming service, and its eventual consumption by millions of people, that led to massive degradation in Internet service for millions of other customers who weren’t streaming Netflix or much else.

As a means of alleviating traffic congestion, many metropolitan areas have built HOV (“high-occupancy vehicle”) lanes to encourage carpooling, though how successful they are is highly debatable. The “fast lane” concept for the Internet was similar. But when the idea was first floated, many took it to mean that ISPs were going to artificially throttle websites that don’t pay up, a presumption mirrored in the Net Neutrality policy at the FCC. What it actually meant was providing a separate bandwidth route for bandwidth-intensive applications, specifically video streaming.

The reason for this comes down to the structure of the Internet as a whole, which, from the perspective of a typical home connection, resembles one giant tree network. Recall from the discussion of network design above that the devices and services that see the most traffic should be integrated into the larger network in a way that maximizes throughput to those services while minimizing contention and bottlenecks for everything else. This can be achieved in multiple ways.

“Fast lanes”, contrary to popular belief, were intended to route the traffic for the most bandwidth-intensive services around everyone else: an HOV lane, of sorts. Yet the idea was portrayed as ISPs trying to extort money from websites by artificially throttling them unless they paid up, or even outright blocking access to those websites from their regional networks.

Yeah, that’s not even close to what was going to happen.

* * * * *

The “fast lanes” were never implemented, though. So what gives? Does that mean the fearmongers were right and ISPs were planning to artificially throttle or block websites to extort money? Not even close. Instead, the largest Internet-based companies put into practice another concept: co-location, combined with load balancing.

Let’s go back to Netflix on this.

Before streaming, Netflix was known for their online DVD rentals, and specifically their vast selection. Initially they didn’t have very many warehouses where they stored, shipped, and received the DVDs (and eventually Blu-rays).

But as their business expanded, they branched out and opened new warehouses across the country, and eventually across the world. They didn’t exactly have a lot of choice in the matter: one warehouse can only ship so many DVDs, in part because there is a ceiling (quite literally, actually) to how many discs can be housed in a warehouse, and how many people can be employed to package and ship them out and process returns.

This opened up new benefits for both Netflix and their customers. With warehouses in more places, more customers received their movies faster than before, since they were now closer to a Netflix warehouse. More warehouses also meant Netflix could process more customer requests and returns.

Their streaming service was no different. At the start, not many customers were streaming their movies, in large part because there weren’t many ways to do it. Xbox Live was one of the early options, and at the time you needed a Live Gold subscription to stream Netflix on it. So having their streaming service coming from one or two data centers wasn’t a major concern.

That changed quickly.

One of the precursor events was Sony announcing support for Netflix streaming on the PlayStation 3 in late 2009. Initially, you had to request a software disc, as the Netflix app wasn’t available through the PlayStation Store – I still have mine somewhere. The software also did not require an active PlayStation Network subscription.

Alongside the game console support was Roku, a device initially made just to stream Netflix. I had a first-generation Roku HD-XR. The device wasn’t cheap, and you needed a very good Internet connection to use it. Back when I had mine, the fastest Internet connection available to me was a 20 Mbps cable service through Time Warner. Google Fiber wasn’t even on the horizon yet.

So streaming wasn’t a major problem early on. While YouTube was supporting 720p and higher, most videos weren’t higher than 480p. But as more people bought more bandwidth along with the Roku devices and game consoles, contention started to build. Amazon and Hulu were also becoming major contenders in the streaming market, and additional services were springing up as well, though Netflix was still on top.

So to get the regional ISPs off their back, Netflix set up co-location centers across the country and in other parts of the world. Instead of everything coming from only one or a few locations, Netflix could divide their streaming bandwidth across multiple locations.

Load balancing servers at Netflix’s primary data center determine which regional data center serves your home based on your ISP and location – both of which can be inferred from your IP address. Google (YouTube), Amazon, and Hulu do the same. Just as major online retailers aren’t shipping all of their products from just one or a few warehouses, major online content providers aren’t serving their content from just one or a few data centers.
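
A minimal Python sketch of IP-based site selection using longest-prefix matching with the standard `ipaddress` module. The steering table is hypothetical: the prefixes are reserved documentation ranges and the site names are invented. Real request-steering systems weigh many more signals (server load and health, ISP interconnections, measured latency), and IP-based location is approximate.

```python
import ipaddress

# Hypothetical steering table. The prefixes are reserved documentation ranges
# (RFC 5737), and the site names are invented; neither reflects any real provider.
PREFIX_TO_SITE = {
    ipaddress.ip_network("198.51.100.0/24"): "isp-a-regional-cache",
    ipaddress.ip_network("203.0.113.0/24"): "isp-b-regional-cache",
    ipaddress.ip_network("203.0.113.128/25"): "isp-b-metro-cache",
}
DEFAULT_SITE = "primary-datacenter"


def pick_site(client_ip):
    """Route a client to the site mapped to the most specific prefix containing its address."""
    addr = ipaddress.ip_address(client_ip)
    matches = [net for net in PREFIX_TO_SITE if addr in net]
    if not matches:
        return DEFAULT_SITE  # unknown client: serve from the central location
    longest = max(matches, key=lambda net: net.prefixlen)
    return PREFIX_TO_SITE[longest]


for ip in ("198.51.100.7", "203.0.113.5", "203.0.113.200", "192.0.2.1"):
    print(ip, "->", pick_site(ip))
```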

This significantly alleviates bandwidth contention and results in better overall service for everyone.

* * * * *

But let’s get back to the idea of Net Neutrality and why what its proponents expect or demand isn’t possible. I feel I’ve already adequately shown how it would never work the way people expect, so let’s summarize.

First, ISPs do not have enough bandwidth to give each customer their full bandwidth allocation 24/7. That alone means Net Neutrality is dead in the water, a no-go concept. ISPs have to engage in traffic shaping, along with employing different pricing structures to keep a few customers from interfering with everyone else’s service.

Unfortunately, the fearmongering over the concept has crept into the public consciousness. With Net Neutrality now officially dead at the Federal Communications Commission – despite attempts in Congress and at the state level to revive it – a lot of people will likely start accusing ISPs of throttling over any amount of buffering they see while trying to watch an online video.

Anything that looks like throttling will likely result in customers publicly accusing their ISP online of nefarious things, because people don’t understand the structure of the Internet and how everything actually works. And that same structure also spells doom for the various demands placed on ISPs in the name of “Net Neutrality”.
