Recently I discussed my move from VMware’s ESXi virtualization setup to Proxmox. In that article, I discussed eventually migrating everything from the existing setup – an old HP Z600 with dual Xeon E5520s – to a new setup.

Or at least newer:

- CPUs: 2xAMD Opteron 6278

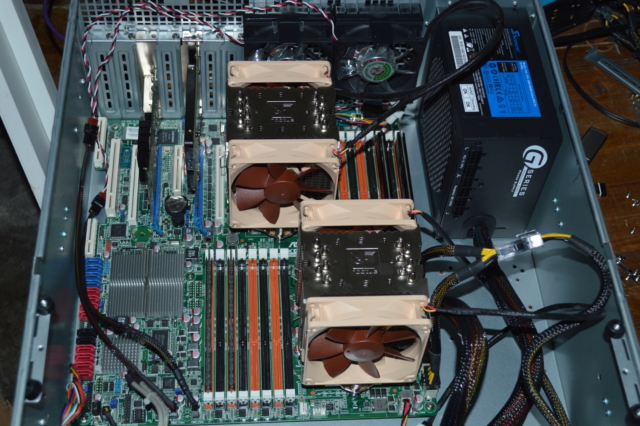

- Cooling: Noctua NH-U9DO A3

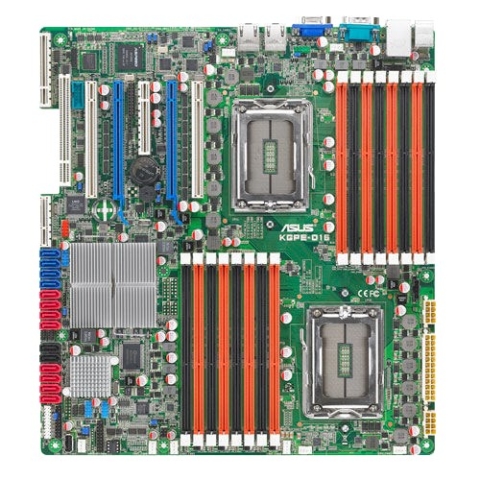

- Mainboard: ASUS KGPE-D16

- RAM: 32GB DDR3 (not ECC)

- Chassis: PlinkUSA IPC-E450B (also available in red or blue)

- Storage: Samsung 850 EVO M.2 500GB

Eventually I’ll be putting more memory into this, but with DDR3 prices suffering the same fate as DDR4 right now, it’ll be interesting. I’m still looking to add another 16GB (2x8GB) to max out the ECC RAM on my NAS. Perhaps some more eBay shopping is in order. Anyway…

The Opteron 6278 is a Bulldozer processor (like the FX-8100 series) and part of the Interlagos lineup. It has 16 cores at 2.4GHz, can turbo up to 3.3GHz (full-load turbo is 2.7GHz), has a 115W TDP, and was released in 2012. There are mainboards that can take four (4) of these. As tempting as that was, I didn’t need or want to go that far. The dual-processor mainboards were expensive enough. As were the processors – they still go for more than 100 USD each.

The dual Xeon E5520 gives 16 logical processors – 4 cores each with HyperThreading. The dual Opteron 6278 gives 32 cores, more than doubling my current processor capacity – HyperThreading isn’t the same thing as having two physical cores.

While ECC is recommended, the mainboard and processors support non-ECC RAM, up to 8GB per module, 64GB per processor. Same if using unregistered ECC RAM. If using registered ECC, it supports up to 16GB per module, 128GB per processor.
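As a quick sketch of what those limits work out to, the arithmetic below derives the number of slots per processor and the board-wide maximum for each memory type. The figures are the ones quoted above; the dictionary and function names are just illustrative.

```python
# Per-module and per-processor limits in GB, as quoted in the text above.
LIMITS_GB = {
    "non-ECC / unregistered ECC": {"per_module": 8, "per_cpu": 64},
    "registered ECC": {"per_module": 16, "per_cpu": 128},
}
SOCKETS = 2  # dual-socket board


def capacity(per_module: int, per_cpu: int, sockets: int = SOCKETS) -> dict:
    """Derive slots per CPU and the maximum RAM across all sockets (GB)."""
    return {
        "slots_per_cpu": per_cpu // per_module,
        "board_max_gb": per_cpu * sockets,
    }


for kind, limits in LIMITS_GB.items():
    result = capacity(**limits)
    print(f"{kind}: {result['slots_per_cpu']} slots per CPU, "
          f"{result['board_max_gb']} GB across both sockets")
```

Either way the limits imply eight slots per processor, so the 32GB currently installed leaves plenty of headroom.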

Speaking of eBay

I acquired the processors and mainboard through eBay. About the only place I wasn’t going to spend an arm and a leg for the hardware. Though I still kind of did. And I had a couple unexpected extras with the mainboard.

The seller shipped it with two Opteron 6128 processors – 8 cores each, part of the “Magny-Cours” lineup released in 2010. But the bigger surprise was discovering it also came with an ASUS ASMB4 iKVM module.

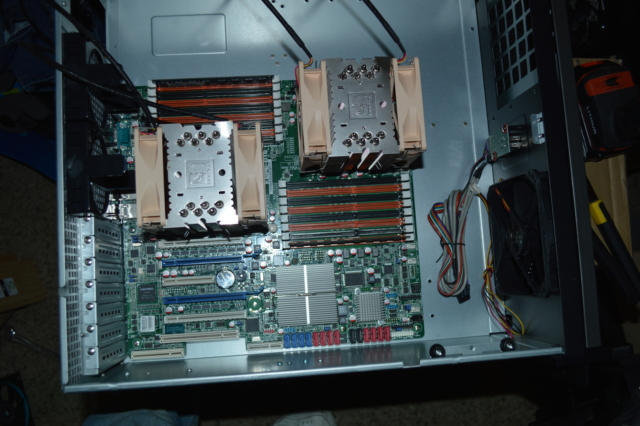

Dual sockets means dual 8-pin EPS sockets. The power supply I was using for the initial testing had only one EPS plug. Micro Center to the rescue with an 8-pin EPS Y-splitter.

But the mainboard proved to be a little bit of a pain.

My initial testing was with just one processor. Mainly because I had only one adequate cooler lying around that I could stick to it – for those wondering, it was the AMD stock cooler they distributed with the FX-8350 when I bought it in 2013. But despite every attempt – resetting CMOS, even popping the battery (which I would later discover was dead) – I couldn’t get the damn thing to POST.

But several steps managed to get it to respond. I’m not sure how, but at least it worked.

First I removed everything from the mainboard – no CPUs, no RAM – and attempted to power on the board. As expected, nothing. So I seated one of the 6128s – no heatsink, as this was just a test – and attempted to power on. One long, two short beeps: RAM not detected. Finally getting somewhere!

Replaced the 6128 with one of the 6278 processors and attempted power on. Same result. Added in the pair of RAM modules in the slots the manual suggested, added the heatsink and fan for good measure. And it responded and POSTed.

And when I got into the BIOS setup screen, I discovered the board had already been updated to the latest BIOS revision available. But testing didn’t end there since I had two CPUs to test. The second one worked as well, and I found a second FX-8350 cooler to temporarily use with it to make sure all 32 cores would be detected.
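For anyone wanting to script that check from a live environment rather than eyeballing it, here is a minimal sketch in Python. It assumes the operating system reports each Opteron core as one logical CPU, so two 6278s should show up as 32; the function name is hypothetical.

```python
import os

EXPECTED_LOGICAL_CPUS = 32  # two Opteron 6278s at 16 cores each


def all_cores_detected(detected, expected=EXPECTED_LOGICAL_CPUS):
    """Return True when the OS reports exactly the expected CPU count."""
    # os.cpu_count() can return None if the count cannot be determined.
    return detected is not None and detected == expected


if __name__ == "__main__":
    count = os.cpu_count()
    status = "OK" if all_cores_detected(count) else "MISMATCH"
    print(f"Detected {count} logical CPUs, "
          f"expected {EXPECTED_LOGICAL_CPUS}: {status}")
```

A mismatch here would point at a badly seated processor or a BIOS setting rather than anything in the operating system.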

Fedora 27 Live is painful with the onboard VGA. Which uses only 8MB RAM. Wrap your head around that. Hell, the first graphics card I owned was a 1MB PCI graphics card. That’s right, not PCI-Express. PCI. That was in a used 486 machine I bought almost 20 years ago.

Selecting the chassis and cooling

Let’s talk abbreviations for a moment.

The mainboard I selected is an EEB mainboard, which is basically an E-ATX board with two sockets. It is a standard E-ATX size: 13″x12″. So I need a chassis that supports that size. There are several desktop chassis that do – my wife’s Corsair 750D being one.

But since I have a rack, might as well go with a rack chassis. To that end, I went back to PlinkUSA. Seems to be a pattern. Specifically looking at their IPC-E450 along with a set of rails. The chassis is 4U and about 18″ long – seems kind of short for a 13″ mainboard, and I do regret in part using that chassis.

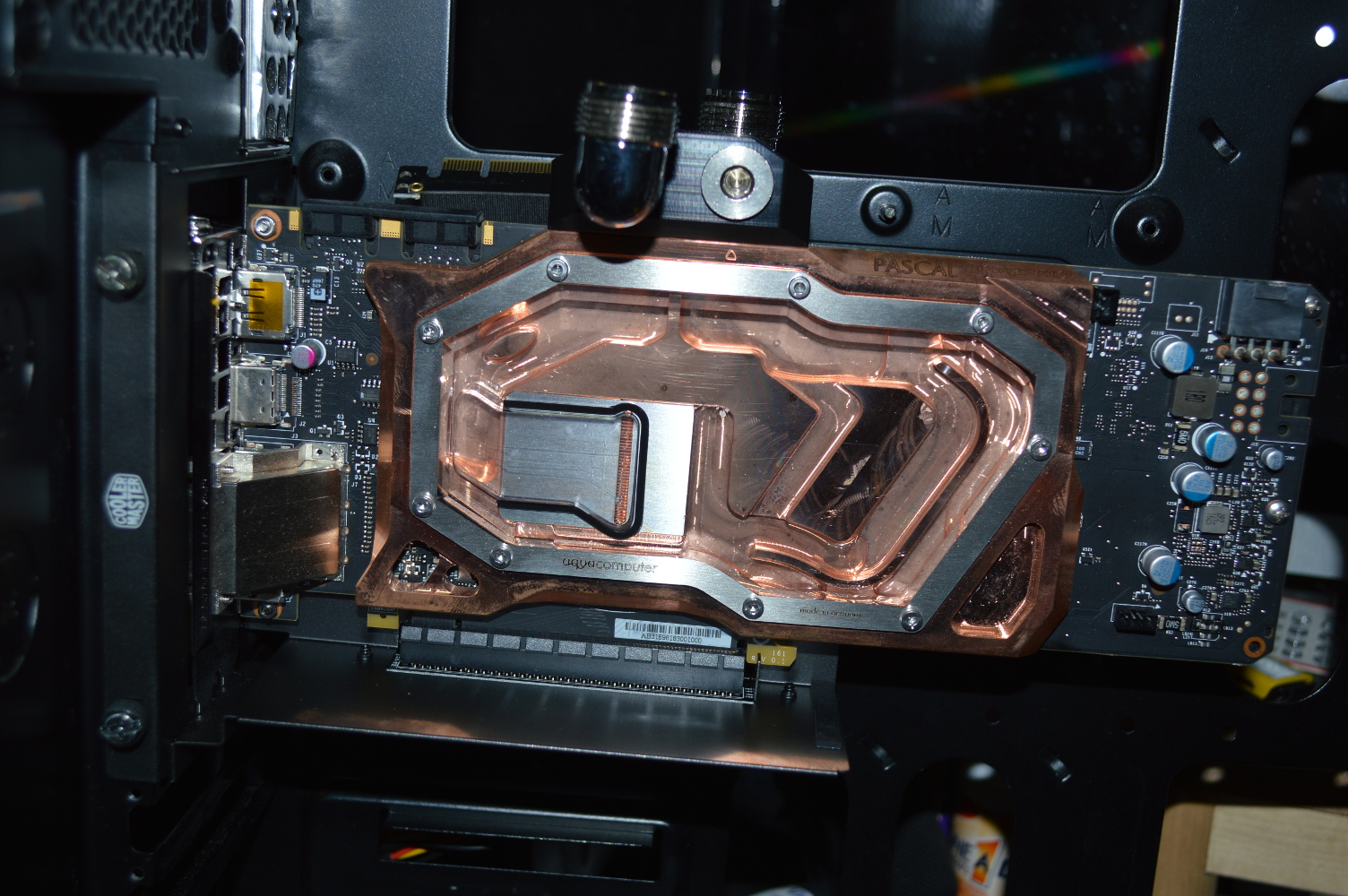

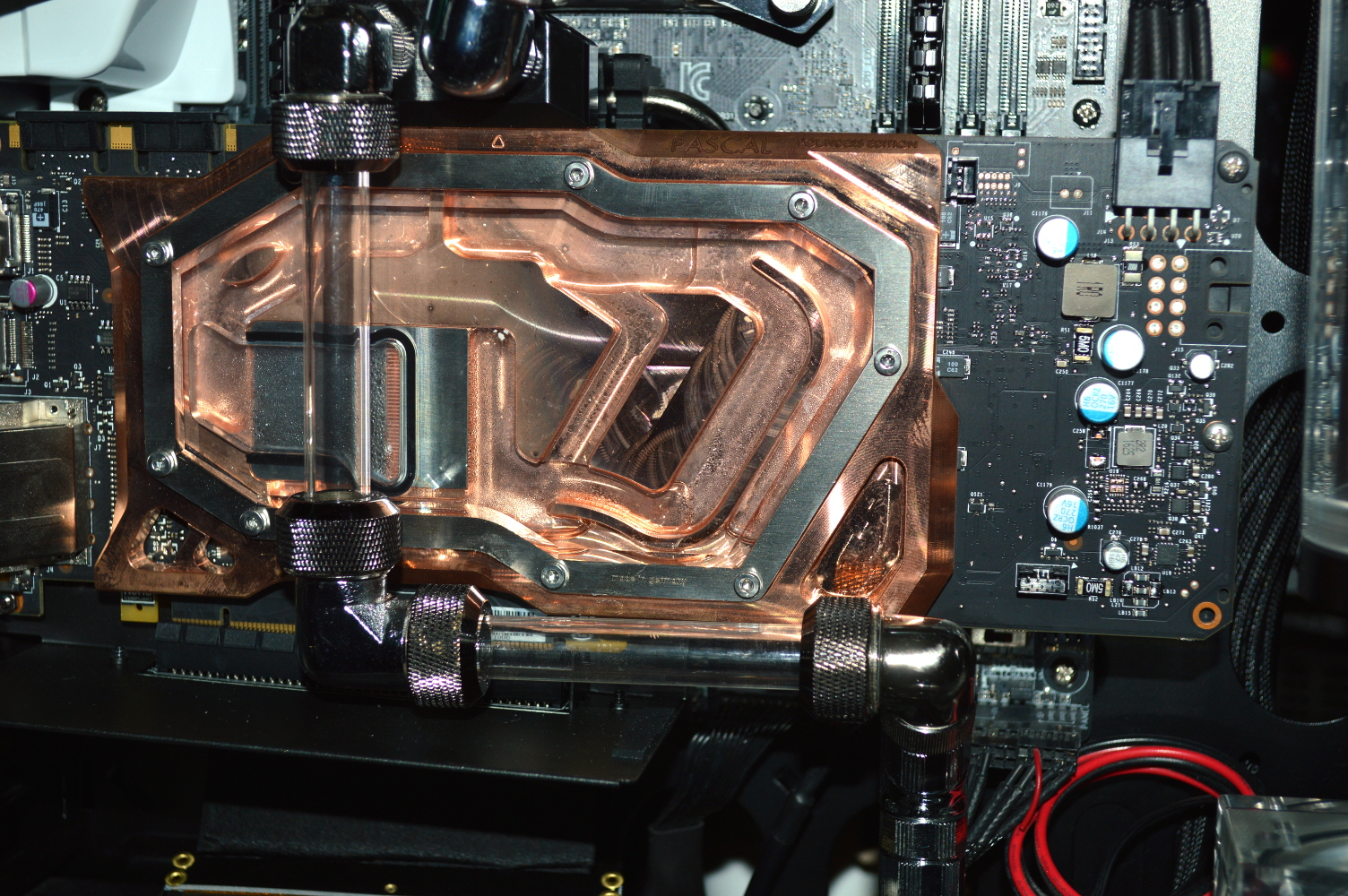

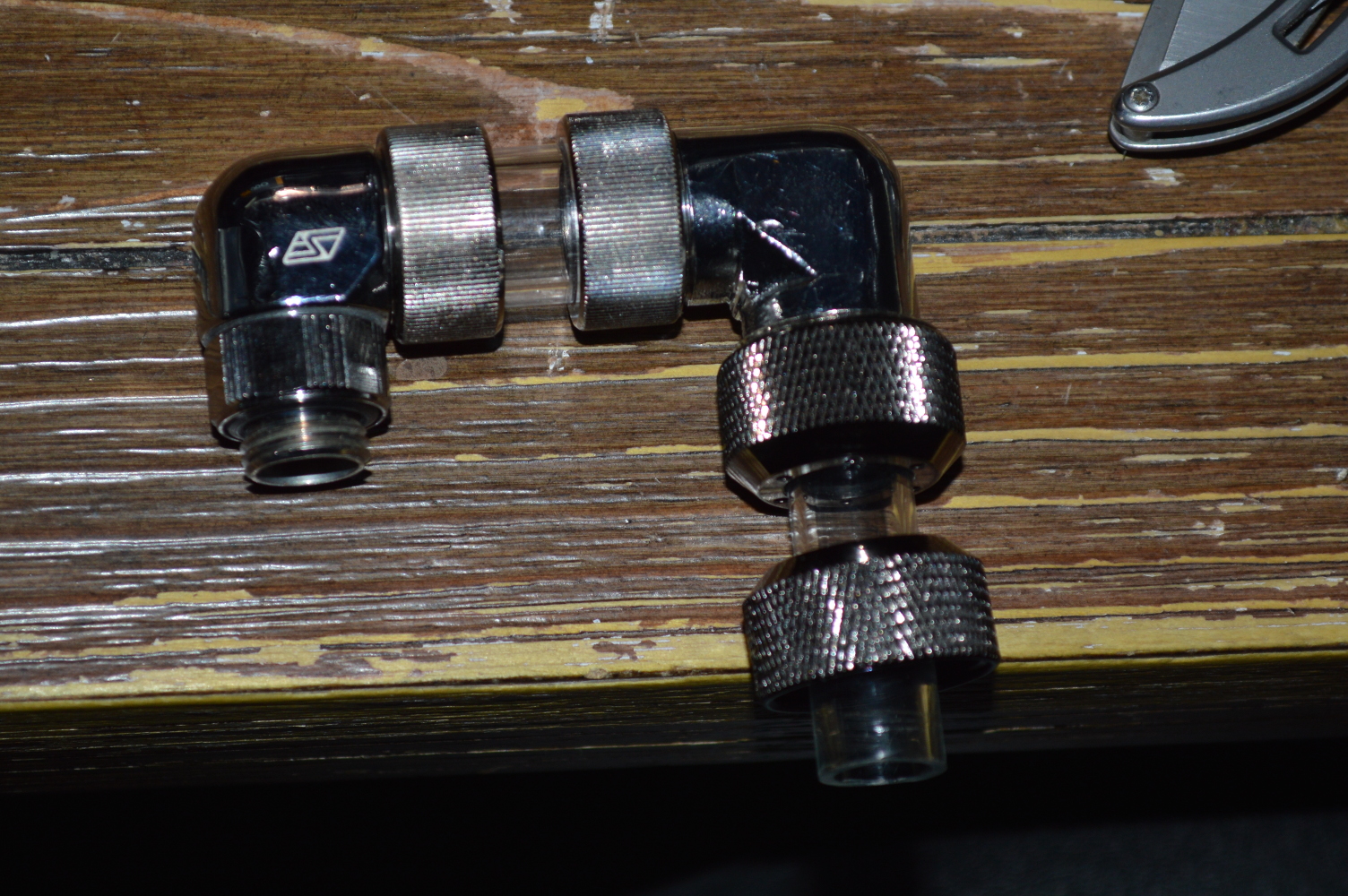

On cooling the Opterons, I had really only two options. Perhaps three. I say perhaps as the last option was water cooling, specifically using two Koolance CPU-380A blocks and the G34 mounting bolts. EK does have a version of their Supremacy for C32 and G34 sockets, provided you can find it, but I still have two Koolance blocks from a previous version of Absinthe and β Ori.

On the air cooling front supporting 115W processors, the options are also very thin unless you try to DIY something. Two companies still have options available: Noctua and Dynatron:

- Noctua NH-U9DO A3 (92mm fan) and NH-U12DO A3 (120mm fan)

- Dynatron A13 (60mm fan) and A14 (80mm fan)

The Dynatron options were intriguing at first, mainly because of their price, but I was concerned with noise. So I decided to spring for the Noctua cooler, specifically the NH-U9DO A3, ordering through QuietPC. The NH-U12DO FAQ says to not use it in a 4U chassis unless you can guarantee clearance.

Storage options and building the system

Due to the layout of the mainboard and the coolers I chose, the chassis basically had to be stripped down to practically nothing. And upon noticing that standoffs were needed for the mainboard, I was glad to go with the 92mm fan Noctua cooler instead of the 120mm fan cooler. Again, don’t use the 120mm version in a 4U chassis unless you can guarantee clearance.

Stripping the chassis down to nothing also meant no place to mount storage. While I’ve had loose SSDs in builds before, I didn’t want the extra cables if I could avoid it. But since this mainboard predates M.2 slots, I couldn’t take the SSD out of its M.2 to SATA enclosure. So what to do instead?

The only other option, then, was using a PCI-E card for 2.5″ SATA drives. Getting rid of cables isn’t the only reason for this. I’m using a Samsung 850 EVO M.2, which is a SATA III SSD, and the SATA ports on the mainboard are only SATA II. So the PCI-E card will at least allow the SSD to achieve almost its full throughput. The remaining bottleneck isn’t significant, certainly not worth paying twice as much for the x2 card to alleviate.
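The throughput reasoning above can be sketched with some quick arithmetic. This assumes the x1 card is PCIe 2.0, which isn't stated above, and it only compares theoretical link rates after 8b/10b line encoding, not real-world SSD speeds. The function name is illustrative.

```python
def usable_mb_per_s(line_rate_gbit: float,
                    encoding_efficiency: float = 8 / 10) -> float:
    """Convert a raw line rate (Gbit/s) to usable MB/s after line encoding.

    SATA II, SATA III, and PCIe 2.0 all use 8b/10b encoding, so only
    8 of every 10 bits on the wire carry data.
    """
    return line_rate_gbit * 1000 * encoding_efficiency / 8


LINKS = {
    "SATA II (3 Gbit/s)": usable_mb_per_s(3.0),
    "SATA III (6 Gbit/s)": usable_mb_per_s(6.0),
    # Assumption: the x1 card is PCIe 2.0, i.e. 5 GT/s on a single lane.
    "PCIe 2.0 x1 (5 GT/s, assumed)": usable_mb_per_s(5.0),
}

for name, rate in LINKS.items():
    print(f"{name}: about {rate:.0f} MB/s")
```

Under that assumption the x1 card sits between the onboard SATA II ports and the SSD's native SATA III link, which is why it recovers most, but not all, of the drive's throughput.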

For networking I grabbed a dual-port 10GbE SFP+ card left over from my attempt at a custom 10GbE switch. Currently only one of the ports is plugged into the switch, but the other will be plugged up once I get cables for that. Yes, cables. Plural. As the only spare cable I had that could reach is 10m. I need a couple 2m cables – one to alleviate tension on the cable connecting the NAS – along with LC keystone couplers to use the patch panel on the rack.

Initially I had the cards in the blue PCI-E slots on the mainboard, with the 10GbE card in the slot nearest the processors. The system wouldn’t POST doing this, and would only keep resetting when initializing the network card. So I swapped them around and put the SATA card nearest the CPUs.

Finished system

With everything working, I put the system into the rack and installed Proxmox VE – by the way, using DD mode with Rufus works for generating an installation USB.

Once that was installed it was a matter of recreating the four VMs I initially had on the system. With the Docker system, it was easy to recreate the Docker containers from backups. The Plex server was easy to restore as well.

So what do I have planned for this?

I wanted more processor room to run small virtual clusters with Apache Mesos, Docker Swarm, MPI, and things like that. And I may pull the Z600 machine back online for a similar purpose rather than getting rid of it completely. Whatever I do will likely be the subject of future articles, so stay tuned.
