Build Log:

Since the articles for this project are still getting hits, I figured it’s time to follow up and talk about what ultimately happened with it.

In short: nothing really happened with it. I’m not sure whether it was the SATA port multiplier, the eSATA controller, or the cable, but for whatever reason the setup just wouldn’t stay stable.

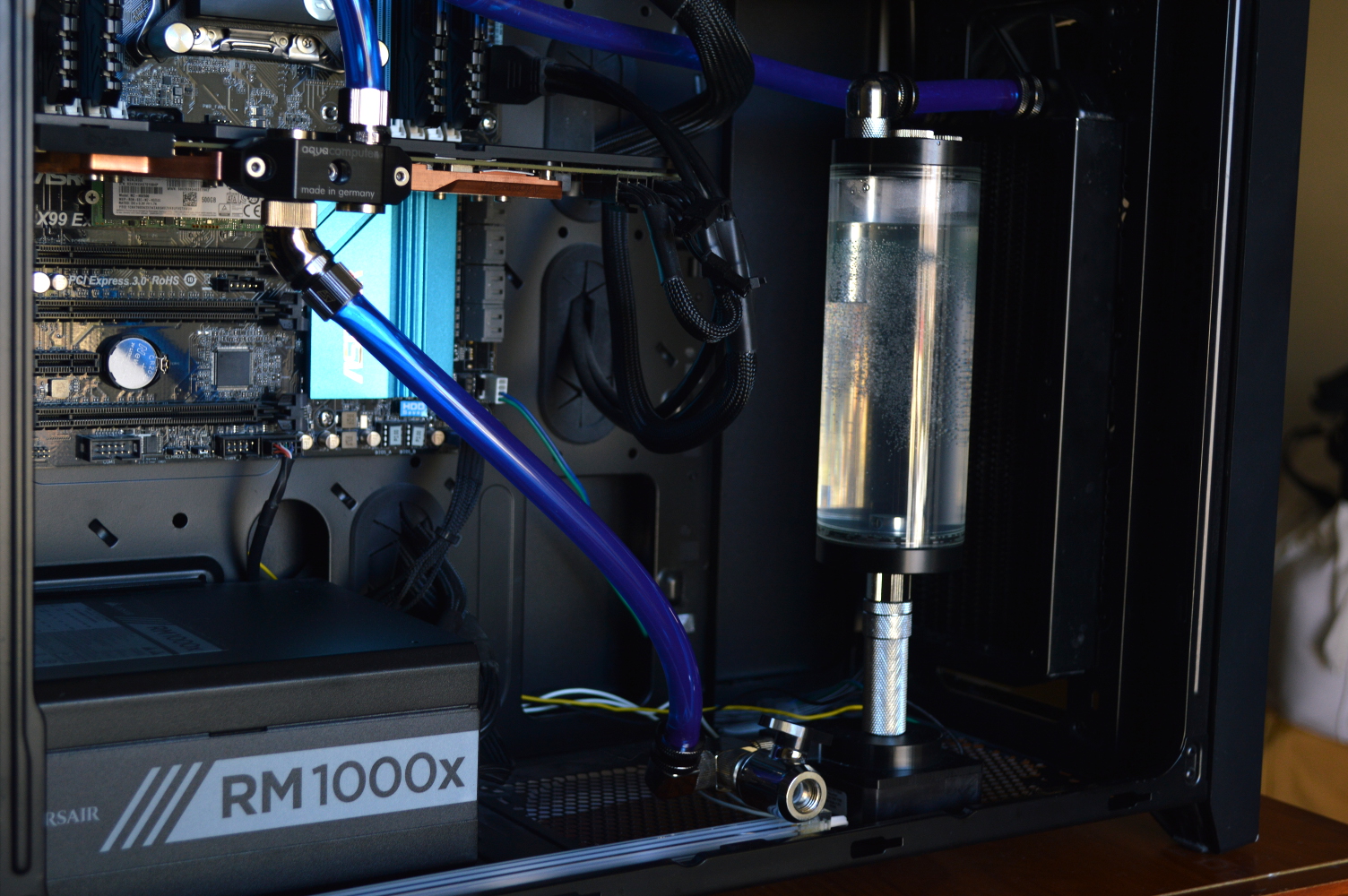

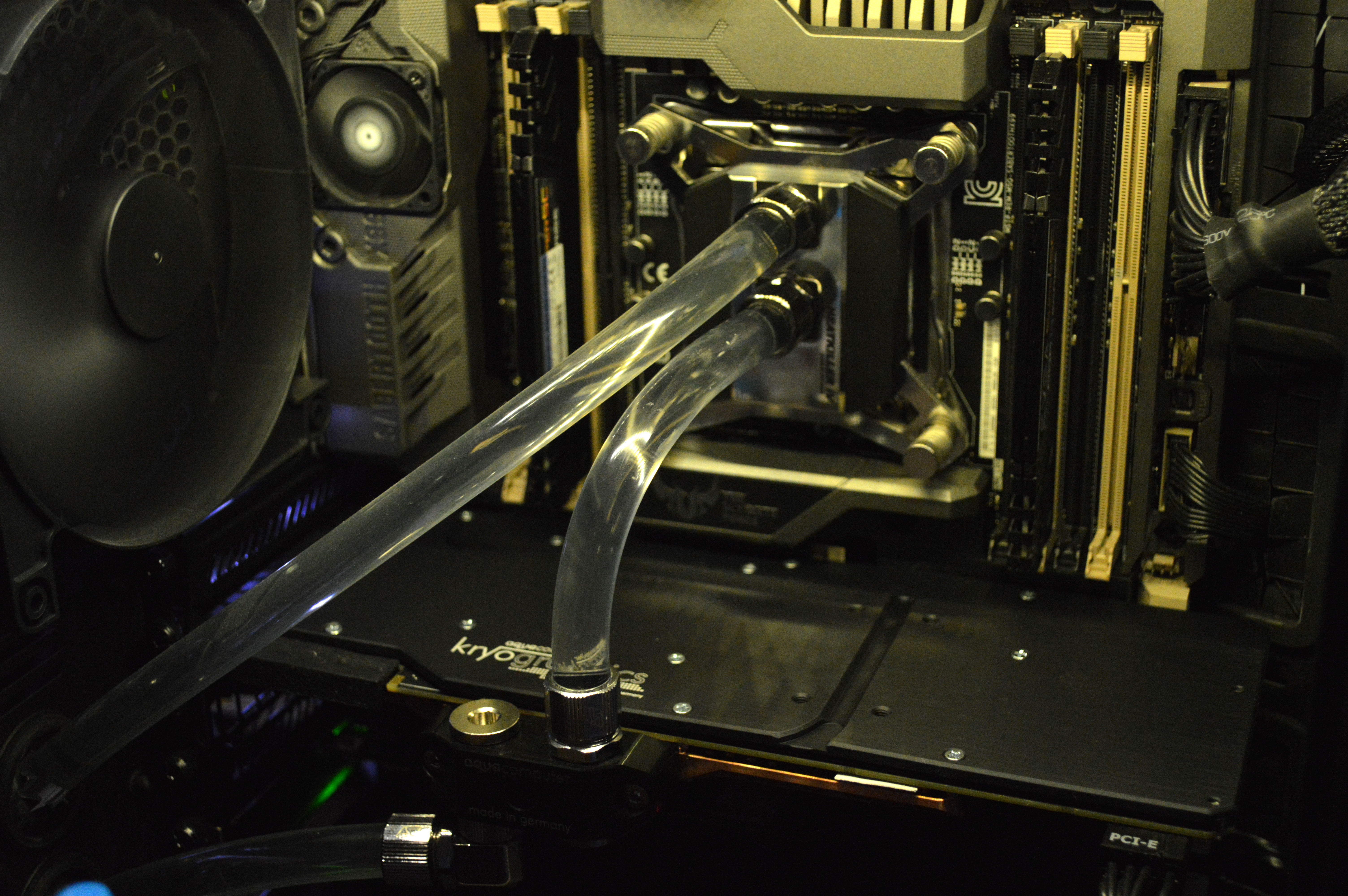

But I did keep using an external eSATA hard drive as my primary drive instead of relying on something inside the case. This was partly due to the amount of water-cooling hardware inside the chassis, a Corsair Obsidian 750D.

So ultimately I gave up on this project. Too many complicating factors conspired to keep it from functioning the way I’d hoped. The last update to this project was posted almost two years ago. The four WD Blue 1TB hard drives are now inside my primary system, in a chassis that properly supports a multi-HDD setup: the NZXT H440. The 60mm Noctua fans were moved to other systems, including a NAS I built into a 3U chassis.

The custom chassis currently sits empty while I decide what to do with it. I have a couple of ideas in mind, and when I do repurpose it, I might see whether Protocase can cut just a new front and back panel for it.

In short, this project turned out to be an exercise in overthinking, with heavy doses of inadequate research and too little consideration of other options. A strong desire to do something custom overrode any consideration of whether that was the best course.

Back to the beginning

The path to this project started with an experiment on whether an external eSATA enclosure could be used as a boot device. I had little reason to think it wouldn’t work, but I couldn’t find an answer from anyone who’d actually done it. I suspected that either no one had thought to try it or those who did never wrote about it. As it turned out, it worked.

Not long after, I ordered an external RAID 1 enclosure for Absinthe. That freed up a ton of space inside the case and made cable management significantly easier. Absinthe has since been upgraded a few times and now uses an M.2 SSD as its primary storage, which requires no cables. The external enclosure is currently unused, but that might change soon to give my wife an alternative place to store her games library.

As I’ve said before, the only way to make cable management easier is by reducing cable bulk in the case.

This did not come without trade-offs. As I mentioned in the article I wrote on it, you are moving cable bulk from inside your system to outside it. You’re still reducing it overall, since the enclosure needs only one data cable and one power cable, whereas inside the case you need one data cable per drive plus at least one power harness.

The enclosure I bought for my system (not Absinthe) was somewhat problematic. With the aim of moving toward a more robust solution, I purchased two additional single-drive external enclosures to set up in RAID 1 through the SIIG SATA RAID card I had. Based on that experience, I considered doing the same with four drives in RAID 10, but I didn’t want four individual external enclosures. I needed to consolidate them into one to keep the cable bulk on the desk to a minimum.

There are 4-drive cabinets available, including a 4-drive version of the RAID cabinet I bought for my wife, but I decided I wanted to do something custom. Not for any particular reason, really; kind of just for the hell of it.

Rack mounting

The main benefit of rack mounting hardware is consolidation. In one cabinet of however many rack units of height, you can have several systems all together in one vertical space, with a PDU or surge suppressor powering all of it.

Prior to this project, my storage requirements were quite simple: RAID 1. 1TB hard drives are dirt cheap, and 1TB is more storage than most people realistically need for a typical computer (I realize requirements do vary). My wife’s system has seen so many hard drives die under unusual circumstances that I wanted to take precautions: should that happen again, I’d at least be able to recover her system without hours of reinstalling the OS, drivers, and other software, followed by days of her reinstalling her games and everything else. RAID 1 was the easiest solution: two drives that mirror each other.

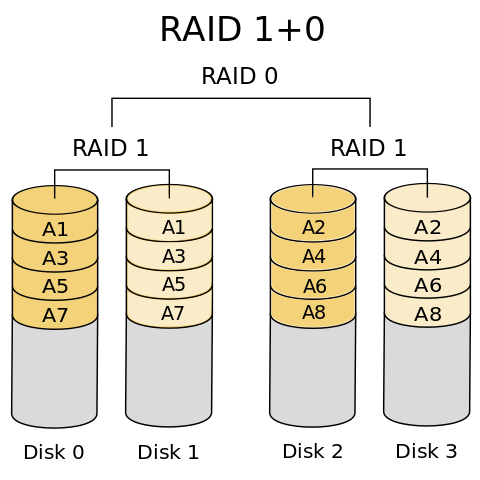

Again, though, the prices of HDDs didn’t escape my notice, so I decided to up the ante for my system by bumping up to RAID 10, which is two RAID 1 arrays with a RAID 0 running across those (image from Wikipedia):

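This provides throughput second only to RAID 0 while adding the redundancy of RAID 1. RAID 10 is also often recommended over RAID 5 because the array is more robust, among other reasons: rebuilding after a drive failure only means copying data from the failed drive’s mirror partner, rather than reading every remaining drive to recompute parity.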
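To make the RAID 10 layout and the robustness comparison a little more concrete, here is a small Python model of a 4-drive array. It is a sketch under stated assumptions: disks 0/1 and 2/3 form the mirrored pairs, data is striped round-robin across the pairs in fixed-size chunks, and the helper names (`locate_chunk`, `raid10_survives`, `raid5_survives`, `usable_tb`) are hypothetical. Real controllers may number disks and lay out chunks differently.

```python
"""Illustrative model of a 4-drive RAID 10 array (a RAID 0 stripe across two RAID 1 mirrors).

Hypothetical sketch: the pair assignment, round-robin striping and helper names
are assumptions for illustration, not any particular controller's on-disk layout.
"""
from itertools import combinations

NUM_DISKS = 4
MIRROR_PAIRS = [(0, 1), (2, 3)]  # assumed RAID 1 pairs; RAID 0 stripes across them


def locate_chunk(logical_chunk: int) -> tuple[int, int, tuple[int, int]]:
    """Map a logical chunk to (mirror pair index, chunk offset within that pair, disks holding a copy)."""
    pair = logical_chunk % len(MIRROR_PAIRS)       # RAID 0: alternate between the mirrors
    offset = logical_chunk // len(MIRROR_PAIRS)    # position of the chunk inside that mirror
    return pair, offset, MIRROR_PAIRS[pair]        # RAID 1: both disks of the pair hold it


def raid10_survives(failed: set[int]) -> bool:
    """The array survives as long as every mirror pair still has at least one working disk."""
    return all(not set(pair) <= failed for pair in MIRROR_PAIRS)


def raid5_survives(failed: set[int]) -> bool:
    """Single-parity RAID 5 tolerates losing any one disk, but not two."""
    return len(failed) <= 1


def usable_tb(level: str, disks: int, size_tb: float) -> float:
    """Usable capacity for equal-size disks."""
    if level == "raid10":
        return disks * size_tb / 2      # half the raw capacity holds mirror copies
    if level == "raid5":
        return (disks - 1) * size_tb    # one disk's worth of capacity holds parity
    raise ValueError(f"unsupported level: {level}")


if __name__ == "__main__":
    for chunk in range(4):
        print(chunk, locate_chunk(chunk))

    # Enumerate every possible two-disk failure and count which ones each layout survives.
    two_disk_failures = list(combinations(range(NUM_DISKS), 2))
    r10 = sum(raid10_survives(set(f)) for f in two_disk_failures)
    r5 = sum(raid5_survives(set(f)) for f in two_disk_failures)
    print(f"RAID 10 survives {r10}/{len(two_disk_failures)} two-disk failures")
    print(f"RAID 5 survives {r5}/{len(two_disk_failures)} two-disk failures")
    print("Usable TB from 4 x 1TB:", usable_tb("raid10", 4, 1.0), "vs", usable_tb("raid5", 4, 1.0))
```

Under these assumptions, RAID 10 survives 4 of the 6 possible two-disk failures (only losing both halves of the same mirror is fatal), while RAID 5 survives none of them. The trade-off is capacity: four 1TB drives give 2TB usable in RAID 10 versus 3TB in RAID 5.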

But then, how to house it? I didn’t want to buy or build a 4-drive cabinet for all of this, though I easily could have. I just really wanted to do something custom, so I started researching ideas. I kind of felt like Adam Savage when he talked about all the research he did on the dodo, which eventually culminated in him building a dodo skeleton purely from his research and notes.

The fact that I was starting to delve heavily into rack-mount projects and enclosures also pushed me in that direction, mainly because there wasn’t much available for a 19″ rack that met my requirements when I started the project (late 2014 into 2015). While trying to figure out what I needed to go custom, I kept looking for available options, because there’s no point in recreating what someone else has already done.

Since then, I’ve built a NAS, and that project illuminated a few potential options I hadn’t previously considered.

The end result

So, back to the original question: was it worth it? That depends on how you measure it. I learned a lot going through all of this, and I discovered a few things I hadn’t known were available.

But the aftermath of a project is what lets you discover whether you were overthinking things compared to your other options. By that measure, I’d have to say the project was not worth the time and money spent.

According to Protocase, the actual quote for just the enclosure at the time of the order was $355. I got lucky: I received an erroneous quote during a glitch in their system, so I was able to get mine cut and shipped for a little under $200. One thing that might have cut down on overall expense would’ve been essentially giving the panels a mesh layout instead of using fans, but that probably would’ve increased the cost of the enclosure by more than the cost of the 60mm fans, due to the extra machine time needed. A couple of large cutouts, over which you’d install your own mesh, would likely be much better if you don’t want the fans.

So for $355, what is available off the shelf? A lot.

While designing the enclosure, I still kept watch for something suitable off the shelf. I discovered two enclosures that would’ve been perfect if not for their availability: the Addonics R14ES and R1R2ES. Both were priced under $300 and came with the interface card, fans, and power supply.

One item I pointed out earlier was the 4-drive 1U rack-mount enclosure by iStarUSA, currently available through Amazon for around $300. It also comes with a power supply and fans (only two 40mm fans at the rear). I’d need to add the port multiplier and SATA cables. The Addonics and iStarUSA enclosures also allow for easy hot-swapping.

So in the end, I went ahead with the custom enclosure only because of the glitch in Protocase’s system. Protocase very easily could’ve decided not to honor the price I was quoted, in which case you would’ve read about that here.

But beyond that, you’re better off looking for something off the shelf that can be used outright or adapted rather than going custom. If you don’t want the iStarUSA chassis listed above or any other iStarUSA option, you can find a used rack chassis and adapt it. If you need it for desktop use instead of a rack, find a chassis with removable ears. Then just add hot-swap bays (optional), a port multiplier, and a power supply.

Basically, as I’ve said in another build log: exhaust off-the-shelf options before going custom, and be ready to abandon your custom option if a better off-the-shelf one presents itself.

Again, this project was an exercise in overthinking, inadequate research, and too little consideration of the alternatives.
