Build Log:

For some reason, stability had been an ongoing concern with this setup. What confused me is that stability wasn’t a concern before I built the rack and got everything mounted in it. So I had a hypothesis.

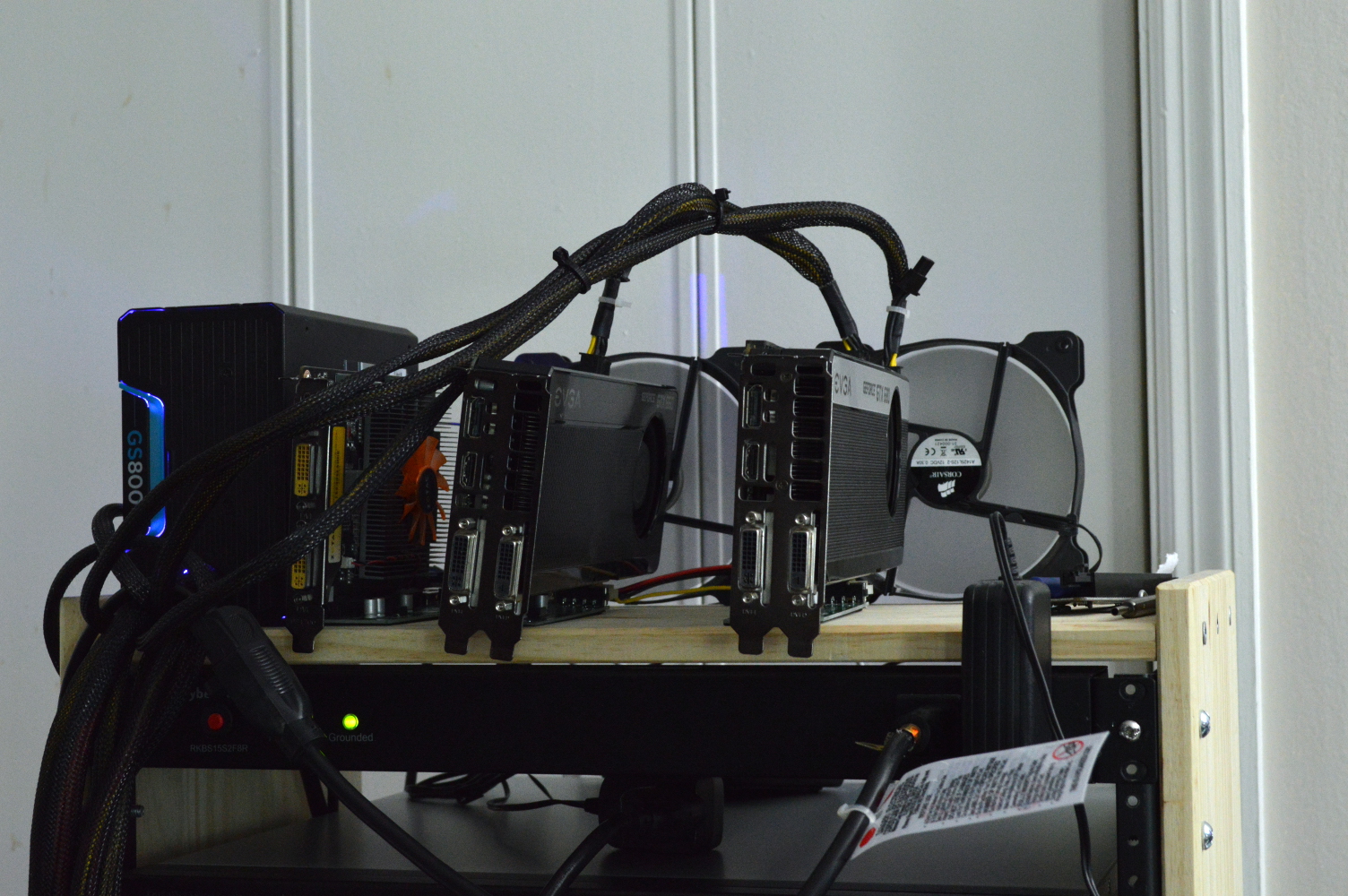

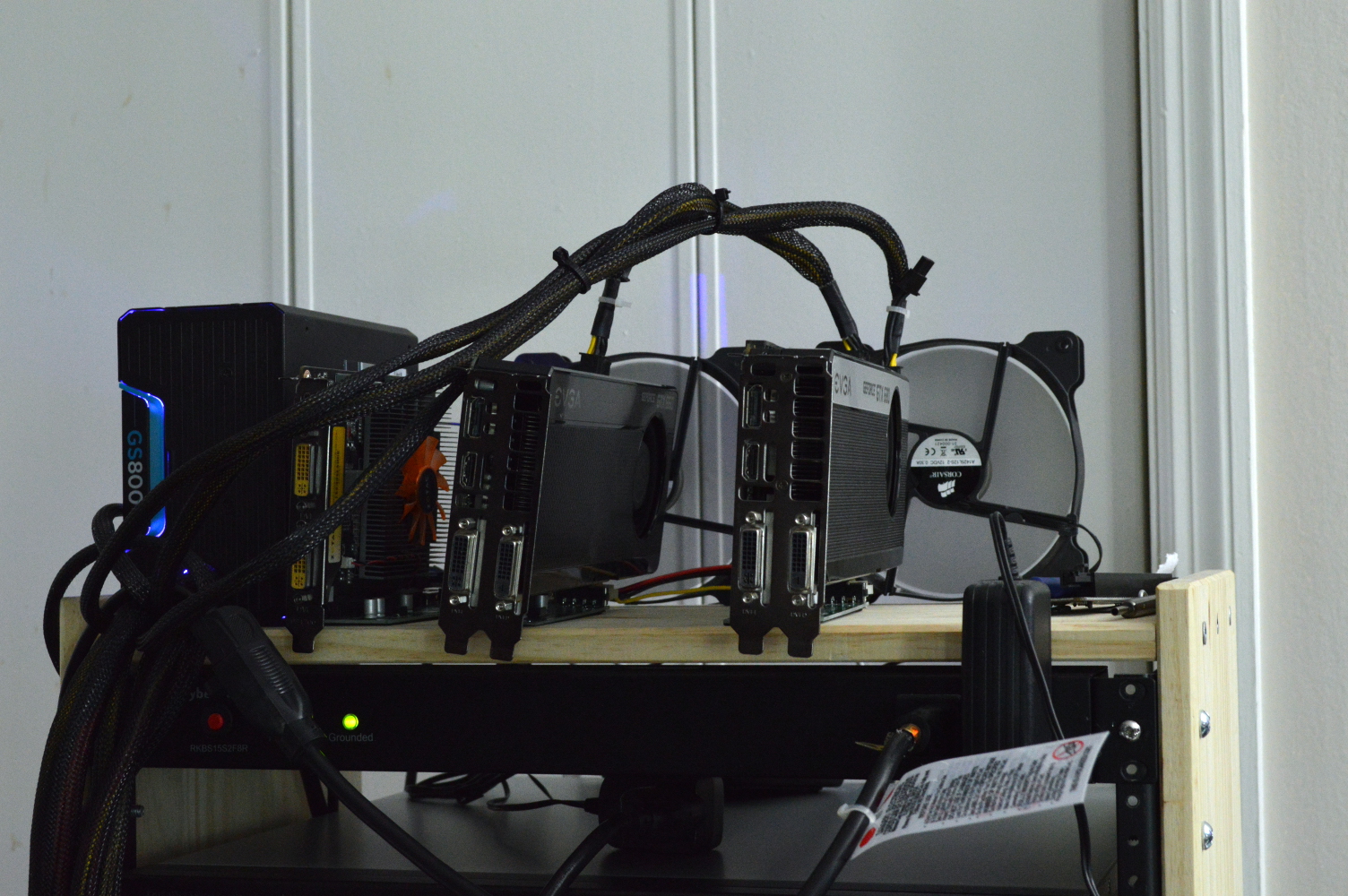

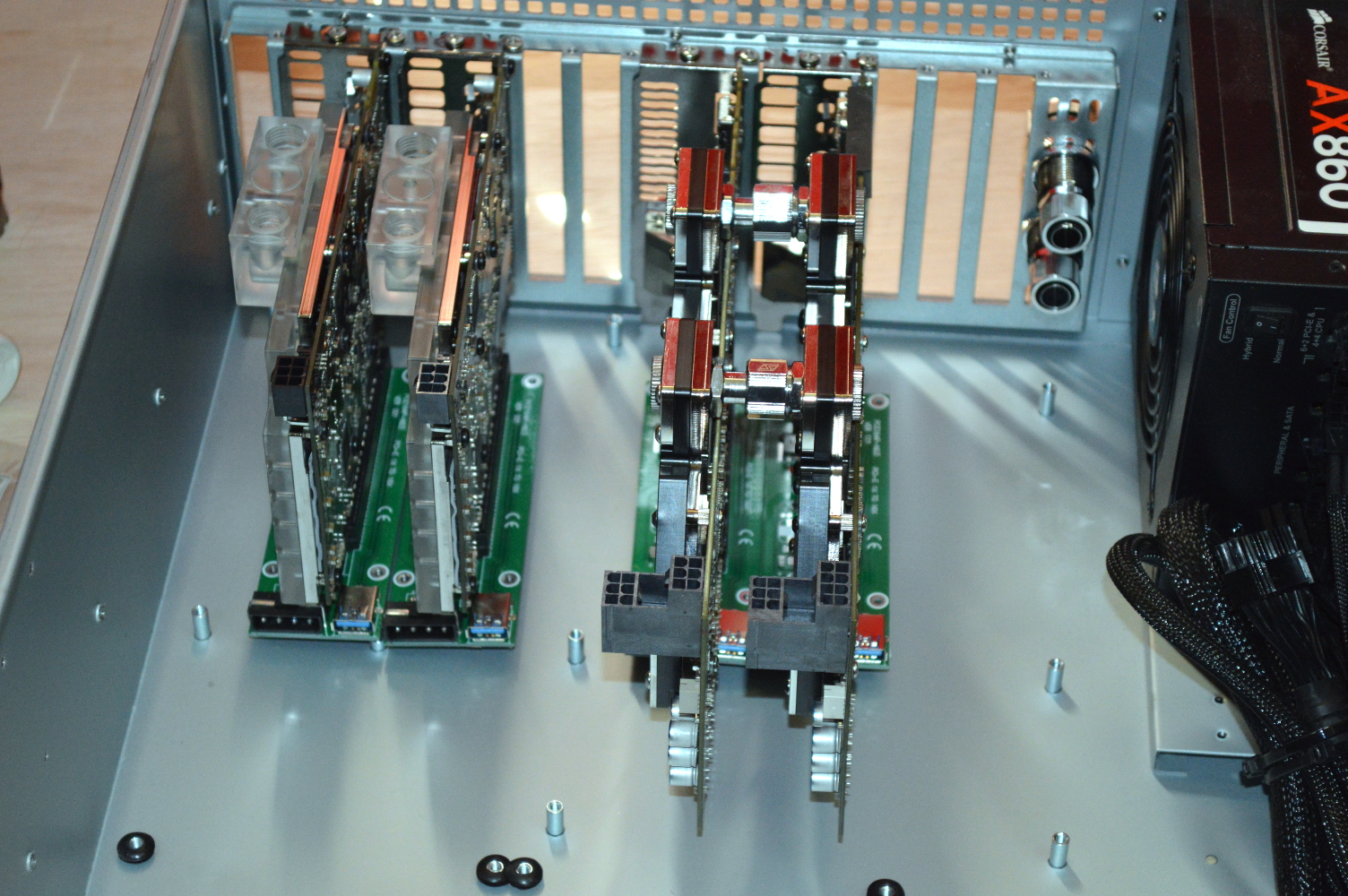

First, this was how I originally had things set up: outside the 4U chassis to begin with, then mounted in that chassis.

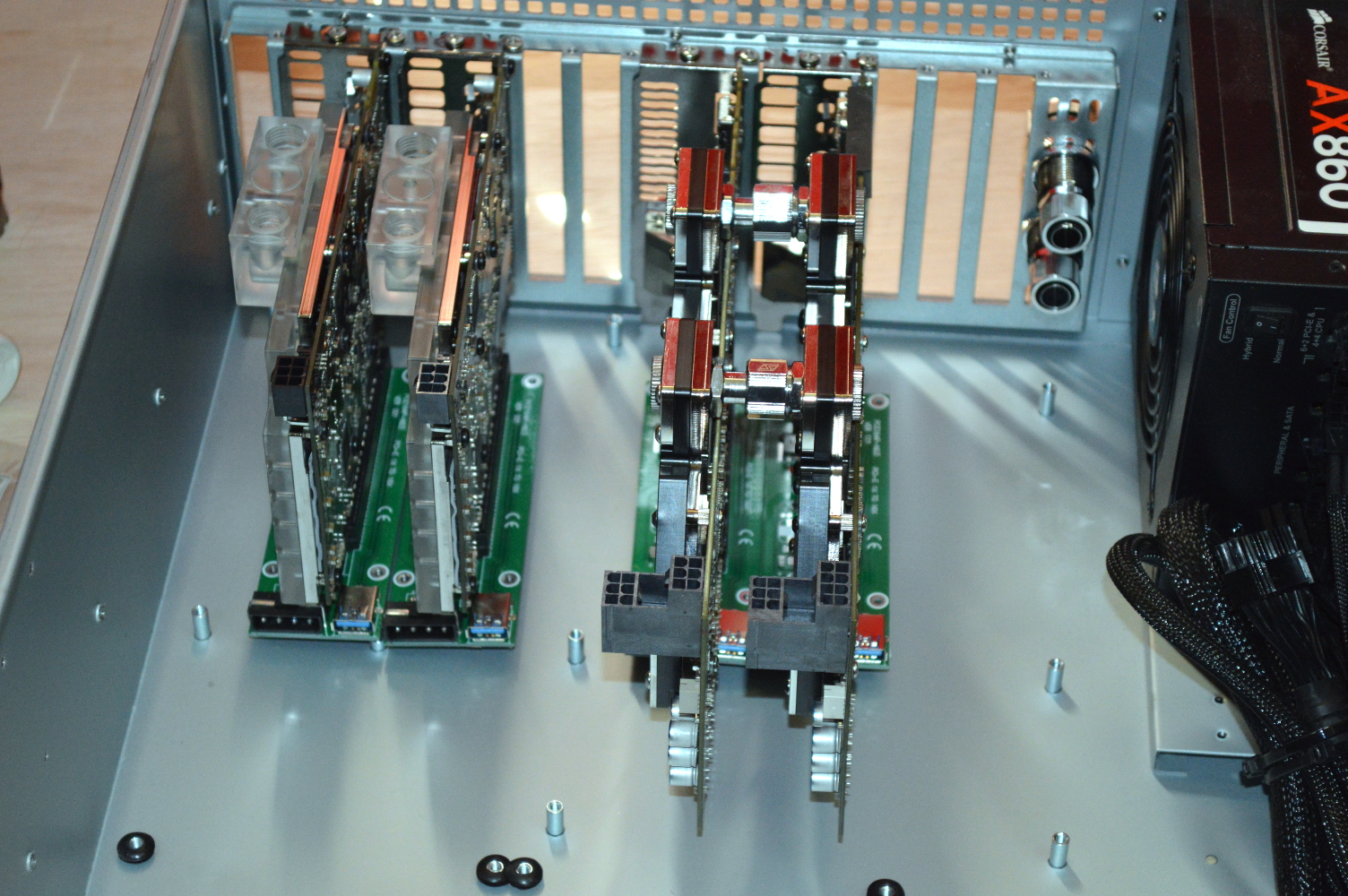

In the top picture, from left to right, are a Zotac GT 620, an EVGA GTX 660 SC, and an EVGA GTX 680 SSC. In the bottom picture, the middle card is a Zotac GTX 680 AMP Edition.

The graphics cards were just sitting in the extenders. Possible connectivity issues came to mind. The graphics cards were also just hanging off the back of the chassis. And the extenders were against each other next to the cards. When they were originally mounted into the chassis, the cards had some space between each other as well, which likely gave them some breathing room.

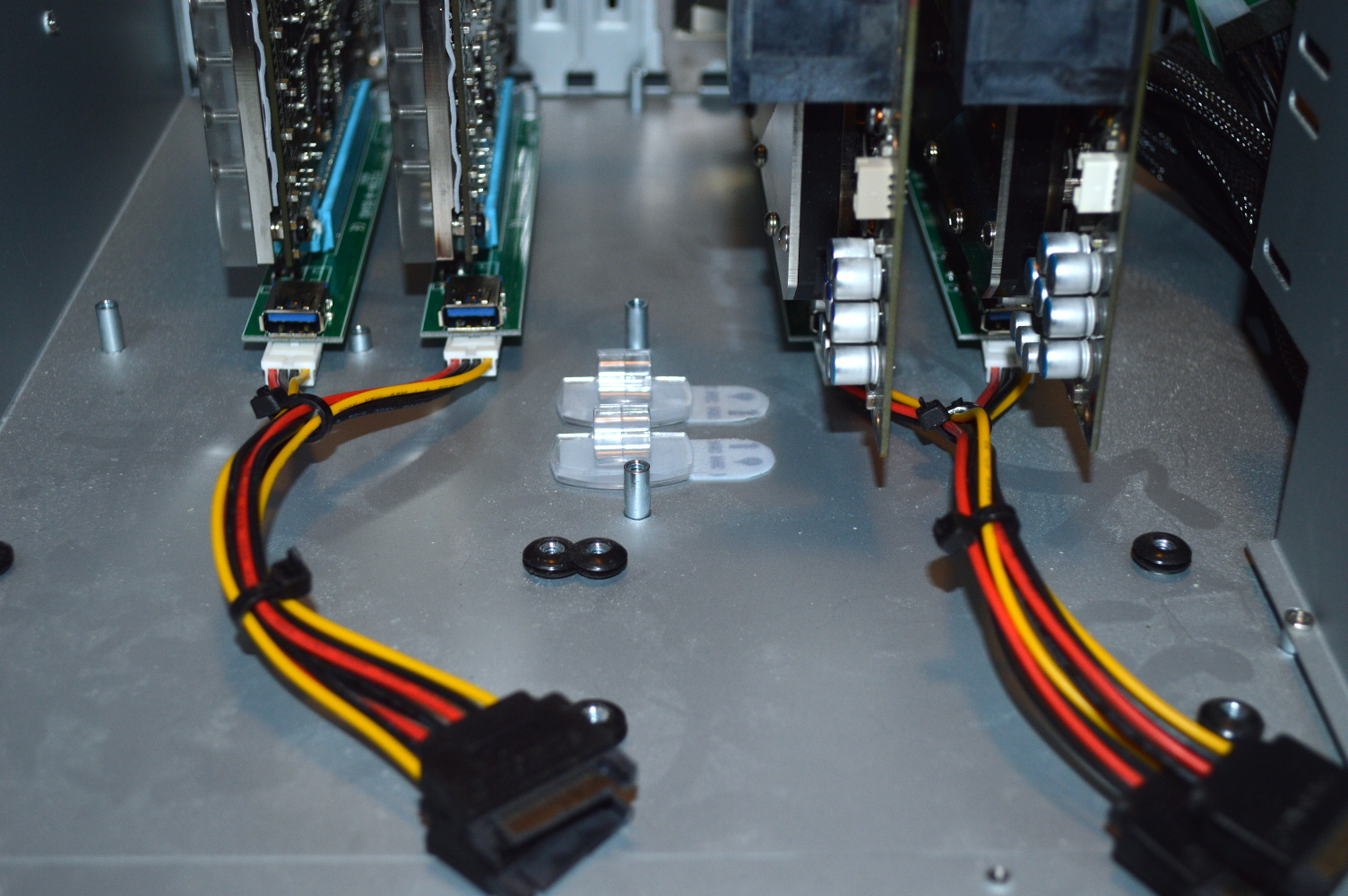

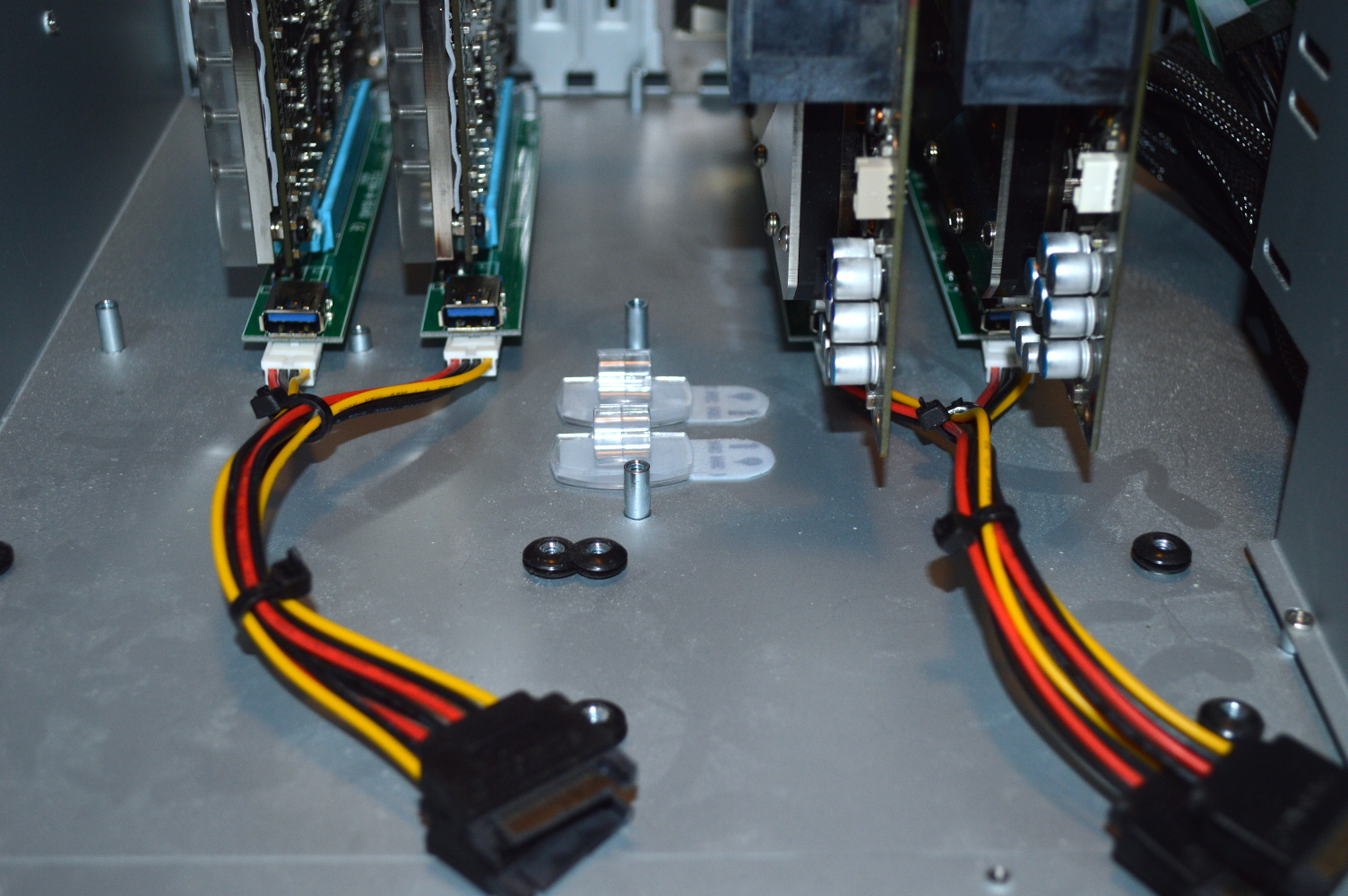

So, again, I wondered if this caused connectivity and stability issues. One of the good things about how popular Bitcoin mining used to be is the prevalence of other PCI-Express extenders, including a design that uses a thinner PCB and a 4-pin floppy drive power connector. One version is distributed by a company called Vantacor (with the option of a 30cm, 60cm, or 100cm USB cable), and another by a company called Electop.

So in the end I ordered 4 of the Electop extenders, since they were less expensive and I didn’t care about the cable lengths. The shorter cables, in fact, would be better than the meter-long cables that came with the other ones. So what was the result?

* * * * *

This revisit coincided with a couple other things happening at the same time. First was a build for a radiator box that could be put on the rack to replace the radiator panels that were currently taking up 8U of rack space. The other was maintenance coming due.

As of the time I tore it down, the system had been running continuously for about 7 months. Temperatures were never a problem. Stability was.

With everything apart, I was able to troubleshoot the issue. In tearing everything apart for maintenance, I replaced the x16 side of the extensions to the graphics cards and remounted everything. I had also acquired additional fittings in the meantime and had leftovers from other projects, so I was able to give the box a much cleaner tubing layout.

I was sure the thinner adapter boards would eliminate the extenders as a variable in the stability problem, and would confirm that they weren’t the only variable.

The right-angle adapters on the x1 sides were the other variable. The blue right-angle extension cables I had employed weren’t a problem, but the smaller plugs were. So I ordered another pair and waited for them to arrive. Then to ensure I had a clean setup to work with, I reinstalled Linux to the system.

Except the stability issue didn’t go away.

* * * * *

Let me first fill in some details. Over the time the rack was up and running, I made a few changes to the setup, in part because stability was such a problem.

First, I attempted to upgrade the graphics host to use the spare 990FX mainboard. I purchased an FX-8320E and some memory to go along with all of that, with the intent of having a better processor powering the graphics cards.

At the time, the spare mainboard was an ASRock 990FX Extreme6. And it would not POST when it had all four graphics cards plugged in. With two of either the GTX 660s or GTX 680s plugged in, it worked fine. One 680 with one 660? No POST. So I swapped it over to the Gigabyte 990FXA-UD3 mainboard I had in Beta Orionis. It would POST with all of the GPUs plugged in, but it wouldn’t stay stable with all four cards — the driver would report that one of the GPUs was lost or it would freeze the system.

So with the maintenance that came up, I attempted again to get all four cards working with the Gigabyte mainboard, but it wouldn’t have it. Regardless of what I tried, it wouldn’t take.

So I moved the connectors and plugged everything up to one of the X2 mainboards, just to get something stable operating off just one mainboard. And the X2 mainboard I selected was the Abit board that had only one x16 slot with three x1 slots, meaning it wasn’t going to try to do anything SLI-related with regard to the NVIDIA driver — the game servers have been moved into a virtualized environment. And the other X2 mainboard is the MSI K9N4-SLI, and I don’t recall ever really being able to keep all four graphics cards stable on that board, hence why I swapped in the FX board.

Unfortunately, the stability concerns didn’t go away. At this point I started to wonder if the cause was the Berkeley software (BOINC), as I didn’t recall seeing any stability concerns with Folding@Home.

* * * * *

At the same time, I planned an upgrade for this. The X2 is a 10+ year-old dual-core processor, meaning it barely scrapes by for what I’m doing. For some BOINC projects, such as MilkyWay@Home, it could power everything just fine. For others, such as GPUGrid when attempting to use more GPUs than there are CPU cores, not so much. For Folding@Home, though, it was holding things back because of how CPU intensive that can be even if you’re running only GPU slots.

So I considered looking at a board that was built for this kind of thing. After discovering the ASRock PCI-Express extenders, I knew that ASRock made mainboards for Bitcoin mining. I was more familiar with their Intel offering, the ASRock H81 Pro BTC board, and gave it serious consideration despite the fact that it meant spending almost 400 USD for an adequate setup. But then I learned they also had FM2+ options. The price difference between the FM2+ and H81 mainboards was only 5 USD on NewEgg, favoring the FM2+, but the difference in the price of the processors was more significant.

At minimum I’d need a quad-core processor for this — one core per graphics card — to keep the CPU from being a major bottleneck if I turned this exclusively toward Folding@Home. Intel Haswell i5 processors are still well over 200 USD, but the AMD A8-7600 Kaveri quad-core was only 80 USD on NewEgg. So the choice was clear.

Now one could say that I could go with a Haswell i3-4130, but HyperThreading isn’t the same as a physical core. And it’s the physical cores that will matter for this application more. This makes the AMD APU a lot more cost effective all-around: quad-core at a decent clock speed with integrated GPU. Even if I don’t put the integrated GPU to any tasks, it’ll at least be a GPU separate from the workhorses.

There’s something else as well.

* * * * *

I moved elsewhere in the KC metro. As part of the move, I completely tore down the rack and took it apart. The cabinet is no more. I do still have the rails, so I can build another cabinet. But I went with a different plan.

On the Linus Tech Tips forum, a member asked about Mountain Mods. I mentioned that I’d used one of their pedestals to make a radiator box, but that I’d also considered “moving another setup I have into one of their Gold Digger chassis”. Not long after the move, I took advantage of some freed up PayPal credit to order the Ascension Gold Digger. I would’ve preferred the U2UFO Gold Digger so I’d be able to have the radiators sideways (the Ascension keeps them vertical) but I needed the dual power supply support.

And that’s because I figured out the stability problem: power.

It sounds odd, especially given that the stability problems only appeared with BOINC and never with Folding@Home. But I think Folding@Home was never taxing the cards to the point where they required more power, while the BOINC jobs were. And they were likely sending the 4-card setup beyond the power threshold that the AX860 could provide.

OuterVision’s power supply calculator puts a setup with two GTX 680s and two GTX 660s at about 800W of power draw and recommends a 1,000W power supply to cover it. And the GTX 660s and GTX 680s are all factory overclocked, meaning combined they are likely exceeding the AX860’s capability.

I considered getting a 1200W power supply or larger, or swapping out for the 1000W power supply in my personal computer. But then I realized that I still had the Corsair GS800 lying around. I decided to stick with the AX860 and add another power supply along with it. Paying the extra 60 USD for the larger chassis that supports two power supplies is worth it in this instance, compared with saving 60 USD on the chassis but spending another 200+ USD on a better power supply. I have an Add2PSU from the original setup that I can use to synchronize the power supplies.
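As a rough sketch of the arithmetic behind that decision, the Python below compares the calculator's figures against the two power supplies. The wattages come from the post (800W estimated draw, 1,000W recommended, 860W for the AX860, 800W for the GS800). The 1.25 headroom factor is only an assumption inferred from the calculator's estimate versus its recommendation, and the function names are hypothetical. Summing the two supplies is also a simplification, since each supply only feeds the devices wired to it.

```python
# Figures taken from the post. The headroom factor is inferred from
# OuterVision's 800 W estimate vs. its 1,000 W recommendation; it is an
# assumption for illustration, not a published rule.
ESTIMATED_DRAW_W = 800
RECOMMENDED_PSU_W = 1000
AX860_W = 860
GS800_W = 800


def headroom_factor(estimated_w, recommended_w):
    """Ratio between the recommended PSU size and the estimated draw."""
    return recommended_w / estimated_w


def is_adequate(capacity_w, load_w, factor):
    """True if the capacity covers the load plus the headroom factor."""
    return capacity_w >= load_w * factor


factor = headroom_factor(ESTIMATED_DRAW_W, RECOMMENDED_PSU_W)

# AX860 alone against the whole four-card setup.
single_ok = is_adequate(AX860_W, ESTIMATED_DRAW_W, factor)

# AX860 plus GS800, treating their capacities as one pool (a simplification:
# in practice the load has to be split sensibly between the two supplies).
dual_ok = is_adequate(AX860_W + GS800_W, ESTIMATED_DRAW_W, factor)

print(f"headroom factor: {factor}")
print(f"AX860 alone adequate: {single_ok}")
print(f"AX860 + GS800 adequate: {dual_ok}")
```

By this estimate the AX860 on its own falls short of the recommended capacity, while the pair clears it with room to spare, and that is before accounting for the factory overclocks.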

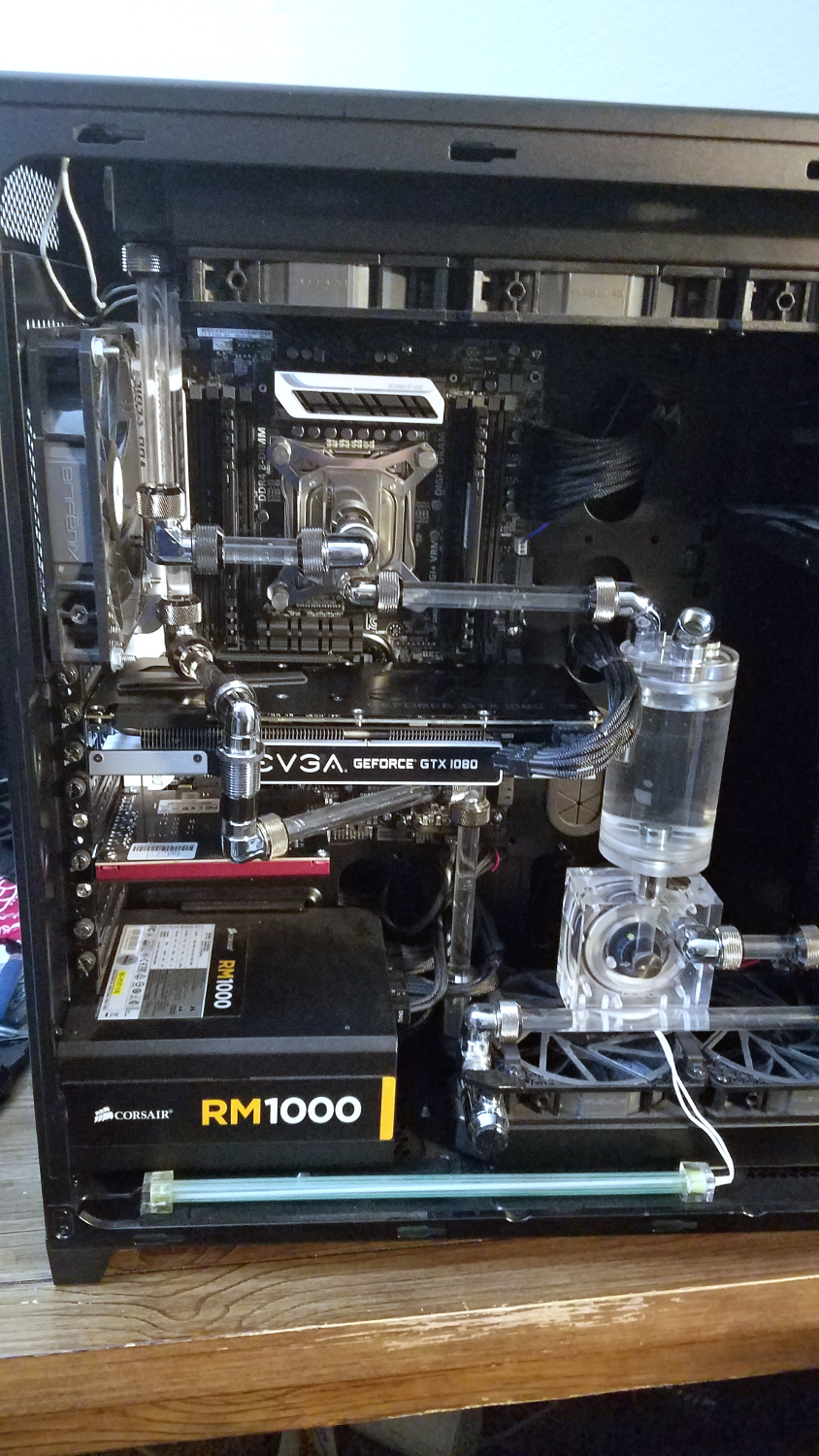

So after settling into the new apartment more, I bought a lot of distilled water for flushing blocks and radiators, and went to town on migrating everything into the new setup.

* * * * *

While I bought an ASRock FM2+ BTC board and an A8-7600, I didn’t go with that setup initially. Instead I stuck with the FX-8320E and the Gigabyte 990FXA-UD3 board. And I kept with the Noctua CPU cooler for the moment while keeping my sights set on swapping in a water block later.

And I discovered that I need to rethink the Koolance PMP-420:

Yes, that pump. The one I declared to be too noisy to be useful. Well, it turns out that if you suspend it on some spongy material and let all the air get out of the loop, it is actually not too bad in the noise department. I’m still not sure I’d want that pump in a system that is right next to me, but in a good chassis that is padded with noise-absorbing material, it likely wouldn’t be a major problem. It definitely fits this use case, though, as the vertical radiators only add to the resistance in the loop, making a more powerful pump necessary.

So with everything assembled into the Ascension Gold Digger, the graphics cards hanging from above — though technically only two really needed to be hung up there — and all the tubing connected up, the system was brought online for the first time in months. The cards were divided: the two GTX 680s were on the AX860 along with the pump and fans, and the two GTX 660s were on the GS800 with the mainboard.

Initially I had Folding@Home running on it just to get some kind of stress going on the loop and the system. Then I turned to BOINC to see if the stability issues would continue to manifest. But unfortunately I wasn’t able to get MilkyWay@Home working on Linux. The GPU jobs just kept failing with “Computation error”. So I installed Windows 10 Pro to the system and BOINC and let it run.

And not long after that, the mainboard seemed to have died. The system completely locked up and refused to POST after resetting it. I’m not sure whether the power delivery overheated and shut everything down or something else. I was basically forced to swap in the Kaveri board at that point, and I swapped it back to Linux.

Except the stability problem didn’t go away. BOINC still refused to remain stable. It would hard lock after a few hours, but thankfully resetting it got it to POST and boot. And Folding@Home again never saw the same problems. So, I guess this will be relegated entirely to Folding@Home.

But the AMD A8 can’t power all four graphics cards for Folding@Home. So I need to split this off into two systems. Thankfully for the Ascension Gold Digger, that’s just a matter of ordering a few more parts to convert it into the Ascension Duality. And there was another issue I needed to address.

Time to revisit Absinthe. Current specs for immediate reference:
