- Nasira – A FreeNAS build

- Nasira – Part II

- Nasira – Part III

- Nasira with a little SAS

- Moving Nasira to a different chassis

- When a drive dies

- Adding an SLOG… or not

- Coming full circle

- Throwing a short pass

- More upgrades but… no more NVMe

- Check your mainboard battery

Ever since first reading about them, I’ve wanted to set up a NAS in my home. They are a great resource for mass storage — pictures, videos, and backups from the home computers. The trouble is that, until recently, building a decent one was financially out of reach. Now that the upgrade on Absinthe has freed up the Sabertooth 990FX R2.0 board and its 8-core AMD processor, however, the project can get started.

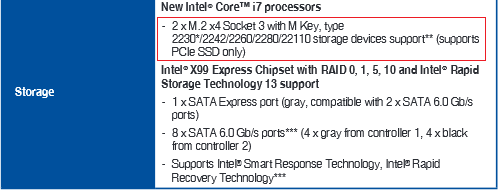

The goal of the project, ultimately, is 32TB of storage in mirrored pairs, resulting in 16TB total accessible storage running on FreeNAS. The Sabertooth 990FX R2.0 board can handle 8 SATA connections and 32GB of RAM. And the AMD FX processor lineup supports ECC memory out of the box.

As with every build log, I will be ranting herein to explain some of my choices and philosophies. You can either read through if you’d like — you might learn something along the way — or just scroll through if you’re here for the pictures.

CPU: AMD FX-8320E

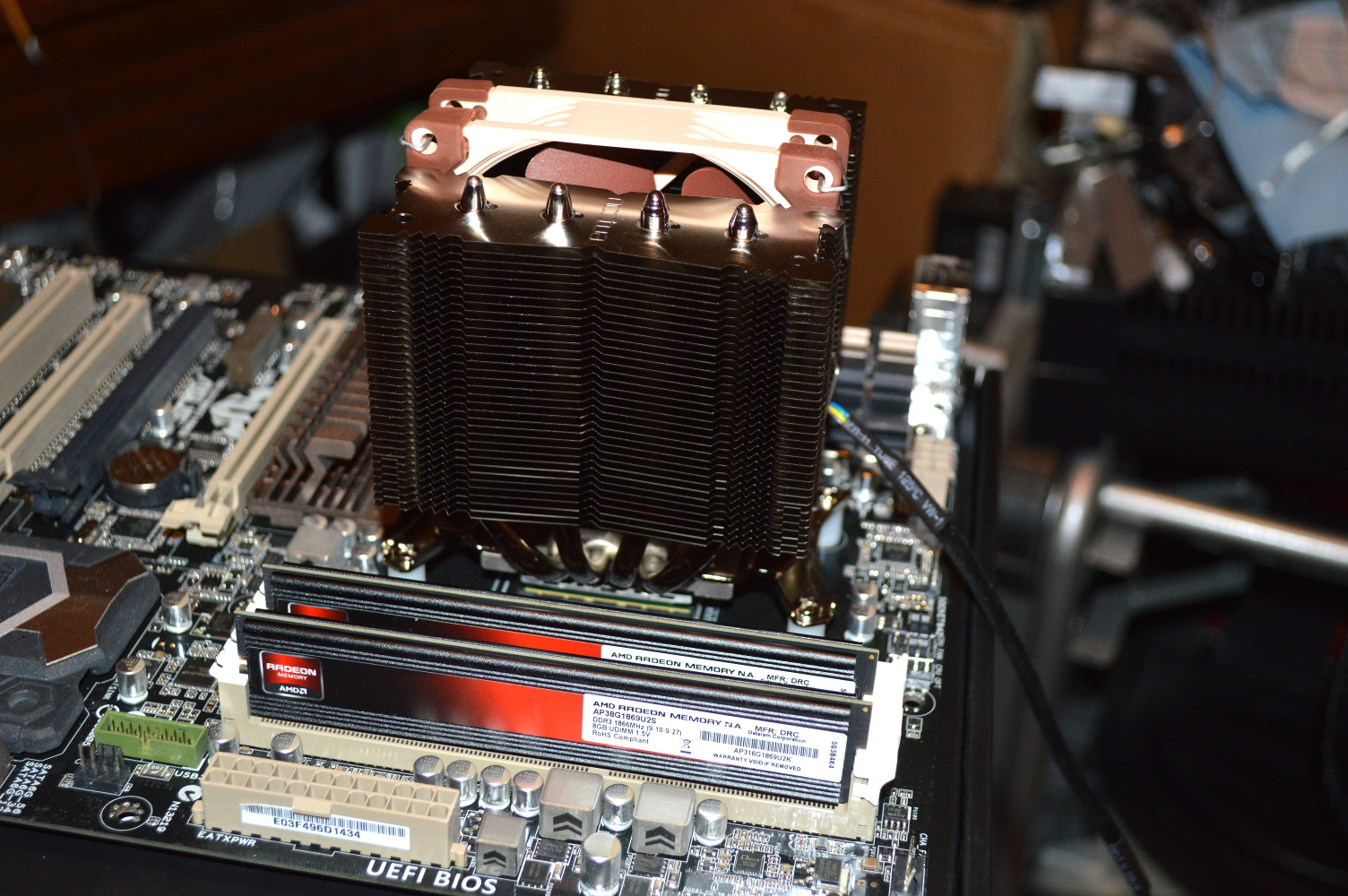

Memory: 2x8GB Crucial DDR3-1600 ECC

Mainboard: ASUS Sabertooth 990FX R2.0

Chassis: PlinkUSA IPC-G3650X 3U

Power supply: Corsair CX750M

Storage: 4TB in 2-drive pairs (32TB raw, 16TB effective)

Storage will be 4TB WD Red drives in mirrored pairs. Initially I’ll start with just one pair since the drives are 150 USD each, then acquire the additional pairs down the line. One idea I recently discovered, and may take up, is mixing brands. This spreads out the risk of drive failures since you’re guaranteed to get drives from different lots, though there is always the slight possibility of receiving drives from a bad lot. HGST is a brand I’ve seen recommended a bit, though their drives are more expensive. Seagate is recommended as well, and their drives tend to be less expensive than the WD Red.

As this is a NAS and will be rack mounted, the system will be set up with hot-swap drive bays. Specifically, I’m going with two Rosewill hot-swap mobile racks, each of which fits 4 HDDs into 3 drive bays. I consider hot-swap bays a basic requirement of a NAS build, though how essential they are depends on how easy it would otherwise be to remove the drives in question. On a rack system, in my opinion, don’t consider anything but hot-swap bays.

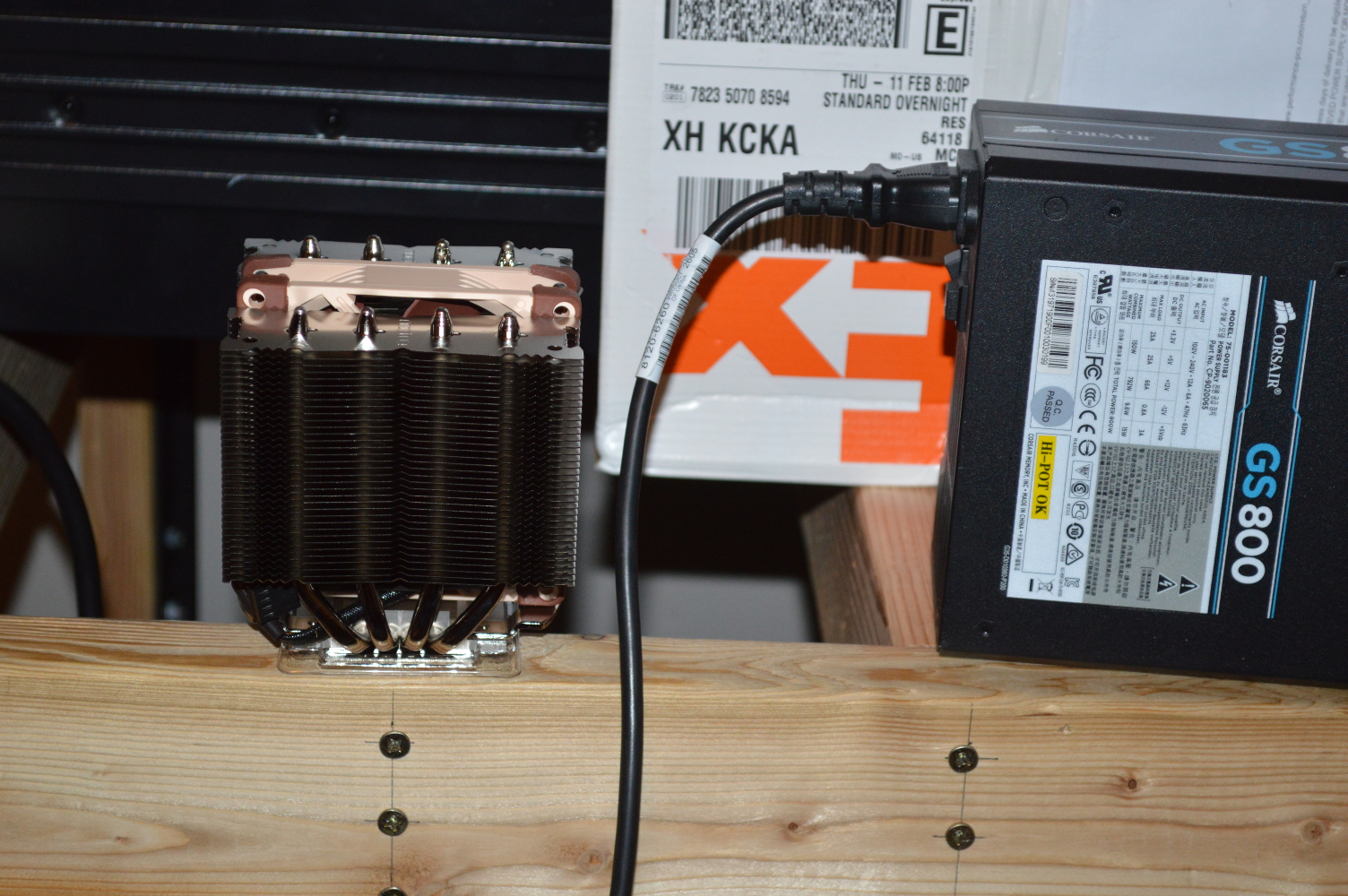

For cooling the CPU in previous server builds, I’ve turned to Noctua for a quiet but effective CPU cooler. And this time I’ll be doing the same with the Noctua NH-D9L. I’m planning to use a 3U chassis, and Noctua advertises this cooler as being fully compatible with a 3U setup.

I’ll be starting with 16GB RAM initially. The general memory recommendation for FreeNAS is 1GB RAM per 1TB of raw storage space, with 8GB as the minimum regardless of storage. But if you come in under that recommendation (e.g. you have 32TB raw storage but only 16GB RAM), the difference it’ll make to performance and stability will depend entirely on how your NAS is used and on the virtual device setup. Plenty of others haven’t adhered strictly to that guideline and have been fine. In general, though, the more memory you can throw at this, the better, and I’ll be doubling this to 32GB eventually.
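To put numbers on that rule of thumb, here’s a quick Python sketch. The function name is hypothetical, and it encodes nothing more than the guideline above (1GB per raw TB, 8GB floor); it isn’t a formula FreeNAS itself uses. For Nasira’s eventual eight 4TB drives it lands at 32GB, which is why 16GB is half the guideline.

```python
# Hypothetical helper encoding the rule of thumb only:
# ~1GB RAM per TB of raw storage, never below 8GB for FreeNAS.
MIN_RAM_GB = 8
GB_PER_RAW_TB = 1


def recommended_ram_gb(raw_tb: float) -> float:
    """Return the rule-of-thumb RAM (GB) for a given raw capacity (TB)."""
    return max(MIN_RAM_GB, raw_tb * GB_PER_RAW_TB)


# Nasira's plan: 4TB drives added one mirrored pair at a time, up to eight drives.
for drives in (2, 4, 6, 8):
    raw_tb = drives * 4
    print(f"{drives} drives, {raw_tb}TB raw -> {recommended_ram_gb(raw_tb)}GB RAM")
```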

And as the list above shows, I’ll be using ECC RAM as well.

ECC vs non-ECC

So if I’ve opted for ECC RAM, which everyone recommends for FreeNAS, why am I about to discuss ECC vs non-ECC? Well, it’s in light of a recent video on Paul’s Hardware in which he builds a NAS using FreeNAS but doesn’t use ECC RAM. In part this is because he used an ITX board with a Sandy Bridge processor. But several commenters pointed out that he’s not using ECC, and many acted like Paul’s data was moments from destruction because of it.

ECC memory is heavily recommended, though not absolutely required. FreeNAS won’t fail to run if you’re not using it, and depending on your use case, your data may not be at any significantly higher relative risk without it. ECC memory is heavily recommended for ZFS simply because of what the ZFS file system does in memory, which is pretty nearly everything. And since ECC memory can recover from some memory errors, whereas non-ECC memory has no recovery capability, ECC is the obviously better choice.
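That recovery capability comes from storing extra check bits alongside the data. As a simplified illustration only, here’s a Hamming(7,4) code in Python: 3 check bits protect 4 data bits, and a single flipped bit can be located and corrected. Real ECC modules use a wider single-error-correct, double-error-detect code (8 check bits per 64 data bits), and the function names here are made up for the example. Non-ECC memory stores no check bits at all, so it has nothing to correct from.

```python
# Simplified illustration: Hamming(7,4), not the wider SECDED code ECC DIMMs use.
# Codeword layout (1-based positions): p1 p2 d1 p3 d2 d3 d4


def hamming74_encode(d: list[int]) -> list[int]:
    """Encode 4 data bits into a 7-bit codeword."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(c: list[int]) -> tuple[list[int], int]:
    """Correct up to one flipped bit; return (data bits, bad position or 0)."""
    c = list(c)  # work on a copy
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s3  # 1-based position of the error, 0 if clean
    if syndrome:
        c[syndrome - 1] ^= 1  # flip the bad bit back
    return [c[2], c[4], c[5], c[6]], syndrome


word = hamming74_encode([1, 0, 1, 1])
word[4] ^= 1  # simulate a single bit flip at position 5
print(hamming74_decode(word))  # ([1, 0, 1, 1], 5)
```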

But the whole ECC vs non-ECC discussion is a bit overblown with regard to ZFS and FreeNAS, almost religious in nature. Doomsday scenarios have replaced objective discussion with many overlooking the fact that data corruption can come from many sources, of which memory is only one. And the examples of how memory corruption can propagate on a NAS are also examples of how it can propagate really anywhere.

I’ll repeat what many have said: ECC memory is better, plain and simple. If it weren’t, or weren’t significantly better than non-ECC memory in the systems where it is typically employed, it wouldn’t exist because no one would buy it. What’d be the point?

Matt Ahrens, one of the co-founders of the ZFS file system, is often quoted with regard to this: “I would simply say: if you love your data, use ECC RAM. Additionally, use a filesystem that checksums your data, such as ZFS.” Many have interpreted this as meaning that you must use ECC RAM with ZFS, and a basic misunderstanding of grammar and syntax would allow that misinterpretation. He’s saying to use ECC RAM. Separately he’s saying to use ZFS. He is not saying you must use ECC RAM with ZFS.

In fact, he says quite the opposite in the same post I quoted. That doesn’t mean it’s not a good idea to use it. Absolutely it is. But will your NAS crash and burn and fry all your data, including your backups, if you don’t use it? It’s extremely unlikely you’ll get that unlucky. And if that does happen, don’t be so quick to blame your memory before determining if it might have been something else.

Despite what seems to be the prevailing belief when discussing FreeNAS, memory is not the only source of data corruption. And even if you’re using non-ECC RAM, I’d still say it’s unlikely to be the cause of data corruption. Cables can go bad. Poor-quality SATA cables can be more susceptible to a phenomenon called “cross talk”. In one of my systems, a 24-pin power extension cable on the power supply went bad, causing symptoms that made me think the power supply itself had gone bad. Connectors on the power supply to the drives could cause problems, including premature drive death. If power delivery to your NAS is substandard, it could wreak havoc. Linus Media Group suffered a major setback with their main storage server after one of the RAID controllers decided to have a hissy fit before they had that system completely backed up.

A NAS should be considered a mission-critical system. And to borrow my father’s phrasing, you don’t muck with your mission-critical systems. And that includes when building them, meaning don’t cut any corners. Regardless of whether you go with ECC or non-ECC memory, it needs to be quality. The power supply should be very well rated for stable power delivery and quality connections (on which Jonny Guru is considered the go-to source for reviews and evaluations). The SATA or SAS data cables should be high quality as well, shielded if possible (such as the 3M 5602 series). You don’t need premium hardware, but you can’t go with the cheapest stuff on the shelf either. Not if you care about your data.

Bottom line, many have used non-ECC memory with FreeNAS for years without a problem. Others haven’t been so lucky. But whether those losses were the result of not using ECC memory has been difficult to pin down. Again, there are many things that can cause data corruption or the appearance of data corruption that aren’t RAM related.

So again, you may not be under any higher relative risk going with non-ECC memory. Your home NAS box is unlikely to see the kind of workloads and exposures to electromagnetic and radio interference common in business and enterprise environments, and it’s those environments where ECC memory is a basic requirement regardless of what the server is doing.

If you can use ECC, go for it. Your system will be more stable in the end, and it’s not hugely expensive — I’ve seen it for under 8 USD/GB. If you can’t, don’t fret too much, because you’re not necessarily at much higher risk. Just make sure the RAM you do use is quality memory. And whether you’re using ECC or not, test it with MemTest86 before building your system and re-test it periodically. Either way, make sure to maintain backups of the critical stuff.

RAID-Z or mirrored

Now why am I going with mirrored pairs instead of buying a bunch of drives and setting them up in either RAID-Z1 or RAID-Z2? Cost, for one. 4TB drives are currently around 150 USD each depending on brand and source. That’s why I opted to start with a pair of drives, and never considered anything else. As I add additional drive pairs, I can expand the pool to include each new pair until I get up to the ultimate goal of 4 pairs of drives.

Using mirrored pairs also allows you to expand your pool with mirrors of different sizes. You can start with a mirror of 1TB drives, then add a 2TB mirrored pair later, and keep adding until you want to replace the smallest pairs in the pool. The benefit here is you get the additional storage capacity immediately. With RAID-Zx, you wouldn’t see the new capacity until you had replaced every drive in the RAID-Z vdev.
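Here is a rough Python sketch of that difference. It assumes simplified capacity math: a mirror contributes its smaller drive, a RAID-Z vdev treats every member as the size of its smallest drive, and ZFS metadata, padding, and reserved space are ignored. The function names are hypothetical.

```python
# Simplified usable-capacity math (ignores ZFS metadata, padding, and
# reserved space, so real numbers will be somewhat lower).


def mirror_pool_tb(pairs: list[tuple[float, float]]) -> float:
    """Each mirrored pair contributes the size of its smaller drive."""
    return sum(min(a, b) for a, b in pairs)


def raidz_vdev_tb(drives: list[float], parity: int) -> float:
    """A RAID-Z vdev treats every drive as the size of its smallest member."""
    return (len(drives) - parity) * min(drives)


# Start with a 1TB mirror, then add a 2TB mirror: capacity grows right away.
print(mirror_pool_tb([(1, 1)]))          # 1
print(mirror_pool_tb([(1, 1), (2, 2)]))  # 3

# RAID-Z1 of four 1TB drives, upgraded one drive at a time to 2TB.
print(raidz_vdev_tb([1, 1, 1, 1], 1))    # 3
print(raidz_vdev_tb([2, 2, 2, 1], 1))    # 3 (no gain until the last drive)
print(raidz_vdev_tb([2, 2, 2, 2], 1))    # 6
```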

This is ultimately why you should use mirrored pairs in your FreeNAS pool and not RAID-Z. If you are buying all your drives up front and don’t ever see adding more drives, only replacing existing ones, then I suppose RAID-Z2 will work well, but you should really reconsider using RAID-Z for one simple reason: disaster recovery.

A drive cannot be recovered faster than its sequential write speed will allow. The WD Red has an internal transfer speed of around 150 MB/s. That means a rebuild of a single drive will take about 2 hours per TB for writing contiguous data. You can expect about the same for reading data as well.
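The 2-hours-per-TB figure is simple arithmetic. This Python sketch assumes a constant 150 MB/s and decimal units (1TB = 1,000,000MB). Real rebuilds rarely hold a constant sequential rate, so treat it as a best case.

```python
# Back-of-the-envelope estimate: assumes a sustained 150 MB/s and decimal
# units (1TB = 1,000,000MB). Real rebuilds rarely hold that rate throughout.


def hours_per_tb(mb_per_s: float) -> float:
    """Hours needed to stream 1TB sequentially at the given rate."""
    seconds = 1_000_000 / mb_per_s
    return seconds / 3600


print(f"{hours_per_tb(150):.2f} h/TB")  # 1.85 h/TB, i.e. roughly 2 hours
```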

How long would a rebuild take? On a ZFS mirror, it’ll take only as long as is needed to re-mirror the drive based on used space. It won’t do a sector-by-sector mirror like a RAID controller. So if you have a 4TB mirrored pair but only 1TB on it is occupied, then it’ll only read and mirror that 1TB. If the writes occur in parallel with the reads, then the rebuild will go a bit faster. For RAID-Z, however, it still needs to read all data from the array. The lost blocks are either parity blocks that must be recalculated or data blocks that must be reconstructed from parity. As such, rebuilding the parity and data means it must traverse everything.

So let’s say you have four drives of 4TB each in two mirrored pairs. You have 8TB total effective space. Let’s assume that 4TB is used, and since ZFS stripes data across virtual devices, we’ll presume an even distribution of 2TB to each mirrored pair, and therefore 2TB on each drive. If you lost one of the drives, the ZFS drivers would only need to read and write that 2TB. At roughly 2 hours per TB each for the reads and the writes, that means probably about 8 to 9 hours to rebuild.

For RAID-Z1 with those four drives, you’ll have 12TB effective space, of which only 4TB will be used. But it’ll have to read 4TB of data and parity to rebuild the lost drive. For RAID-Z2 with those four drives, you’ll have 8TB effective storage, of which 4TB will be used. How much data do you think will need to be read from the remaining three drives? It’ll actually need to read and process 6TB of data and parity since Z2 uses double the parity of Z1. That will slow things down significantly.
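The amount of data each layout has to read in that four-drive example can be checked with a short Python sketch. It makes the same even-distribution assumption as the text and ignores RAID-Z padding and metadata. It only counts the data volume; it doesn’t model how fast each layout can read it. The function names are hypothetical.

```python
from fractions import Fraction

# Counts only how much data a rebuild must read, assuming used space is spread
# evenly (as in the example above) and ignoring RAID-Z padding and metadata.


def tb_to_read_mirror(used_tb: float, mirrors: int) -> Fraction:
    """Mirror: copy the lost drive's share from its surviving partner."""
    return Fraction(used_tb) / mirrors


def tb_to_read_raidz(used_tb: float, drives: int, parity: int) -> Fraction:
    """RAID-Z: read the data and parity held on every surviving drive."""
    per_drive = Fraction(used_tb) / (drives - parity)  # data + parity per drive
    return per_drive * (drives - 1)


layouts = {
    "2x mirror": tb_to_read_mirror(4, mirrors=2),
    "RAID-Z1": tb_to_read_raidz(4, drives=4, parity=1),
    "RAID-Z2": tb_to_read_raidz(4, drives=4, parity=2),
}
for name, tb in layouts.items():
    print(f"{name}: read {float(tb):g}TB")
# 2x mirror: read 2TB
# RAID-Z1: read 4TB
# RAID-Z2: read 6TB
```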

Now we could expect that the rebuild will read the data from the RAID-Zx at the same rate it serves that data to clients. But nothing I’ve found appears to support this idea. One source I did find, however, actually tried to test rebuild times on RAID-Zx compared to mirrored pairs using 1TB drives. And the results were interesting. At 25% capacity, RAID-Z1 with five or fewer drives and RAID-Z2 with four drives were similar in rebuild time to a mirrored pair. Once the capacity got to 50%, though, the difference became more significant.

But the other thing that needs to be pointed out: the recovery times likely reflect no activity on the array during recovery. In mirrored pairs, the performance impact of continuing to use the array won’t be nearly as great. With RAID-Zx, the impact on both read performance and the rebuild is substantial due to the parity calculations.

Again, this is why you should go with mirrored pairs, and why I will be using mirrored pairs. Not only is it easier to expand, the fault tolerance is much better simply due to the faster rebuild times. Sure, if you lose both drives in a mirrored pair, you lose the pool. But if you build the array correctly (by ensuring the drives in any single pair didn’t come from the same lot), the chance of that happening is relatively low.

But then, nothing replaces a good backup plan.

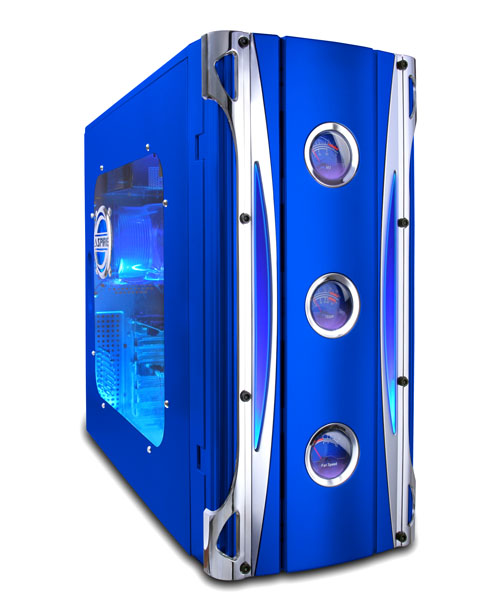

Reviving an old case yet again

This old blue Apevia case has made the rounds a couple times in my build logs. It’s come in handy for a few things, and this time around it’s serving as Nasira’s initial home.

There are a few reasons for this. For one, I don’t have the final 3U chassis selected. I mentioned a model number above, but I’m not entirely sure yet if I’m going to stick with that or go with a different model. The other model I’m considering is the IPC-G3550, also from PlinkUSA. Both have a double set of three 5-1/4″ drive bays, but the second option is slightly shorter — shy of 22″ deep instead of 26″ for the IPC-G3650X — and uses 80mm fans internally instead of 120mm fans to direct internal airflow.

My experience with FreeNAS is also very limited. I was able to play with it in VirtualBox, which I highly recommend. But getting it working in a VM is quite different from getting it working on physical hardware, and the latter is what mattered. I wanted to make sure this would run as expected.

I’m sticking with the onboard NIC for now. I’m aware that Realtek NICs aren’t the greatest, and if I feel I need to, I’ll put in an Intel NIC later, or treat it as the start of a 10Gb Ethernet upgrade. On initial build and testing, I noticed that the Sabertooth’s onboard NIC can pretty well saturate the Gigabit connection — I was getting transfer speeds hovering around 100MB/s, close to the practical maximum for a Gigabit link — so unless performance noticeably drops when my wife and I are trying to access it simultaneously, I’m likely not going to be putting in a different NIC.
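To see why ~100MB/s counts as close to saturated, here’s a Python sketch of the best-case TCP throughput on Gigabit Ethernet. It assumes a standard 1500-byte MTU, IPv4 and TCP headers without options, and full Ethernet framing overhead (header, FCS, preamble, inter-frame gap). File-sharing protocols add their own overhead on top, so real transfers come in lower.

```python
# Best-case TCP goodput on Gigabit Ethernet, assuming a 1500-byte MTU,
# IPv4 + TCP headers without options, and full Ethernet framing on the wire.
LINK_BITS_PER_S = 1_000_000_000
MTU = 1500
IP_TCP_HEADERS = 20 + 20          # IPv4 + TCP, no options
WIRE_OVERHEAD = 14 + 4 + 8 + 12   # header + FCS + preamble/SFD + inter-frame gap

payload = MTU - IP_TCP_HEADERS    # 1460 bytes of file data per frame
on_wire = MTU + WIRE_OVERHEAD     # 1538 bytes of line time per frame

raw_mb_s = LINK_BITS_PER_S / 8 / 1_000_000
goodput_mb_s = raw_mb_s * payload / on_wire
print(f"raw: {raw_mb_s:.0f} MB/s, best-case TCP: {goodput_mb_s:.1f} MB/s")
# raw: 125 MB/s, best-case TCP: 118.7 MB/s
```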

Up front, my primary concern was observing baseline performance with the mainboard and processor, testing the cooling solution, and making sure everything will play nice with the hot swap bays. In other words, I wanted to eliminate as many points of concern as possible.

Initially I also left the FX-8350 in there. I have an FX-8320E, but it’s currently in another server, and I’m not entirely sure if I’m going to pull it. I’ll let the air temperatures in the chassis be the determining factor. For the GPU, I’m using a GT620 I had lying around, another frequent feature of hardware testing when I’m building systems. For the final build, I’ll likely use something else, such as one of my Radeon X1650 cards if I can diagnose and correct its fan problem.

With that, a few observations, and some quick reviews on some of the parts selected.

ECC memory. The mainboard was able to detect the ECC memory without a problem, and MemTest86 was also able to detect that the RAM is ECC. On the FreeNAS forums, a rather arrogant user going by the moniker Cyberjock has a thread discussing FreeNAS hardware recommendations in which he says this:

AMD’s are a mess so I won’t even try to discuss how you even validate that ECC “functions”. Not that “supporting” ECC RAM is not the same as actually using the ECC feature. Do not be fooled as many have been. You don’t want to be that guy that thinks he’s got ECC and when the RAM goes bad you find out the ECC bits aren’t used.

I’m going to guess that he’s never used AMD hardware for this.

In reading through a lot of the posts in which he presents himself as an expert on all things FreeNAS and ZFS, I’ve noticed that he never seems to consider anything beyond memory as a source of data corruption. Again, the whole ECC vs non-ECC discussion has taken on religious overtones, and Cyberjock has propped himself up as the Pope of the Church of ECC with FreeNAS.

Verifying that the error correction function is being used requires actually experiencing a correctable memory error. But then you end up with a version of the falling tree problem: if a correctable memory error occurs and the memory controller corrects it, did it actually happen?

Saying you can’t know whether ECC actually works on AMD platforms requires speaking out of ignorance or delusion. For one, the most powerful supercomputer in the United States relies on AMD Opteron 6274 processors and ECC RAM. And as the Opteron 6274 has the same memory controller as the FX processors, I think it’s safe to say that it works.

PassMark has also tested the ECC error detection in MemTest86 using an AMD FX processor. I’d say that’s good reason to trust that, at least with the AMD FX lineup, ECC RAM is going to work. After all, I’d think they would’ve reported back any problems.

Further, see my previous point about corruption originating from sources other than RAM. Seems few can think beyond that.

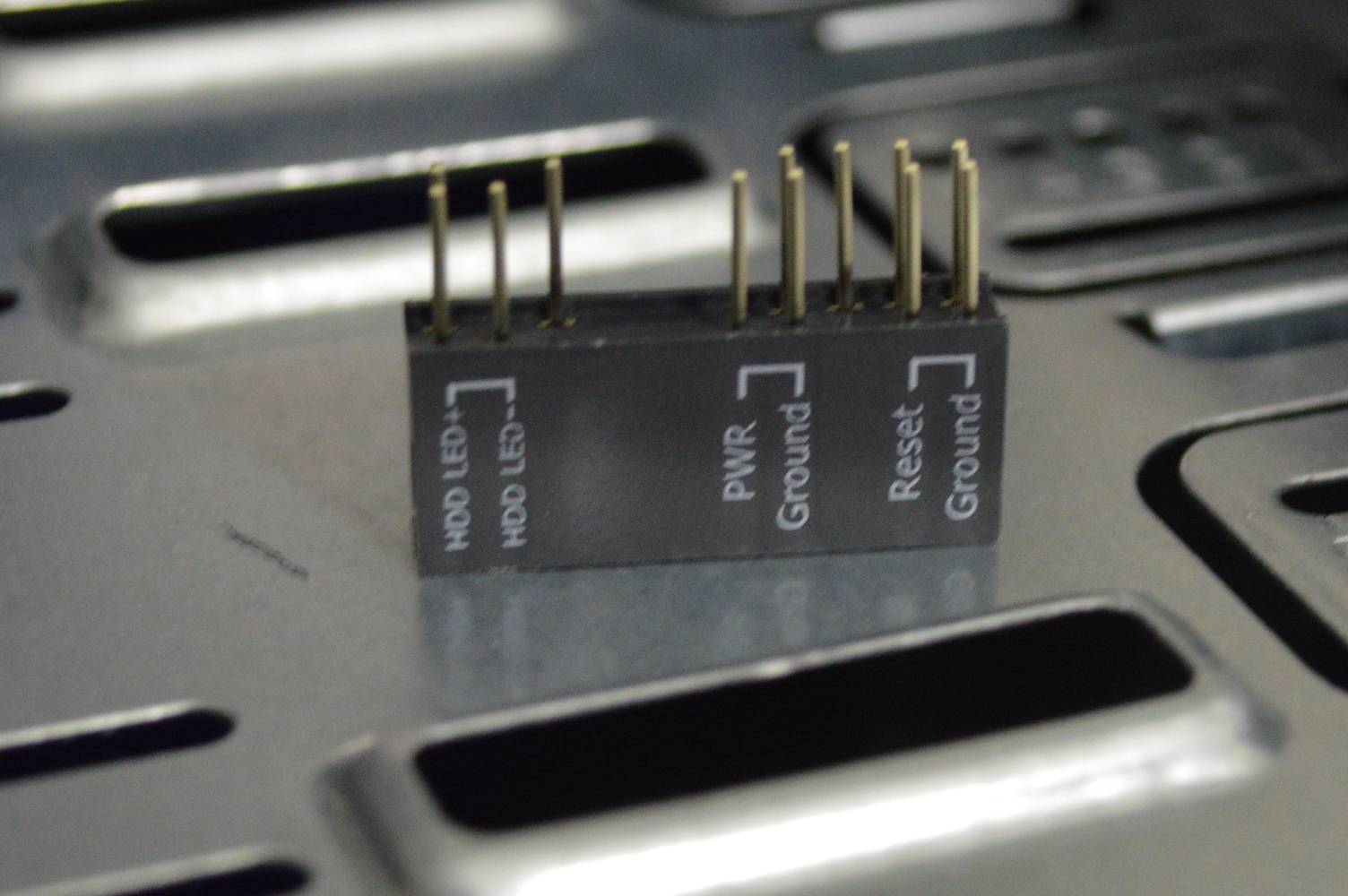

Hot-swap. To use hot-swap bays with the Sabertooth 990FX R2.0 (and other ASUS boards may be the same), enable the option “SATA ESP” for the SATA connectors that will be connected to hot-swap bays. Make sure they’re also set to AHCI mode. For the ASMedia (white) connectors, just make sure they are enabled. They are hot-swap by default.

On the hot-swap bays themselves, the included fan is fairly quiet. That was one of my primary concerns, and while I was willing to change out the fan if I felt it necessary, I’m glad to see I won’t need to.

My only criticism is that all of the connectors are located along the left side when looking at the back. This means you’re almost guaranteed to be working in a tight space when plugging it up if you’re putting it in a tower. Many rack chassis also have the drive bays on the right side when looking at the front, again guaranteeing a tight workspace. Rosewill could’ve made things a little easier by including a Y-splitter for the two 4-pin Molex power plugs. As for the SATA cables, just make sure to plug them into the rack before putting it into the chassis.

Cool and silent running. The Noctua fan on the cooling tower is quiet as expected, and the cooler performs reasonably well. The UEFI reported the processor sitting shy of 60C after the system had been running MemTest86 for over 18 hours (testing the ECC memory). But the processor isn’t going to be under that kind of load running FreeNAS unless I need to rebuild one of the drives. Even then, it won’t (or at least shouldn’t) be under that kind of load for 18 hours, so I shouldn’t have much to worry about here.

And that’s it for this part. All that’s left to do now is just select and order the 3U chassis and migrate everything into it, including installing both mobile racks. I’m also going to be changing over to the shielded SATA cables I mentioned earlier, the 3M 5602 series. I’ll just need to determine the lengths.
