I’ve had the wonderful chance over the last couple months to interact with some very arrogant elitists and fanboys. The AMD vs Intel debate to those individuals is over, and the clear and unchallengeable winner is Intel. There was even one person I encountered for whom the words “buyer’s remorse” had likely just entered his vocabulary, as he basically insinuated that anyone who defends the FX-8350 has it, even going so far as to open his post with a link to Wikipedia’s article on post-purchase rationalization — i.e. “buyer’s remorse”:

The 8350 is clearly not the better choice over a locked i5 for gaming, but people cannot stand to believe they’ve made a bad purchase decision. I don’t blame them; it sucks to purchase something and to find out there’s better options out there, but I don’t like how people try to justify their purchases to no end.

Talk about an elitist and arrogant position to take. Better options being available does not make a particular purchase a “bad decision”. Decisions have trade-offs, plain and simple. It doesn’t matter if you’re talking about cars, computers, espresso machines, or what have you. There are tradeoffs going with AMD, and there are tradeoffs going with Intel. There are tradeoffs going with an AMD graphics card, and there are tradeoffs going with nVidia. That doesn’t make either an inherently bad decision, and to act like going with AMD is an inherently bad decision shows the elitist attitude I’ve seen permeate much of this discussion.

On YouTube, there was one Intel fanboy who made this blanket statement: “AMD is one big failure since they made their first product FACT.” Oh brother. I’m guessing this guy thinks the FX processors are AMD’s “first product”. Goes to show no one is immune to dumb comments, regardless of what side you’re on.

AMD and Intel have both been around for a long time. Advanced Micro Devices (AMD) was founded on May 1, 1969, while Intel (derived from “integrated electronics”) was founded on July 18, 1968. In response to the idiot who made the blanket comment, I said this: “Crap since their first product? So tell me your experience with AMD’s 8086 and 8088 processors. Or did those processors come out before you were born?” That wasn’t AMD’s first product, but the point still stands.

In his book The Political Brain: The Role of Emotion in Deciding the Fate of the Nation, Drew Westen said “When reason and emotion collide, emotion invariably wins”. And without any doubt, many throw a lot of emotion at the AMD vs Intel debate and don’t talk rationally on it — and that holds true for both sides.

Let’s explore history a little. For quite a while, AMD and Intel have traded blows. Sure AMD is lagging behind Intel quite a bit right now, having not released anything groundbreaking in several years. Those who want to believe AMD can never do anything right obviously don’t realize that AMD’s pulled quite ahead a few times over the years, and in one case almost left Intel in the dust.

If you’re running a 64-bit version of Windows right now, you have AMD to thank for that. AMD developed the x86-64 instruction set targeted by 64-bit versions of Windows. It was renamed AMD64 on release and first implemented in the Opteron processor in April 2003, with the Athlon 64 following in September 2003. It would later become known as x64 to distinguish it from x86. Unlike Intel’s original 64-bit implementation, AMD64 is fully backward compatible with x86, which is why an x64 processor can run either a 32-bit or a 64-bit operating system, behaving like a 32-bit processor in the former case.

This put Intel in the position of underdog, and basically means you have AMD to thank for the fact 64-bit desktop processors even exist today. But I don’t expect the Intel elitists to actually do that. Wouldn’t surprise me if they’re trying to find any way of disproving what I’ve just said. Intel didn’t ship an x64 processor of its own until the Xeon “Nocona” in mid-2004, over a year after AMD’s first offering, and its Core 2 line didn’t arrive until July 27, 2006, more than a year after the release of the x64 versions of Windows XP Professional and Server 2003.

Now Intel actually had a 64-bit processor called the Itanium, first released in 2001. It was first announced in 1999, and it quickly earned a nickname: “Itanic”. Compared to other established options, such as the DEC Alpha, it was a disappointment, and the processor definitely did not live up to the hype, meaning its nickname was quite fitting. The Electronic Engineering Journal tells the story in their article “Sinking the Itanic”. Despite that, the Itanium is still around and still selling, for some reason.

While 64-bit computing has actually been around for quite a long time, AMD brought it to the desktop, and it wasn’t the first race that AMD won either, only the most striking because of the time lag by Intel. AMD was the first to ship a 1 gigahertz desktop processor. While Intel tried to contend they actually beat AMD, evidence suggests AMD beat them to it, and it is generally accepted that AMD won the gigahertz race.

But where AMD has always won out over Intel is price to performance. I don’t believe Intel has ever come close — unless you’re buying your Intel processors used or waiting for price drops to close the gap, but even then that still may not close it enough.

The point is that most trash talkers have likely not used AMD products for long, if at all. It’s a lot like the comments you see on Amazon by buyers who trash-talk an entire company after having a bad experience with one product and cannot understand why people would actually give 4-star or 5-star reviews. AMD has contributed significantly to the current state of desktop computing.

Today they’re lagging behind Intel, but that doesn’t erase the history AMD has made.

Bottlenecking

One thing I’ve seen way too much is the use of bumper sticker thinking by many of those in the “millennial” generation. With AMD vs Intel, this is no different. Arrogant, elitist people with superiority complexes armed with terms and phrases they understand only to the point that the phrases basically mean “Intel rules” take to the web in various forums to shout down anyone who dares speak favorably of AMD.

Which brings me to “bottlenecking” — arguably the most misunderstood and misused term when it comes to talking about computers.

Anyone who is familiar with business processes and process and operational management knows the term. With computing I’ve come to the conclusion that most who use the term don’t understand it. They know only what they’ve read: “AMD CPUs will bottleneck graphics cards”. Since bottleneck sounds like a bad thing, and the ignorant Intel owner is looking for a new reason to feel superior to his AMD counterparts, he automatically thinks that Intel doesn’t bottleneck graphics cards, assumes AMD always bottlenecks graphics cards, and so throws around the word as if it actually means something.

The term bottleneck, of course, comes from the neck of a bottle: the thinner neck greatly inhibits the flow of liquid leaving it. A similar term from military strategy is “choke point”. In business, a bottleneck is an inefficiency that slows down an entire process and keeps it from operating at peak capacity. It’s a very important concept to understand from a management perspective.

And it applies perfectly to computers. Your system is full of bottlenecks. An Intel processor will not eliminate bottlenecks, so don’t even bother trying to say such.

Storage is easily the most constricting bottleneck for your system. The read and write throughputs will always hold back your system. The fact that CD, DVD and Blu-ray drives are always significantly slower than even HDDs is why optical drive emulation started coming into vogue about 10 years ago: you can drastically improve game performance by emulating a CD/DVD image instead of using the physical disc. Having an SSD curtails the storage bottleneck even more.

Memory is also a bottleneck. Your processor is always going to be significantly faster than your RAM. Anyone familiar with low-level programming knows that the CPU can perform operations faster on registers than memory. Compiler optimizations take this into account as best as possible, which leads to the common notion that you shouldn’t try to outsmart the compiler, because you cannot do it.

In short any component in your system can be a bottleneck. Having an Intel processor does not change this. Period.

Let’s talk graphics. The common definition of a bottlenecked GPU is one that doesn’t constantly operate at 100% usage while in a game. And with this demonstrably incorrect definition come a number of implications, conveniently always levied against AMD as well. “You’re not getting full performance out of your GPU when using an AMD processor” is the typical argument. The question they never want to examine is whether that actually matters.

Intel elitists always overstate the concern as well. They imply the GPU not operating at 100% usage is a big problem, which it’s not as I’ll show in a moment, while also implying that an AMD system cannot see any kind of performance gain by upgrading to a newer graphics card or to multiple graphics cards, which is demonstrably untrue.

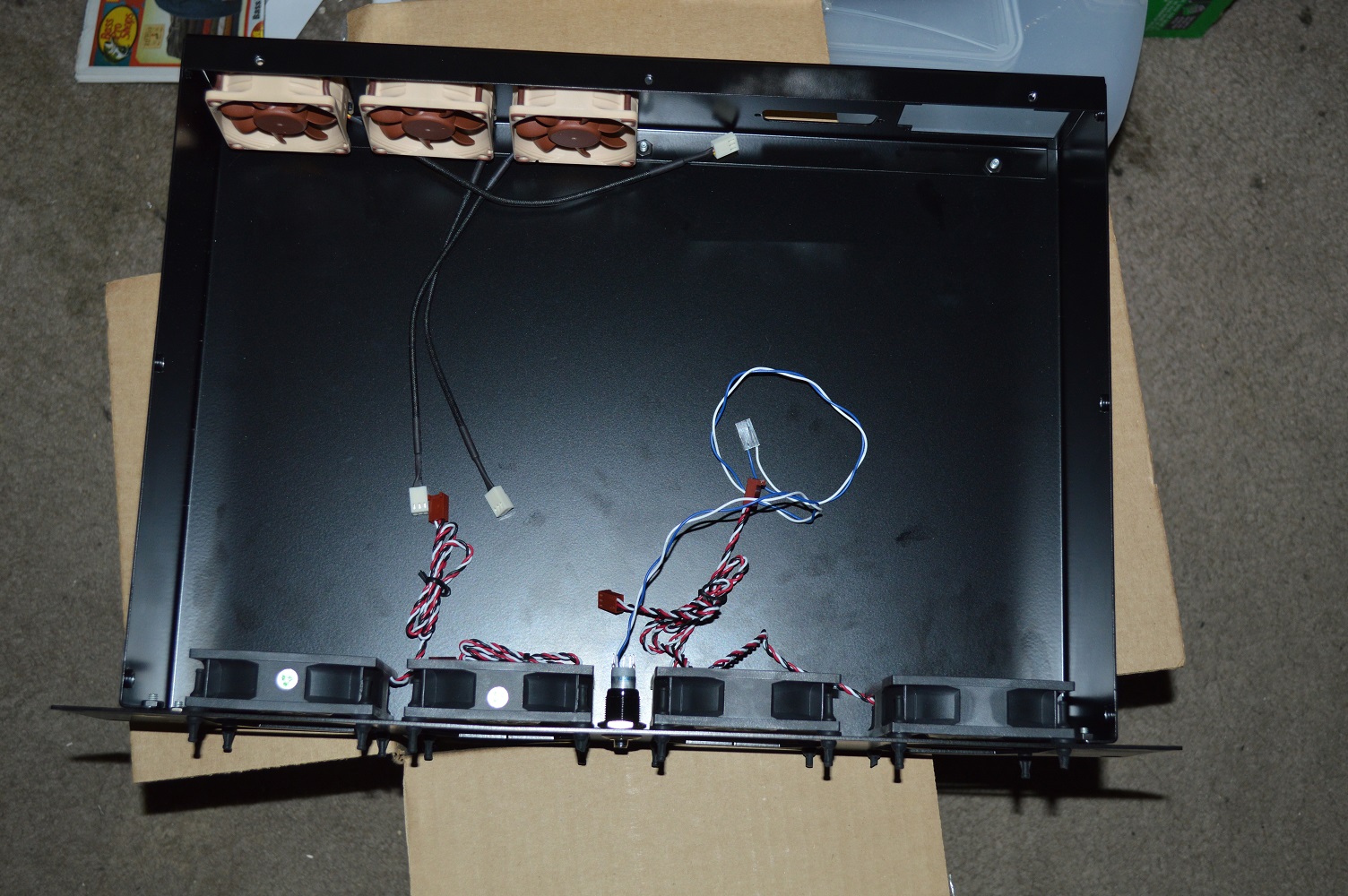

In Beta Orionis, I have two GTX 770s in SLI. Currently I’m working through Bioshock Infinite. In-game GPU usage will fluctuate from as low as 30% to nearing or reaching 100% depending on where I am. Many would say that this shows my graphics cards are bottlenecked because their usage never stays at 100%. What they never take into account is that the GPU may not need to be used at 100%.

JayzTwoCents made a video back in August 2013 where he demonstrated a bottleneck. He wound down his i7-3770K processor to the equivalent of a 1.8 GHz i3 processor running with a single GTX 680 graphics card and demonstrated what a bottleneck actually looks like. Quite simply it was a high CPU usage but low GPU usage. In the video, he showed GPU usage hovering around 50% while the CPU usage was significantly higher. That’s a bottleneck.

If your GPU usage isn’t maxing out, but your CPU usage also isn’t maxing out, and you’ve got the settings on your game cranked up as high as they’ll go, that’s not a bottleneck. It’s just a game that isn’t challenging your system to its limits. It’s not automatically a bottleneck if your graphics cards aren’t maxed out — yet too many people who don’t understand what bottlenecks actually are will say otherwise. “Well you’re not getting the maximum value of your graphics card if it’s not maxed out”. Well, perhaps it’s not being maxed out because it doesn’t need to be.
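The distinction above can be put as a rough rule of thumb. The following is only a minimal sketch: the function name `classify_load` and the 90% and 70% thresholds are my own assumptions for illustration, not numbers taken from the video or from any monitoring tool. It takes one sample of per-core CPU usage and per-GPU usage and labels it.

```python
def classify_load(cpu_core_usage, gpu_usage, high=90.0, low=70.0):
    """Label one usage sample using the rule of thumb described above.

    cpu_core_usage: per-core CPU usage percentages, e.g. [95, 60, 40, 30]
    gpu_usage: per-GPU usage percentages, e.g. [50] or [60, 55] for SLI
    high, low: hypothetical thresholds (assumptions, not measured values)
    """
    # Look at the busiest core, since one saturated thread can hold a game back.
    cpu_maxed = max(cpu_core_usage) >= high
    gpu_maxed = max(gpu_usage) >= high
    gpu_waiting = max(gpu_usage) <= low

    if cpu_maxed and gpu_waiting:
        # High CPU usage with low GPU usage: the CPU is the bottleneck.
        return "cpu-bound"
    if gpu_maxed and not cpu_maxed:
        # The GPU is the limit and the CPU is keeping up.
        return "gpu-bound"
    if not cpu_maxed and not gpu_maxed:
        # Neither side is saturated: the game isn't pushing the system.
        return "headroom"
    return "inconclusive"


print(classify_load([95, 60, 40, 30], [50]))  # the downclocked-CPU scenario
print(classify_load([40] * 8, [60, 55]))      # eight cores, two cards, none maxed
```

A single sample proves little on its own; usage fluctuates throughout a game, so a label only means something if it holds over an extended stretch of play.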

Watching frame rates in Bioshock Infinite with FRAPS with the game maxed out on its graphics settings, V-sync off as well, I always get at least 60 frames per second, with extended periods in the triple digits. Talk to an Intel elitist and they’ll probably tell you what I’ve just said is impossible, or they’ll pull a variant of the “pics or it didn’t happen” trope. Watching the CPU usage on my FX-8350 versus GPU usage, the GPU usage never hit 100%, but neither did CPU usage on any core. The game’s engine spread out its processing across the cores, and SLI allowed its graphics processing to be spread out across both GPUs. If I had only one graphics card, I’d expect it to be maxed out, but as I have multiple graphics cards, it isn’t maxed out.

When I disable SLI, the single graphics card is maxed out, and we see the typical fluctuations in usage percentage that would be expected during the course of a game, with the CPUs not having any problem keeping up. So clearly the FX-8350 isn’t being any kind of bottleneck for the GTX 770, either single or dual in SLI. I have no reason to believe it’ll be one for any other graphics card currently on the market.

Which brings me to arguably the largest bottleneck of any gaming system: the game itself, specifically how it’s engineered. While faster hardware can get around poorly designed or implemented software, it’s an uphill battle, possibly requiring significant improvements in hardware to get noticeable results.

Drivers and the operating system can also contribute to or exacerbate any observed bottleneck.

Bottlenecks have numerous causes, numerous points for investigation or solution, yet most Intel elitists will readily assume it’s the processor if it’s learned the person is running AMD.

The biggest reason to analyze bottlenecks is not just to determine the extent to which they exist, but to weigh the cost of alleviating them against the capacity that is gained. Any money that is going to be spent needs to be balanced by a commensurate increase in value and/or capacity. Even businesses are willing to live with bottlenecks in processes if it’s determined alleviating the bottleneck won’t result in a desirable return.

As such if you already have an AMD FX system, then it makes absolutely no sense changing over to an Intel system, in my opinion. Sure doing so would potentially alleviate a bottleneck, and while the determination of whether the expense is worth the upgrade is entirely up to you, I don’t see it as being worth it. The gains, which could be rather meager, don’t justify the costs. After all, if the gain in performance is one you’re likely to not notice, why undergo the time and expense of changing over? If you have a water cooled system, the expense is greater as you have to change not just the mainboard and CPU (memory can likely be re-used), but the water block as well.

Instead ask yourself this: what kind of performance are you seeking, is your desire reasonable, and can your system deliver it? In my case, the answer is an overwhelming yes. And with most other games I’m going to be playing, I see no reason to not believe that will hold true.

What matters in gaming is the minimum frame rate. So long as your system can deliver at least the refresh rate of your monitor at its highest resolution, you’re golden. And if it can do that with all settings in your game maxed out, even better. And, again, AMD paired with a good graphics card can deliver that.
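As a minimal sketch of that test, assuming you have logged a list of frame rate samples (from FRAPS or a similar tool) and know your monitor's refresh rate, the check is a single comparison. The function name `meets_refresh_rate` and the sample numbers are hypothetical.

```python
def meets_refresh_rate(frame_rates, refresh_hz=60):
    """Return True if the lowest logged frame rate is at least the refresh rate.

    frame_rates: frames-per-second samples logged during play
    refresh_hz: the monitor's refresh rate (60 is an assumed default)
    """
    if not frame_rates:
        raise ValueError("need at least one frame rate sample")
    # Only the minimum matters here; the average can hide dips.
    return min(frame_rates) >= refresh_hz


print(meets_refresh_rate([62, 75, 110, 140]))  # never dips below 60
print(meets_refresh_rate([58, 90, 120]))       # one dip below 60
```

The average of the second list is well above 60, which is exactly why I look at the minimum instead.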

But again, talk to any Intel elitist and they’ll likely say that is impossible. I’ve seen the comments all over the place.

Benchmarks

Benchmark scores have become little more than tokens of superiority — “yeah, well, my system can break 10000 on 3DMark Fire Strike while yours can’t”.

The problem with benchmarks is how misleading they can be at times. All benchmarks being synthetic, they measure a static block of code — be it an algorithm or 3D scene. This eliminates variables and gives a good base against which performance comparisons can be made. If you’re overclocking, they help determine if you’re gaining ground.

But here’s where many end up getting led astray: they focus only on one or a few scores. I’ve seen it a lot.

Using one benchmark to determine the true performance comparison between platforms is like taking a person’s time in a mile run and using it to extrapolate out to an entire marathon. For some, it will be an accurate extrapolation. For most, though, not quite. And the same with benchmarks.

This is why no reputable review site posts only a couple benchmark numbers. They tend to provide a wide range of benchmarks and frame rates. And here is where you learn one key detail: despite claims to the contrary, Intel does not have an absolute advantage over AMD, and where Intel does have the advantage, it’s not as striking as many believe.

This doesn’t stop the Intel elitists, and I’m sure I’ve only just enraged them even more.

TweakTown made a performance comparison of the FX-8350 vs the i7 4930K running GTX 780s in SLI and GTX 980s in SLI at 4K. In all measurements, the AMD processor gave the better scores. Unsurprisingly, the results were challenged by commenters. Many said that they should’ve used the i7 4770K instead. Kenny Rucka Jarvis made a prediction: “They should have compared it to a 4770k or 4790k which would have blown the 8350 out of the water.”

TweakTown did just that, and the results showed the i7 winning out, but it didn’t “blow the 8350 out of the water”. TweakTown had this to say:

Spending an additional $180, or another 50% or so on top of the AMD combo, results in some decent improvements in frame rate. The issue again is, the performance increase is only around ~10% on average, while you’re spending 50% more money. Some games are scaling much better, with improvements of 15-20%, but still – you’re spending $180 more.

Again the performance gain was not that significant in what they tested. While in some games the performance difference may be a bit more pronounced, look at the frame rates — in most cases the AMD processor was quite capable of keeping up with the Intel processor on both minimums and averages. Sure the 4770k won out, but it didn’t “blow the 8350 out of the water”.
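To put TweakTown's figures in performance-per-dollar terms, here is a small sketch. The only inputs are the percentages from the quote above (about 50% more money for about 10% more performance, or 20% in the best-scaling games); the function name `relative_value` is hypothetical.

```python
def relative_value(extra_cost_pct, extra_perf_pct):
    """Performance per dollar of the pricier option relative to the cheaper one.

    A result of 1.0 means equal value; below 1.0 means the pricier option
    delivers less performance for each dollar spent.
    """
    return (1 + extra_perf_pct / 100) / (1 + extra_cost_pct / 100)


average_case = relative_value(50, 10)  # ~10% faster for ~50% more money
best_case = relative_value(50, 20)     # the games that scaled by 20%

print(f"average case: {average_case:.2f}")
print(f"best case: {best_case:.2f}")
```

On those numbers the Intel combo delivers roughly 0.73 of the AMD combo's performance per dollar on average, and 0.80 in the best-scaling games. That says nothing about whether the extra frames are worth it to you, only what each one costs.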

In the comments to the 4K challenge involving the 4930K, Facebook commenter Gary Lefever observed “Show an AMD CPU in a positive light and the butthurt Intel warriors come out in droves! You’d think someone was talking about their mothers or something.” Pavan Biliyar responded:

I agree, although in defense of the butthurt, this article is somewhat biased, though not intentionally. All games tested were GPU-bound in addition to running them at really high resolutions and details, and likely single-player– all of which puts very little emphasis on impact of CPU. GPU-bound tends to favor AMD platforms by winning on perf/$, but they also defeat the purpose of upgrading as platforms from several year ago would end up with similar performance, whether we’re talking about a Phenom x6 or Core i5-700. Playing GPU-bound makes upgrade gain percentage per dollar unreasonable.

If you want a reason to upgrade the CPU, you should be playing CPU-bound, and therein lies a simple fix to make the butt hurt happy: CPU-bound scenarios will embarrass AMD every time. Trouble is that CPU-bound doesn’t favor fancy graphics card combinations if it even has proper multi-GPU support. I mean, that’s the whole idea, it’s CPU-bound. Except now we’re accidentally offending those butt hurt over their $350+ graphics setups, we’re not allowed to tell them they spent too much.

Of course getting more than one display can compensate and give those expensive graphics something to do, all while justifying an Intel platform– but wait, now we’re getting into a demographic that wouldn’t settle on AMD because it isn’t like they are strapped for cash. Making the comparison at this stage doesn’t make much sense. Although, I’d like to see a surround 4K review by Anthony Garreffa testing both platforms with maybe more graphics cards to compensate. Frame rates may be unplayable, but I’m more interested in how each platforms scales for their total price.

That being said, the rich stay rich because they are picky with their money, they don’t take the ‘spared no expense’ route– except when they go out and get Apple laptops. The majority of any salary bracket aren’t enthusiasts.

And that’s certainly a very striking observation. The majority of people who build a computer don’t care about anything more than getting what they need at the right price, and the question will come down to what will meet their needs. Benchmarks won’t tell you whether something will meet your needs, as benchmarks are merely performance comparisons involving static blocks of code, and so can lead people astray or cause them to overspend by leaps and bounds, meaning they’re not getting the most for their money.

Gamers are probably going to be a little more involved in their purchase decisions, but most computer buyers aren’t. Enthusiast gamers are the ones to avoid, in my opinion, as most that I’ve encountered don’t have any capability of thinking with real costs in mind. I said this on the Linus TechTips forum:

I’m not going for super ultra-high resolutions with framerates faster than your eye can see, let alone what my monitors can actually display. Obviously if you’re going for that, you’re not going to be running AMD, but you’re also not going to be running a 3rd generation Intel, and probably not even a 4th generation. You’re probably going to have a 5th generation Intel with multiple 980s.

Later in that post I followed up by saying “So the question comes down to what performance are you seeking, and can your system deliver it? If no, then figure out what to upgrade.”

The additional question is why you’re seeking that kind of performance. Are you merely trying to improve benchmark scores, or is your system no longer capable of delivering what you actually need, not what you think you need? Are you trying to compete with others purely on the egotistical notion of being able to brag about your system and the benchmarks and frame rates it can achieve, or can you save money and actually buy a system capable of delivering a decent experience that won’t send your bank account into the red or max out credit cards?

Conclusions

Now many Intel enthusiasts, or “Intel warriors” as mentioned earlier, will probably look at all of this and call it one giant case of “buyer’s remorse”: “Wow, you wrote all of that. That’s a lot of guilt over a bad purchase. Stop trying to defend your bad choices.” Creationists have said similar about those who defend evolution, calling it “going to a lot of effort to disprove God”. Many Intel enthusiasts have also adopted the point of view that it doesn’t matter what AMD puts out, it’s crap and should be avoided at all costs — similar to how many anti-vaxxers will almost automatically be against anything labeled a “vaccine”.

Or they might cop out and say “well your experience isn’t typical”, but the only thing atypical about my setup is the SLI configuration, that and the fact I’m using a 32″ television for a monitor (and it works quite well!).

The thing is that Intel doesn’t provide significant performance gains over AMD in virtually every measurement I’ve seen. It’s better, but not so significantly better as to, in my opinion, justify the cost. And an AMD FX processor will not bottleneck a graphics card!

Back in June of last year, I said this to a friend of mine on Facebook:

It’s like gamers saying “Yeah well, my system can do BF4 at 150fps, which demolishes yours which can only do 80fps”. Okay…. but can you tell the difference between 150fps and 80fps, or will both appear to be smooth renderings to the casual observer?

The AMD vs Intel gaming debate is just one giant dick-measuring contest, in which benchmarks and frame rates are substitutes for inches in length or girth, and claims that AMD will “bottleneck graphics cards” or is otherwise substandard are the substitute for insinuating a guy has a small prick, or can’t get a woman off, or what have you…

Whether a particular need can be adequately met by an AMD CPU is ignored in favor of pointing out that Intel can do it better. “I can get 150fps in Battlefield 4 while you can only get 80fps” is the equivalent of saying “I’ll bet she can cum a lot harder with my cock!” Yes, I’m intentionally making sexual remarks to show the absurdity and irrelevance of this whole discussion and the degree to which it’s blown out of proportion. The discussion has long ago lost any sense of rationality or sanity and has turned, in essence, into a substitute for competitions over prick size and sexual ability. (Perhaps that’s why I have no qualms going with AMD, as I have no problems satisfying my wife…)

Does it ultimately matter that Intel can do it better? If you answer that question in the affirmative, you need to rethink your perspective. For most, it won’t matter. For the relative few, they’re already aware it matters, and they already have other requirements that can only be met with higher-end hardware.

As I said on the Linus TechTips forum, quoted above, if you want to be able to play every game on the market maxed out at the highest resolutions, you’re not running an AMD processor. You’re probably not even running a 4th generation Intel (4xxx series i5 or i7). You’re probably running the i7-5960X in a system with two or three GTX 980s, and you’re probably waiting with eager anticipation for the release of the Titan X and Broadwell, with everything probably water-cooled and overclocked as far as you can take it.

In other words, if you’re a performance enthusiast, money is likely no object. And when money is no object, there is no competition possible. It’s a notion that we see time and time and time again, not just with computing but with everything else in life.

For everyone else, AMD is a viable option, so don’t overlook them. Yes the processor is a couple years old, but it’s still quite a contender. For gamers the GPU matters more anyway and, again, an FX processor will not bottleneck a graphics card — but that won’t stop elitists from continually saying it will.
